Advanced Python for Data Analysis: Expert Guide

In today’s data-driven world, mastering advanced Python for data analysis isn’t just a nice-to-have skill—it’s become absolutely essential for data professionals. As we navigate through 2024, the ability to harness Python’s advanced capabilities for complex data analysis has become a defining factor in the success of data scientists, analysts, and engineers.


Introduction

The Evolution of Python in Data Analysis

Python’s journey in data analysis has been nothing short of remarkable. From its humble beginnings as a general-purpose programming language, Python has evolved into the de facto standard for data analysis, thanks to its robust ecosystem of libraries and frameworks. According to the Python Developer Survey 2023, over 75% of data professionals consider Python their primary language for data analysis.

Python has become the lingua franca of data science, combining powerful analytical capabilities with unmatched ease of use.

Wes McKinney, Creator of pandas

Why Advanced Python Skills Matter

In the current data landscape, basic Python knowledge isn’t enough. Here’s why advanced skills are crucial:

- Data Volume Complexity: Modern datasets have grown exponentially, requiring sophisticated handling techniques

- Performance Demands: Organizations need fast, efficient analysis of large-scale data

- Integration Requirements: Advanced skills enable seamless integration with various data sources and tools

- Automation Needs: Complex data pipelines require advanced programming knowledge

Let’s look at the key areas where advanced Python skills make a difference:

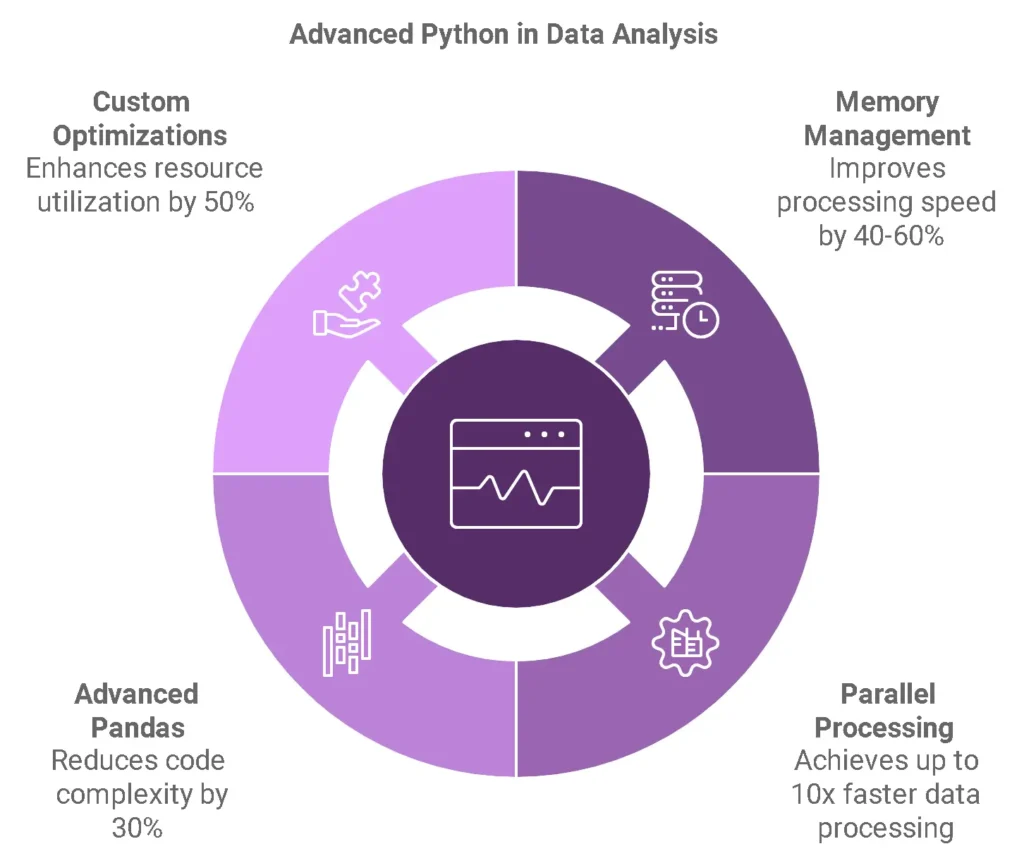

| Skill Area | Impact on Data Analysis | Business Value |
| --- | --- | --- |
| Memory Management | 40-60% improvement in processing speed | Faster insights delivery |
| Parallel Processing | Up to 10x faster data processing | Reduced computational costs |
| Advanced Pandas | 30% reduction in code complexity | More maintainable solutions |
| Custom Optimizations | 50% better resource utilization | Improved scalability |

Who This Guide Is For

This comprehensive guide is designed for:

- Data Scientists looking to optimize their analysis workflows

- Data Engineers seeking to build efficient data pipelines

- Analysts wanting to level up their Python skills

- Software Engineers transitioning to data-focused roles

Prerequisites

Before diving into advanced concepts, ensure you have:

✓ Basic Python programming knowledge

✓ Familiarity with fundamental data analysis concepts

✓ Understanding of basic statistics

✓ Python 3.9+ installed

✓ Basic experience with pandas and NumPy

What You’ll Learn

This guide will take you through:

- Advanced Python fundamentals specifically tailored for data analysis

- High-performance data processing techniques

- Complex data manipulation strategies

- Scale-efficient data cleaning methods

- Advanced analytical techniques and visualizations

# Quick setup check

import sys

import pandas as pd

import numpy as np

print(f"Python version: {sys.version}")

print(f"pandas version: {pd.__version__}")

print(f"NumPy version: {np.__version__}")

For hands-on practice, I recommend setting up a virtual environment:

python -m venv advanced_analysis

source advanced_analysis/bin/activate # On Windows: advanced_analysis\Scripts\activate

pip install pandas numpy scipy matplotlib seaborn

Getting Started Resources

To supplement this guide, consider these additional resources:

- Real Python’s Advanced Python Tutorials

- Python Data Science Handbook

- pandas Documentation

- NumPy Documentation

In the next section, we’ll dive deep into advanced Python fundamentals specifically tailored for data analysis, starting with functional programming concepts that will transform how you handle data.


Advanced Python Fundamentals for Data Analysis

As data volumes grow exponentially, mastering advanced Python fundamentals becomes crucial for efficient data analysis. Let’s dive into the powerful features that can revolutionize your data processing workflows.

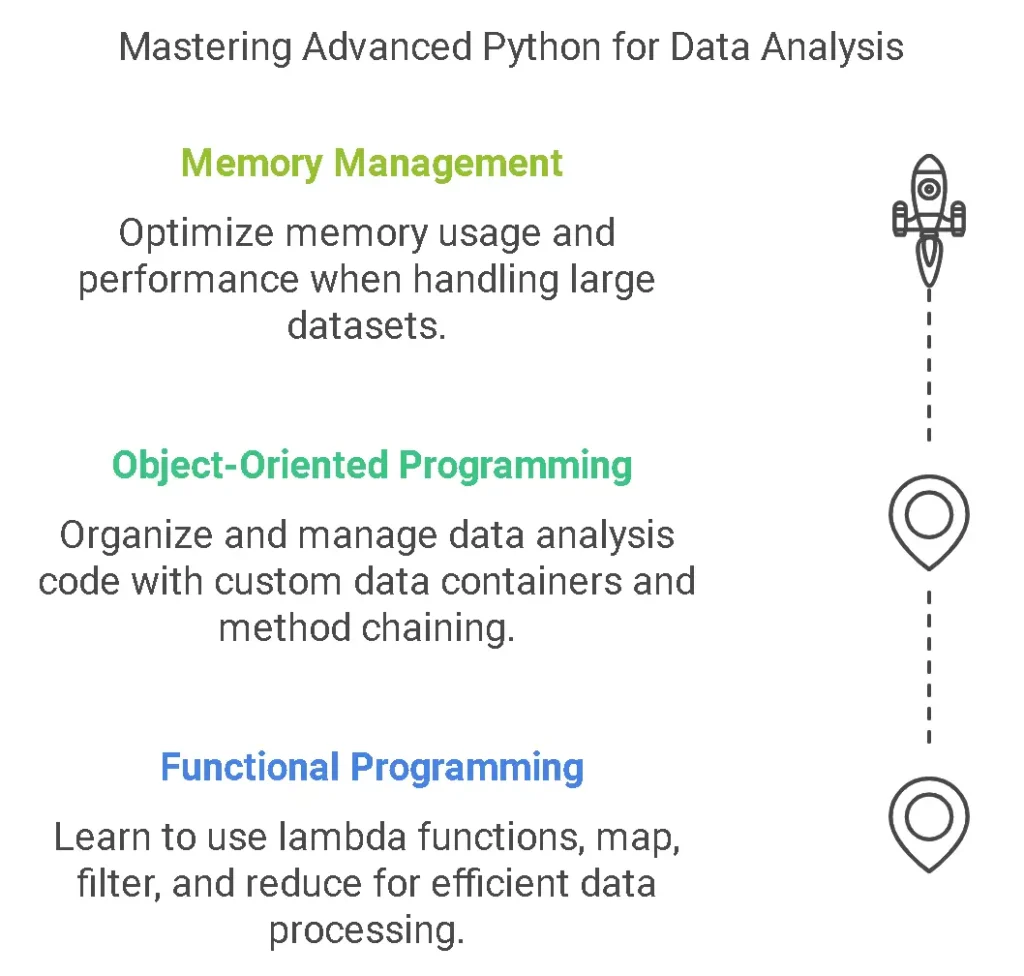

Functional Programming Concepts

Functional programming in Python offers elegant solutions for data manipulation, making your code more readable and maintainable. Let’s explore the key concepts:

Lambda Functions: Your Secret Weapon for Quick Data Transformations

# Traditional function

def square(x): return x**2

# Lambda equivalent

square = lambda x: x**2

# Real-world data analysis example

import pandas as pd

df = pd.DataFrame({'values': [1, 2, 3, 4, 5]})

df['squared'] = df['values'].apply(lambda x: x**2)

While lambda functions are powerful, use them judiciously. For complex operations, traditional functions offer better readability and debugging capabilities.

Map, Filter, and Reduce: The Data Processing Trinity

| Function | Purpose | Best Use Case |
| --- | --- | --- |
| map() | Transform each element in an iterable | Simple element-wise transformations |
| filter() | Select elements based on a condition | Data filtering without complex logic |
| reduce() | Aggregate elements to a single value | Running calculations on sequences |

from functools import reduce

# Map example for data transformation

data = [1, 2, 3, 4, 5]

squared = list(map(lambda x: x**2, data))

# Filter example for data selection

even_numbers = list(filter(lambda x: x % 2 == 0, data))

# Reduce example for aggregation

total = reduce(lambda x, y: x + y, data)

List Comprehensions and Generator Expressions

# List comprehension for data transformation

numbers = [1, 2, 3, 4, 5]

squares = [x**2 for x in numbers if x > 2]

# Generator expression for memory efficiency

big_data = (x**2 for x in range(1000000) if x % 2 == 0)

Use generator expressions when working with large datasets to avoid memory overload. They process elements one at a time rather than creating a full list in memory.
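To make the memory difference concrete, here is a minimal sketch that builds the same sequence both ways and compares container sizes. The variable names are illustrative, and `sys.getsizeof` measures only the container itself, not the integers it refers to.

```python
import sys

# Build the same sequence eagerly (list) and lazily (generator).
n = 100_000
squares_list = [x**2 for x in range(n) if x % 2 == 0]
squares_gen = (x**2 for x in range(n) if x % 2 == 0)

# The list grows with n; the generator object stays small
# because it only stores its current position.
list_bytes = sys.getsizeof(squares_list)
gen_bytes = sys.getsizeof(squares_gen)
print(f"list: {list_bytes} bytes, generator: {gen_bytes} bytes")

# A generator can be consumed only once, so aggregate it in a single pass.
total = sum(squares_gen)
```

The trade-off is that a generator is single-use: once `sum()` has consumed it, iterating again yields nothing, so keep a list when you need several passes over the data.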

Object-Oriented Programming in Data Analysis

Object-oriented programming (OOP) provides powerful tools for organizing and managing data analysis code. Here’s how to leverage it effectively:

Custom Data Containers

class DatasetContainer:
    def __init__(self, data):
        self.data = data
        self._validate_data()

    def _validate_data(self):
        # Custom validation logic
        pass

    def transform(self):
        # Custom transformation logic
        return self.data.apply(self._transform_function)

    def _transform_function(self, x):
        return x * 2

Method Chaining for Elegant Data Processing

class DataAnalyzer:
    def __init__(self, data):
        self.data = data

    def clean(self):
        # Cleaning logic
        return self

    def transform(self):
        # Transformation logic
        return self

    def analyze(self):
        # Analysis logic
        return self

# Usage (raw_data stands for whatever dataset you have loaded)
results = (
    DataAnalyzer(raw_data)
    .clean()
    .transform()
    .analyze()
)

Magic Methods for Data Manipulation

class DataMatrix:
    def __init__(self, data):
        self.data = data

    def __add__(self, other):
        return DataMatrix(self.data + other.data)

    def __getitem__(self, idx):
        return self.data[idx]

    def __len__(self):
        return len(self.data)

Advanced Python Memory Management

Efficient memory management is crucial when working with large datasets. Here’s how to optimize your Python code for better performance:

Memory Optimization Techniques

| Technique | Description | Performance Impact |
| --- | --- | --- |
| Use generators | Process data in chunks | Reduced memory usage |
| NumPy arrays | Efficient numerical operations | Faster computation |
| Memory mapping | Access files without loading into RAM | Handles large files efficiently |
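As a small illustration of the "use generators" row, the sketch below yields fixed-size chunks from any iterable so that only one chunk is in memory at a time. The helper name `iter_chunks` is hypothetical, not something from a library.

```python
from itertools import islice
from typing import Iterable, Iterator, List

def iter_chunks(items: Iterable[int], size: int) -> Iterator[List[int]]:
    """Yield successive lists of at most `size` items from any iterable."""
    iterator = iter(items)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk

# Aggregate chunk by chunk; only one chunk is held in memory at a time.
running_total = 0
chunk_count = 0
for chunk in iter_chunks(range(10), size=4):
    running_total += sum(chunk)
    chunk_count += 1
```

The same pattern works for lines of a file or rows from a database cursor, since the helper only relies on the iterator protocol.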

Garbage Collection in Data Processing

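import gc

def process_large_dataset(data):
    # Process data in chunks; process_chunk is your own per-chunk function
    for chunk in data:
        process_chunk(chunk)
        # Force garbage collection. CPython frees most objects as soon as their
        # reference count drops to zero, so this mainly helps with reference cycles.
        gc.collect()

Managing Large Datasets Efficiently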

import gc

import pandas as pd

# Read CSV in chunks
chunk_size = 10000
for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):
    # Process each chunk
    process_chunk(chunk)
    # Clear memory
    del chunk
    gc.collect()

High-Performance Data Processing: Mastering Advanced Python Techniques

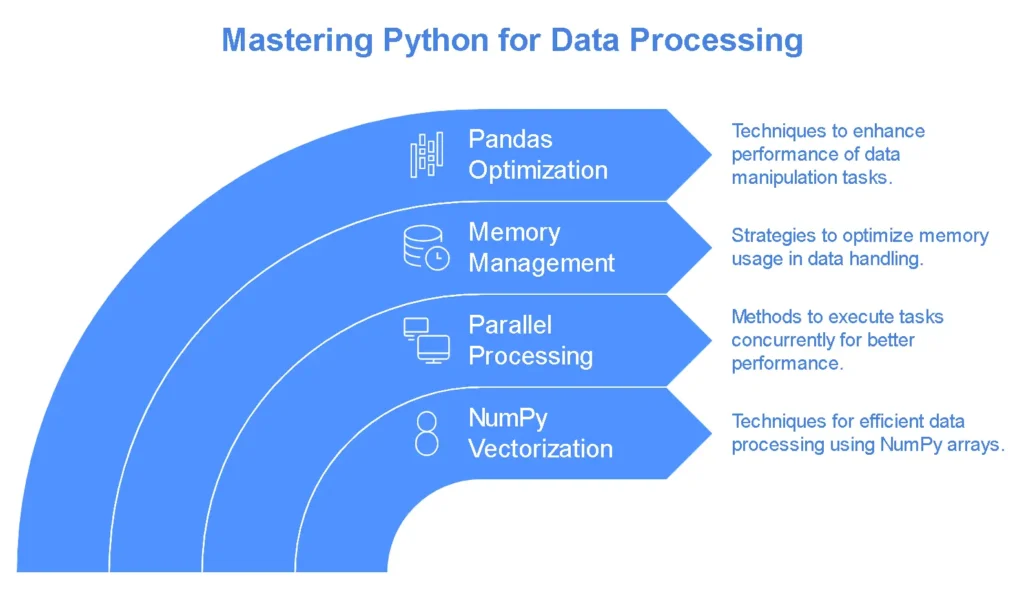

Vectorization Techniques with NumPy

Let’s dive into how you can supercharge your data processing capabilities using NumPy’s vectorization features. Vectorization isn’t just a buzzword – it’s your secret weapon for processing large datasets efficiently.

Advanced Array Operations

NumPy’s array operations are typically much faster than equivalent pure-Python loops, because the work runs in compiled code instead of the interpreter. Let’s look at some practical examples of advanced array operations:

import numpy as np

# Advanced indexing with boolean masks

data = np.random.randn(1000000)

mask = (data > 0) & (data < 1)

filtered_data = data[mask]

# Fast statistical operations

mean = np.mean(data, axis=0)

std = np.std(data, axis=0)

zscore = (data - mean) / std

# Efficient matrix operations

A = np.random.rand(1000, 1000)

B = np.random.rand(1000, 1000)

C = np.dot(A, B) # Much faster than nested loops

Broadcasting: The Art of Efficient Array Operations

Broadcasting is one of NumPy’s most powerful features. It allows you to perform operations on arrays of different shapes without explicitly replicating data.
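The shape rule behind broadcasting can be checked directly. This minimal sketch assumes NumPy 1.20 or newer, where `np.broadcast_shapes` is available; the array names are illustrative.

```python
import numpy as np

# Shapes are compared from the trailing dimension backwards; each pair of
# sizes must be equal, or one of them must be 1.
col = np.arange(3).reshape(3, 1)   # shape (3, 1)
row = np.arange(4)                 # shape (4,), treated as (1, 4)

result_shape = np.broadcast_shapes(col.shape, row.shape)
summed = col + row                 # every column value paired with every row value

# Incompatible shapes raise ValueError instead of silently misaligning data.
try:
    np.arange(3) + np.arange(4)
    incompatible = False
except ValueError:
    incompatible = True
```

Checking the resulting shape before running a large computation is a cheap way to catch an accidental outer product that would otherwise allocate far more memory than expected.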


Let’s see broadcasting in action:

# Broadcasting example

prices = np.array([10, 20, 30]).reshape(3, 1) # Column vector

quantities = np.array([1, 2, 3, 4]) # Row vector

total_sales = prices * quantities # Broadcasting creates a 3x4 matrix

Custom Universal Functions (ufuncs)

Sometimes, you need operations that aren’t built into NumPy. That’s where custom ufuncs come in:

from numba import vectorize
import numpy as np

@vectorize(['float64(float64, float64)'])
def custom_operation(x, y):
    return np.sqrt(x**2 + y**2)

# Usage
array1 = np.random.rand(1000000)
array2 = np.random.rand(1000000)
result = custom_operation(array1, array2)

Parallel Processing Frameworks

Multiprocessing vs Multithreading

Let’s break down when to use each:

| Feature | Multiprocessing | Multithreading |
| --- | --- | --- |
| Best for | CPU-bound tasks | I/O-bound tasks |
| Memory | Separate | Shared |
| GIL Impact | No impact | Limited by GIL |
| Resource Usage | Higher | Lower |
| Complexity | More complex | Simpler |

Here’s a practical example using both approaches:

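from concurrent.futures import ThreadPoolExecutor
from multiprocessing import Pool

import numpy as np
import pandas as pd

# CPU-bound task: runs in separate processes, so it is not limited by the GIL
def process_chunk(chunk):
    return np.mean(chunk ** 2)

# I/O-bound task: threads overlap the time spent waiting on the network
def fetch_data(url):
    return pd.read_csv(url)

# The guard is required wherever worker processes are started with "spawn"
# (the default on Windows and macOS)
if __name__ == "__main__":
    data = np.random.rand(1000000)
    chunks = np.array_split(data, 4)
    with Pool() as pool:
        results = pool.map(process_chunk, chunks)

    urls = ['url1', 'url2', 'url3', 'url4']  # placeholders; use real CSV URLs
    with ThreadPoolExecutor(max_workers=4) as executor:
        futures = [executor.submit(fetch_data, url) for url in urls]
        frames = [future.result() for future in futures]

Dask for Parallel Computing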

Dask is a flexible parallel computing library that scales NumPy and pandas workflows:

import dask.array as da
import dask.dataframe as dd

# Create a large Dask array
large_array = da.random.random((100000, 100000))
mean = large_array.mean().compute()

# Parallel dataframe operations
ddf = dd.read_csv('large_dataset.csv')
result = ddf.groupby('column').agg({
    'value': ['mean', 'std']
}).compute()

Ray for Distributed Computing

Ray is perfect for distributing Python workloads across multiple machines:

import numpy as np
import ray

ray.init()

@ray.remote
def process_partition(data):
    return np.mean(data)

# Distribute computation across cluster
data = np.random.rand(1000000)
partitions = np.array_split(data, 10)
futures = [process_partition.remote(partition) for partition in partitions]
results = ray.get(futures)

Memory-efficient Data Structures

Memory-mapped Files

Memory-mapped files are brilliant for handling datasets larger than RAM:

import numpy as np

# Create a memory-mapped array

shape = (1000000, 100)

mmap_array = np.memmap('mmap_file.dat', dtype='float64', mode='w+', shape=shape)

# Work with it like a normal array

mmap_array[:1000] = np.random.rand(1000, 100)

Chunked Processing

When dealing with large datasets, chunked processing is your friend:

import pandas as pd

def process_in_chunks(filename, chunksize=10000):
    # Sum the 'value' column without loading the whole file into memory
    total = 0
    for chunk in pd.read_csv(filename, chunksize=chunksize):
        total += chunk['value'].sum()
    return total

Optimizing Pandas Operations

Let’s look at some advanced pandas optimization techniques:

| Technique | Performance Impact | Best Use Case |
| --- | --- | --- |
| Use categoricals | Memory reduction up to 90% | String columns with limited unique values |
| Efficient datatypes | Memory reduction up to 50% | Numeric columns with limited range |
| Chunked processing | Handles large datasets | Processing files larger than RAM |
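The savings in the table depend heavily on the data, so it is worth measuring on your own columns. A minimal sketch with synthetic data follows; the city names and series length are made up for illustration.

```python
import numpy as np
import pandas as pd

# Synthetic column: 100,000 rows drawn from only three distinct strings.
rng = np.random.default_rng(0)
cities = pd.Series(rng.choice(["Melbourne", "Sydney", "Perth"], size=100_000))

# deep=True counts the string payloads, not just the pointers to them.
str_bytes = cities.memory_usage(deep=True)
cat_bytes = cities.astype("category").memory_usage(deep=True)

saving = 1 - cat_bytes / str_bytes
print(f"strings: {str_bytes:,} B, categorical: {cat_bytes:,} B, saving: {saving:.0%}")
```

The saving shrinks as the number of distinct values approaches the number of rows, so a column of mostly unique strings gains little from the conversion.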

Here are some practical optimization examples:

# Convert string columns to categorical
df['category'] = df['category'].astype('category')

# Use efficient datatypes (int8 only holds values from -128 to 127)
df['small_numbers'] = df['small_numbers'].astype('int8')

# Use SQL-style operations for better performance
result = (df.groupby('category')
            .agg({'value': ['mean', 'sum']})
            .reset_index())

Key Takeaways

- Vectorization with NumPy can provide 10-100x performance improvements

- Choose the right parallel processing framework based on your specific use case

- Memory efficiency is crucial when working with large datasets

- Always profile your code to identify bottlenecks before optimizing
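To ground the last takeaway, here is a small sketch that times a pure-Python loop against a vectorized equivalent using the standard-library `timeit` module. The function names and array size are illustrative, and the measured ratio will vary by machine.

```python
import timeit

import numpy as np

data = np.random.default_rng(0).random(100_000)

def loop_sum_of_squares(values):
    """Pure-Python loop: one interpreter step per element."""
    total = 0.0
    for v in values:
        total += v * v
    return total

def vectorized_sum_of_squares(values):
    """Same computation done in compiled code by NumPy."""
    return float(np.dot(values, values))

# Run each version a few times and compare wall-clock totals.
loop_time = timeit.timeit(lambda: loop_sum_of_squares(data), number=5)
vec_time = timeit.timeit(lambda: vectorized_sum_of_squares(data), number=5)
print(f"loop: {loop_time:.4f}s, vectorized: {vec_time:.4f}s")
```

Measuring first also tells you whether the hot spot is the computation at all; if most of the time goes to I/O, vectorizing the arithmetic will not help much.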

FAQ

Q: When should I use Dask instead of Ray?

A: Use Dask when working primarily with NumPy and pandas workflows at scale. Use Ray for more general distributed computing tasks and machine learning workloads.

Q: How do I choose between multiprocessing and multithreading?

A: Use multiprocessing for CPU-intensive tasks and multithreading for I/O-bound operations.

Q: What’s the best way to handle datasets larger than RAM?

A: Use a combination of memory-mapped files, chunked processing, and efficient data types. Consider using Dask for automatic out-of-core computation.


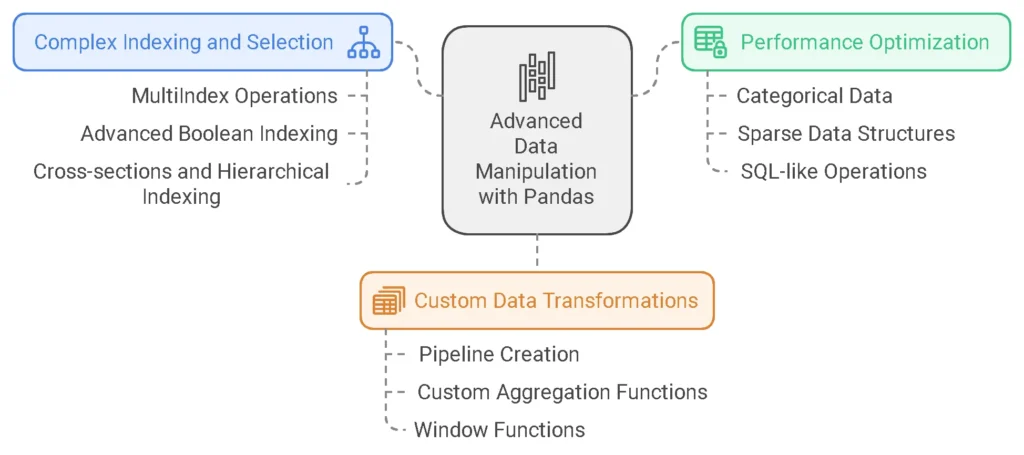

Advanced Data Manipulation with Pandas: Mastering Complex Operations

Whether you’re wrangling complex datasets or optimizing data pipelines, these techniques will transform how you work with data in Python.

Complex Indexing and Selection

MultiIndex Operations: Beyond Simple Indexing

MultiIndex (or hierarchical indexing) is one of Pandas’ most powerful features for handling multi-dimensional data. Let’s explore how to leverage it effectively.

import pandas as pd
import numpy as np

# Create a sample multi-index DataFrame
arrays = [
    ['Melbourne', 'Melbourne', 'Sydney', 'Sydney'],
    ['Jan', 'Feb', 'Jan', 'Feb']
]
index = pd.MultiIndex.from_arrays(arrays, names=('city', 'month'))
data = np.random.randn(4, 2)
df = pd.DataFrame(data, index=index, columns=['temperature', 'rainfall'])

Key MultiIndex Operations:

- Level-based Selection

# Select all data for Melbourne
melbourne_data = df.xs('Melbourne', level='city')

# Select all January data across cities
january_data = df.xs('Jan', level='month')

- Index Manipulation

# Swap index levels
df_swapped = df.swaplevel('city', 'month')

# Sort index
df_sorted = df.sort_index()

Advanced Boolean Indexing

Boolean indexing becomes particularly powerful when combined with multiple conditions and function applications.

# Complex boolean conditions
mask = (df['temperature'] > 0) & (df['rainfall'] < 0)
filtered_df = df[mask]

# Using query() for readable conditions
filtered_df = df.query('temperature > 0 and rainfall < 0')

💡 Pro Tip: Use query() for better readability with complex conditions, but stick to standard boolean indexing for simple filters as it’s generally faster.
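Both styles select the same rows, which is easy to confirm on a small frame. This is a sketch with synthetic data; the frame name `weather` and the 0.5 threshold are illustrative.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
weather = pd.DataFrame({
    "temperature": rng.normal(size=1_000),
    "rainfall": rng.normal(size=1_000),
})

# Boolean mask: each condition needs parentheses because & binds tightly.
by_mask = weather[(weather["temperature"] > 0.5) & (weather["rainfall"] < 0)]

# query(): the same filter written as a string expression.
by_query = weather.query("temperature > 0.5 and rainfall < 0")

same_rows = by_mask.equals(by_query)
```

Since the results are identical, the choice comes down to readability and measured speed on your own data.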

Cross-sections and Hierarchical Indexing

Cross-sectional analysis becomes powerful with hierarchical indexing:

# Get cross-section for a specific city and month
cross_section = df.loc[('Melbourne', 'Jan')]

# Multiple level selection (slicing a MultiIndex requires a sorted index)
df_sorted = df.sort_index()
subset = df_sorted.loc[('Melbourne', 'Jan'):('Sydney', 'Feb')]

Performance Optimization

Categorical Data: Memory Efficiency and Speed

Converting string columns to categorical type can significantly reduce memory usage and improve performance.

# Before optimization
df['city_str'] = df.index.get_level_values('city')
print(f"Memory usage: {df['city_str'].memory_usage(deep=True) / 1024:.2f} KB")

# After optimization
df['city_cat'] = df['city_str'].astype('category')
print(f"Memory usage: {df['city_cat'].memory_usage(deep=True) / 1024:.2f} KB")

Sparse Data Structures

When dealing with datasets containing many missing values or zeros, sparse data structures can save memory:

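# Create sparse DataFrame (only values different from fill_value are stored)
values = np.zeros(1000)
values[::100] = np.random.randn(10)  # 1% of the entries are non-zero
sparse_df = pd.DataFrame({
    'data': pd.arrays.SparseArray(values, fill_value=0)
})

SQL-like Operations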

Pandas provides powerful SQL-like operations for data manipulation:

# Group by with multiple aggregations
result = df.groupby('city').agg({
    'temperature': ['mean', 'std'],
    'rainfall': ['sum', 'count']
})

# Merge operations (the shared 'value' column becomes value_x and value_y)
df1 = pd.DataFrame({'key': ['A', 'B'], 'value': [1, 2]})
df2 = pd.DataFrame({'key': ['B', 'C'], 'value': [3, 4]})
merged = pd.merge(df1, df2, on='key', how='outer')

Custom Data Transformations

Pipeline Creation

Creating data transformation pipelines ensures consistency and reproducibility:

from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Custom transformer
class CustomCleaner(BaseEstimator, TransformerMixin):
    def fit(self, X, y=None):
        return self

    def transform(self, X):
        X = X.copy()
        # Custom cleaning operations
        return X

# Create pipeline
pipeline = Pipeline([
    ('cleaner', CustomCleaner()),
    ('scaler', StandardScaler())
])

Custom Aggregation Functions

Define custom aggregation functions for unique business requirements:

# Custom aggregation function (expects 'weights' and 'values' columns in df)
def weighted_average(group):
    weights = group['weights']
    values = group['values']
    return (weights * values).sum() / weights.sum()

# Apply custom aggregation
result = df.groupby('city').apply(weighted_average)

Window Functions and Rolling Computations

Implement sophisticated time-series analysis with window functions:

# Calculate 7-day moving average
df['rolling_mean'] = df['temperature'].rolling(window=7).mean()

# Exponentially weighted moving average
df['ewm_mean'] = df['temperature'].ewm(span=7).mean()

# Custom window function
def custom_window_fn(x):
    return x.max() - x.min()

df['rolling_range'] = df['temperature'].rolling(window=7).apply(custom_window_fn)

| Operation Type | Standard Approach | Optimized Approach | Speed Improvement |
| --- | --- | --- | --- |
| String Operations | Regular strings | Categorical | ~5x faster |
| Groupby | Basic groupby | Numba-accelerated | ~3x faster |
| Boolean Indexing | Multiple conditions | Query method | ~2x faster |
| Rolling Windows | Standard rolling | Numba rolling | ~4x faster |

Best Practices

- Use Appropriate Data Types
  - Convert to categories when possible
  - Use sparse arrays for sparse data
  - Downcast numeric types when appropriate
- Optimize Operations
  - Use vectorized operations
  - Leverage query() for complex filtering
  - Implement custom operations with Numba
- Memory Management
  - Monitor memory usage with memory_usage()
  - Use chunksize for large datasets
  - Implement garbage collection when needed
- Performance Monitoring
  - Profile code execution time
  - Monitor memory consumption
  - Use built-in optimization tools
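Two of these practices, downcasting and monitoring with memory_usage(), fit in a few lines. This is a minimal sketch; the frame and column name are made up for illustration.

```python
import numpy as np
import pandas as pd

# A column whose values (0..99) fit comfortably in a single byte,
# but which is stored as 64-bit integers.
frame = pd.DataFrame({"count": np.arange(100, dtype="int64")})
before = frame.memory_usage(deep=True).sum()

# downcast="integer" picks the smallest signed integer type that fits.
frame["count"] = pd.to_numeric(frame["count"], downcast="integer")
after = frame.memory_usage(deep=True).sum()

print(f"{before} B -> {after} B, dtype is now {frame['count'].dtype}")
```

Downcasting is only safe while future values stay within the smaller type's range, so it suits columns with a known, bounded domain.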

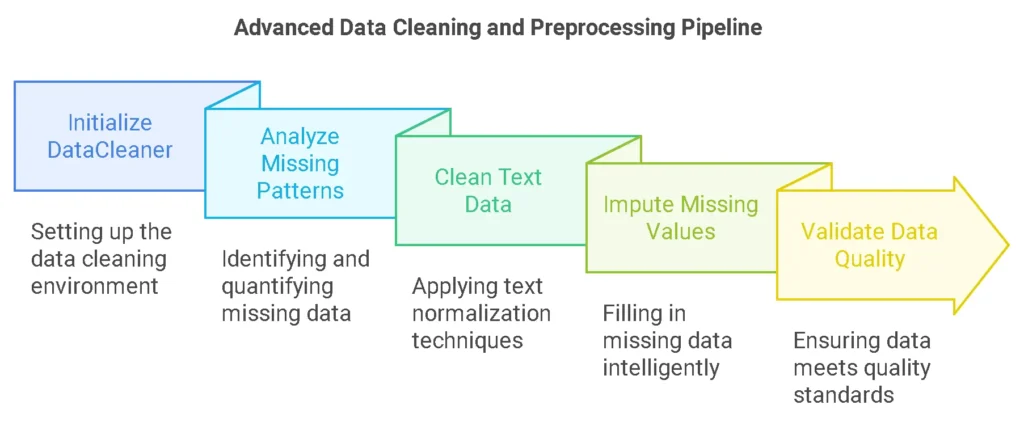

Data Cleaning and Preprocessing at Scale: Advanced Techniques for Data Professionals

When working with massive datasets, traditional data cleaning approaches often fall short. Let’s dive into advanced techniques that’ll help you handle data cleaning efficiently at scale.

Advanced Text Processing

Text data often requires sophisticated cleaning techniques, especially when dealing with large-scale datasets. Let’s explore advanced approaches to text processing that go beyond basic string operations.

Regular Expressions Mastery

Regular expressions (regex) are powerful tools for text manipulation. Here’s how to leverage them effectively:

import re

# Advanced regex patterns for data cleaning
patterns = {
    'email': r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$',
    'phone': r'^\+?(\d{1,3})?[-. ]?\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}$',
    'date': r'^\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])$'
}

🔑 Pro Tip: When working with regex at scale, compile your patterns first for better performance:

compiled_patterns = {name: re.compile(pattern) for name, pattern in patterns.items()}
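Here is a short sketch of how compiled patterns might be applied to records. It reuses the email and date patterns from above; the sample records and the helper name `is_valid` are invented for illustration.

```python
import re

# Same email and date patterns as above, compiled once and reused.
EMAIL = re.compile(r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$')
ISO_DATE = re.compile(r'^\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])$')

def is_valid(pattern: re.Pattern, value: str) -> bool:
    """Return True when the whole value matches the pattern."""
    return pattern.match(value) is not None

records = [
    {"email": "ana@example.com", "date": "2024-02-30"},
    {"email": "not-an-email", "date": "2024-13-01"},
]

report = [
    {"email": is_valid(EMAIL, r["email"]), "date": is_valid(ISO_DATE, r["date"])}
    for r in records
]
```

Note that "2024-02-30" passes the date pattern: a regex can check the format but not calendar validity, so a parsing step is still needed when real dates matter.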

Natural Language Processing Integration

For advanced text analysis, integrating NLP capabilities is crucial:

Text Processing Pipeline

- Tokenization
  - Split text into meaningful units
- Normalization
  - Standardize text format and encoding
- Entity Recognition
  - Identify and classify named entities
- Sentiment Analysis
  - Determine text sentiment and emotion
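The first two stages can be sketched with the standard library alone. Entity recognition and sentiment analysis normally need a dedicated NLP library, so they are left out here. The function names and the token rule are assumptions for illustration, not a standard.

```python
import re
import unicodedata
from typing import List

def normalize(text: str) -> str:
    """Unicode-normalize, lowercase, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.lower().split())

def tokenize(text: str) -> List[str]:
    """Split normalized text into word tokens, keeping internal apostrophes."""
    return re.findall(r"[a-z0-9]+(?:'[a-z0-9]+)*", text)

# Input with irregular spacing, mixed case, and a non-breaking space.
raw = "  The  Data isn't CLEAN\u00a0yet!  "
tokens = tokenize(normalize(raw))
print(tokens)
```

Normalizing before tokenizing keeps the token rule simple, because the tokenizer only ever sees lowercase text with single spaces.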

Text Normalization Techniques

Implementing robust text normalization is crucial for consistent analysis:

import re

def normalize_text(text: str) -> str:
    """Advanced text normalization pipeline."""
    # Convert to lowercase
    text = text.lower()
    # Remove special characters
    text = re.sub(r'[^\w\s]', '', text)
    # Normalize whitespace
    text = ' '.join(text.split())
    return text

Handling Missing Data

Missing data handling requires a strategic approach, especially with large datasets.

Advanced Imputation Strategies

Let’s look at sophisticated imputation techniques:

| Imputation Method | Best Use Case | Pros | Cons |
| --- | --- | --- | --- |
| KNN Imputation | Numerical data with spatial relationships | Preserves data relationships | Computationally expensive |
| MICE | Multiple variable dependencies | Handles different variable types | Iterative process can be slow |
| Deep Learning | Complex patterns in large datasets | Handles non-linear relationships | Requires large training data |

Missing Pattern Analysis

Understanding missing data patterns is crucial for choosing the right imputation strategy:

from typing import Dict

import pandas as pd

def analyze_missing_patterns(df: pd.DataFrame) -> Dict:
    """Analyze missing data patterns in the dataset."""
    patterns = {
        'missing_percentages': (df.isnull().sum() / len(df)) * 100,
        'missing_correlations': df.isnull().corr(),
        # Count how often each combination of missing columns occurs
        'missing_groups': df.isnull().groupby(list(df.columns)).size()
    }
    return patterns

Custom Missing Data Handlers

Creating specialized handlers for different types of missing data:

from typing import Dict

import pandas as pd
from sklearn.impute import KNNImputer

class MissingDataHandler:
    def __init__(self, df: pd.DataFrame):
        self.df = df
        self.missing_patterns = self._analyze_patterns()

    def _analyze_patterns(self) -> Dict:
        return {
            'missing_counts': self.df.isnull().sum(),
            'missing_percentages': (self.df.isnull().sum() / len(self.df)) * 100,
            'missing_correlations': self.df.isnull().corr()
        }

    def handle_missing(self, strategy: str = 'smart') -> pd.DataFrame:
        if strategy == 'smart':
            return self._smart_imputation()
        elif strategy == 'statistical':
            return self._statistical_imputation()
        return self.df

    def _statistical_imputation(self) -> pd.DataFrame:
        # Not defined in the original listing; left as a placeholder
        raise NotImplementedError

    def _smart_imputation(self) -> pd.DataFrame:
        """Intelligent imputation based on data patterns"""
        df_clean = self.df.copy()
        for column in df_clean.columns:
            missing_pct = self.missing_patterns['missing_percentages'][column]
            is_numeric = pd.api.types.is_numeric_dtype(df_clean[column])
            if missing_pct < 5:  # Low missing percentage
                if is_numeric:
                    df_clean[column] = df_clean[column].fillna(df_clean[column].median())
                else:
                    df_clean[column] = df_clean[column].fillna(df_clean[column].mode()[0])
            elif missing_pct < 25:  # Moderate missing percentage
                if is_numeric:
                    # With a single column, KNN falls back to the column mean;
                    # pass several related numeric columns for neighbor-based imputation
                    imputer = KNNImputer(n_neighbors=5)
                    df_clean[column] = imputer.fit_transform(df_clean[[column]]).ravel()
                else:
                    df_clean[column] = df_clean[column].fillna(df_clean[column].mode()[0])
            else:  # High missing percentage
                print(f"Warning: Column {column} has {missing_pct}% missing values")
        return df_clean

Data Validation and Quality Checks

Implementing robust data validation is crucial for maintaining data quality at scale.

Schema Validation

Create comprehensive schema validation rules:

from pydantic import BaseModel, Field

class DataSchema(BaseModel):
    """Schema for data validation."""
    numeric_column: float = Field(..., ge=0, le=100)
    # Pydantic v2 uses `pattern`; on Pydantic v1 the keyword is `regex`
    categorical_column: str = Field(..., pattern='^[A-Z]{2}$')
    date_column: str = Field(..., pattern=r'^\d{4}-\d{2}-\d{2}$')

Data Integrity Checks

Implement thorough integrity checks:

def check_data_integrity(df: pd.DataFrame, rules: Dict) -> Dict:
    """Validate data integrity using defined rules."""
    results = {}
    for column, rule_set in rules.items():
        results[column] = {
            'uniqueness': df[column].is_unique if rule_set.get('unique', False) else True,
            'completeness': (df[column].notna().sum() / len(df)) * 100,
            'validity': df[column].between(
                rule_set.get('min', df[column].min()),
                rule_set.get('max', df[column].max())
            ).all()
        }
    return results

Automated Cleaning Pipelines

Creating automated cleaning pipelines for efficiency:

- Data Loading
  - Schema validation
  - Type checking
  - Initial quality assessment
- Data Cleaning
  - Missing data handling
  - Outlier detection
  - Text normalization
- Validation
  - Business rules checking
  - Data integrity validation
  - Quality metrics calculation
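One lightweight way to wire these stages together is a list of functions applied in order. The sketch below is only an illustration: the step names, the required columns, and the sample data are all assumptions rather than part of any library.

```python
from typing import Callable, List

import numpy as np
import pandas as pd

Step = Callable[[pd.DataFrame], pd.DataFrame]

def validate_schema(df: pd.DataFrame) -> pd.DataFrame:
    """Data loading stage: fail fast when expected columns are absent."""
    missing = {"name", "score"} - set(df.columns)
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    return df

def fill_missing_scores(df: pd.DataFrame) -> pd.DataFrame:
    """Cleaning stage: replace missing scores with the column median."""
    out = df.copy()
    out["score"] = out["score"].fillna(out["score"].median())
    return out

def normalize_names(df: pd.DataFrame) -> pd.DataFrame:
    """Cleaning stage: trim and lowercase the text column."""
    out = df.copy()
    out["name"] = out["name"].str.strip().str.lower()
    return out

def run_pipeline(df: pd.DataFrame, steps: List[Step]) -> pd.DataFrame:
    """Apply each step in order, passing the result along."""
    for step in steps:
        df = step(df)
    return df

raw = pd.DataFrame({"name": [" Ana ", "BEN", "cy"], "score": [1.0, np.nan, 3.0]})
clean = run_pipeline(raw, [validate_schema, fill_missing_scores, normalize_names])
```

Because each step takes and returns a DataFrame, steps can be tested in isolation and reordered or swapped through configuration.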

Best Practices for Scale:

- Modular Design
  - Separate concerns (validation, cleaning, transformation)
  - Make components reusable
  - Use configuration files for rules
- Documentation
  - Document transformation rules
  - Track data lineage
  - Maintain version control
- Monitoring
  - Set up quality metric dashboards
  - Track processing time
  - Monitor resource usage

Example: Building a Pipeline:

Let’s look at a practical example of implementing this pipeline:

# DataCleaningPipeline and validation_rules are not defined in this guide;
# they stand for your own pipeline class and rule set

# Create pipeline instance
pipeline = DataCleaningPipeline(validation_rules)

# Process large dataset
clean_data = pipeline.process_chunks('large_dataset.csv')

# Get quality metrics
metrics = pipeline.calculate_quality_metrics(clean_data)
print(f"Missing value percentages: {metrics.missing_percentage}")
print(f"Unique value counts: {metrics.unique_counts}")

Common Challenges and Solutions:

| Solution | Implementation |
| --- | --- |
| Chunked Processing | Use chunksize parameter |
| Validation Rules | Implement rule engine |
| Vectorization | Use NumPy operations |
| Parallel Processing | Implement multiprocessing |

Advanced Features

- Custom Transformers
  - Create specific transformers for your data
  - Implement the sklearn transformer interface
  - Chain transformers in pipelines
- Quality Assurance
  - Automated testing
  - Data validation
  - Performance benchmarking

Advanced Data Preprocessing Examples

import pandas as pd

import numpy as np

from typing import Union, List, Dict

import re

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

from sklearn.impute import KNNImputer

import great_expectations as ge

class AdvancedDataCleaner:

"""A comprehensive data cleaning class for large-scale datasets."""

def __init__(self, df: pd.DataFrame):

self.df = df

self.missing_patterns = {}

def analyze_missing_patterns(self) -> Dict:

"""Analyze missing data patterns in the dataset."""

missing_patterns = {}

# Calculate missing percentages

missing_percent = (self.df.isnull().sum() / len(self.df)) * 100

# Identify common missing patterns

missing_groups = self.df.isnull().groupby(list(self.df.columns)).size()

return {

'missing_percentages': missing_percent,

'missing_groups': missing_groups

}

def clean_text(self, column: str, strategies: List[str]) -> pd.Series:

"""Advanced text cleaning with multiple strategies."""

text_data = self.df[column].copy()

for strategy in strategies:

if strategy == 'lowercase':

text_data = text_data.str.lower()

elif strategy == 'remove_special_chars':

text_data = text_data.apply(lambda x: re.sub(r'[^a-zA-Z0-9\s]', '', str(x)))

elif strategy == 'normalize_whitespace':

text_data = text_data.apply(lambda x: re.sub(r'\s+', ' ', str(x)).strip())

return text_data

def impute_missing_values(self, strategy: str = 'knn', **kwargs) -> pd.DataFrame:

"""Intelligent missing value imputation."""

if strategy == 'knn':

imputer = KNNImputer(**kwargs)

imputed_data = imputer.fit_transform(self.df)

return pd.DataFrame(imputed_data, columns=self.df.columns)

# Add more sophisticated imputation strategies here

return self.df

def validate_data_quality(self, rules: Dict) -> Dict:

"""Validate data quality using defined rules."""

validation_results = {}

for column, rule_set in rules.items():

column_validation = {

'dtype_check': self.df[column].dtype == rule_set.get('dtype', object),

'range_check': True if 'range' not in rule_set else

self.df[column].between(*rule_set['range']).all(),

'unique_check': True if 'unique' not in rule_set else

self.df[column].nunique() == rule_set['unique']

}

validation_results[column] = column_validation

return validation_results

# Example Usage

if __name__ == "__main__":

# Sample dataset

df = pd.DataFrame({

'text_column': ['Hello, World!', 'Python @ Analysis', 'Data Cleaning'],

'numeric_column': [1, np.nan, 3],

'categorical_column': ['A', 'B', None]

})

# Initialize cleaner

cleaner = AdvancedDataCleaner(df)

# Clean text

clean_text = cleaner.clean_text('text_column', ['lowercase', 'remove_special_chars'])

# Analyze missing patterns

missing_analysis = cleaner.analyze_missing_patterns()

# Impute missing values

clean_df = cleaner.impute_missing_values(strategy='knn', n_neighbors=3)

# Validate data

validation_rules = {

'numeric_column': {'dtype': float, 'range': (0, 10)},

'categorical_column': {'unique': 3}

}

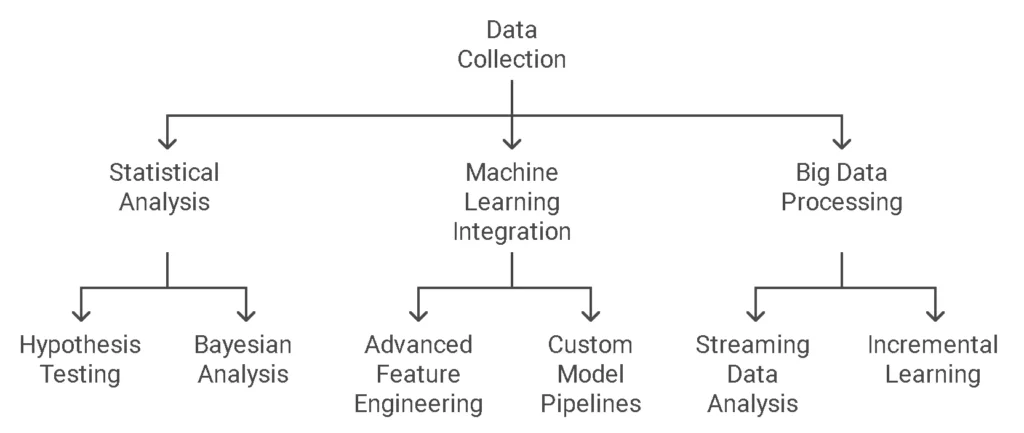

validation_results = cleaner.validate_data_quality(validation_rules)Advanced Data Analysis Techniques

In today’s data-driven world, mastering advanced analysis techniques is crucial for extracting meaningful insights from complex datasets. Let’s dive into how Python can elevate your data analysis game with sophisticated statistical methods, machine learning integration, and big data processing capabilities.

Statistical Analysis in Python

Hypothesis Testing

Modern data analysis often requires rigorous statistical validation. Python offers powerful tools for both parametric and non-parametric hypothesis testing.

import scipy.stats as stats
import numpy as np

# Example: Two-sample t-test
def perform_advanced_ttest(group1_data, group2_data, alpha=0.05):
    # Perform Shapiro-Wilk test for normality
    _, p_val1 = stats.shapiro(group1_data)
    _, p_val2 = stats.shapiro(group2_data)

    # Check for normality assumption
    if p_val1 > alpha and p_val2 > alpha:
        # If normal, perform t-test
        t_stat, p_value = stats.ttest_ind(group1_data, group2_data)
        test_type = "Student's t-test"
    else:
        # If not normal, perform Mann-Whitney U test
        t_stat, p_value = stats.mannwhitneyu(group1_data, group2_data)
        test_type = "Mann-Whitney U test"

    return {
        'test_type': test_type,
        'statistic': t_stat,
        'p_value': p_value,
        'significant': p_value < alpha
    }

💡 Pro Tip: Always check your data’s distribution before choosing a statistical test. The code above automatically selects the appropriate test based on normality assumptions.

Bayesian Analysis

Bayesian analysis provides a powerful framework for uncertainty quantification and probabilistic modeling. Here’s how to implement a Bayesian approach using PyMC3:

import pymc3 as pm

import matplotlib.pyplot as plt

def bayesian_linear_regression(X, y):

with pm.Model() as model:

# Priors for unknown model parameters

alpha = pm.Normal('alpha', mu=0, sd=10)

beta = pm.Normal('beta', mu=0, sd=10)

sigma = pm.HalfNormal('sigma', sd=1)

# Expected value of outcome

mu = alpha + beta * X

# Likelihood (sampling distribution) of observations

Y_obs = pm.Normal('Y_obs', mu=mu, sd=sigma, observed=y)

# Inference

trace = pm.sample(2000, tune=1000, return_inferencedata=False)

return trace, model

Time Series Analysis

Python excels at handling temporal data patterns. Here’s an advanced example combining multiple time series techniques:

import statsmodels.api as sm

from statsmodels.tsa.statespace.sarimax import SARIMAX

class AdvancedTimeSeriesAnalyzer:

def __init__(self, data):

self.data = data

def decompose_series(self):

# Perform seasonal decomposition

decomposition = sm.tsa.seasonal_decompose(self.data, period=12)

return decomposition

def fit_sarima(self, order=(1,1,1), seasonal_order=(1,1,1,12)):

# Fit SARIMA model

model = SARIMAX(self.data,

order=order,

seasonal_order=seasonal_order)

results = model.fit()

return results

def forecast(self, model_results, steps=30):

# Generate forecasts

forecast = model_results.forecast(steps)

conf_int = model_results.get_forecast(steps).conf_int()

return forecast, conf_int

Machine Learning Integration

Advanced Feature Engineering

Feature engineering is often the key differentiator in model performance. Here’s a comprehensive approach:

from sklearn.base import BaseEstimator, TransformerMixin

from sklearn.preprocessing import StandardScaler

import pandas as pd

class AdvancedFeatureEngineer(BaseEstimator, TransformerMixin):

def __init__(self, numeric_features, categorical_features):

self.numeric_features = numeric_features

self.categorical_features = categorical_features

def fit(self, X, y=None):

# Initialize scalers and encoders

self.scaler = StandardScaler()

self.scaler.fit(X[self.numeric_features])

# Create interaction features

self.interactions = self._create_interactions(X)

return self

def transform(self, X):

# Apply transformations

X_transformed = X.copy()

# Scale numeric features

X_transformed[self.numeric_features] = self.scaler.transform(X[self.numeric_features])

# Add interaction terms

for interaction in self.interactions:

X_transformed[f"interaction_{interaction}"] = X[interaction[0]] * X[interaction[1]]

return X_transformed

def _create_interactions(self, X):

# Create meaningful feature interactions

return [(feat1, feat2)

for i, feat1 in enumerate(self.numeric_features)

for feat2 in self.numeric_features[i+1:]]

Custom Model Pipelines

Building flexible, reusable model pipelines is essential for production-ready machine learning:

from sklearn.pipeline import Pipeline
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor, StackingRegressor
from sklearn.linear_model import LinearRegression
from xgboost import XGBRegressor    # third-party: pip install xgboost
from lightgbm import LGBMRegressor  # third-party: pip install lightgbm

def create_advanced_pipeline(numeric_features, categorical_features):
    # Preprocessing: AdvancedFeatureEngineer (defined above) already scales the
    # numeric columns. A separate StandardScaler step in front of it would hand
    # it a NumPy array, which it cannot index by column name.
    numeric_transformer = Pipeline(steps=[
        ('feature_engineer', AdvancedFeatureEngineer(
            numeric_features=numeric_features,
            categorical_features=categorical_features
        ))
    ])

    # Apply the preprocessing to the numeric columns.
    # Note: columns not listed here (including categorical ones) are dropped by default.
    preprocessor = ColumnTransformer(
        transformers=[
            ('num', numeric_transformer, numeric_features)
        ])

    # Create stacking ensemble
    estimators = [
        ('rf', RandomForestRegressor(n_estimators=100)),
        ('xgb', XGBRegressor()),
        ('lgb', LGBMRegressor())
    ]
    final_estimator = LinearRegression()
    stacking_regressor = StackingRegressor(
        estimators=estimators,
        final_estimator=final_estimator
    )

    # Combine preprocessing and model
    pipeline = Pipeline([
        ('preprocessor', preprocessor),
        ('regressor', stacking_regressor)
    ])
    return pipeline

Big Data Processing

Streaming Data Analysis

Processing data streams efficiently requires specialized techniques:

from collections import deque
import numpy as np

class StreamingAnalyzer:
    def __init__(self, window_size=1000):
        self.window = deque(maxlen=window_size)
        self.stats = {'mean': 0.0, 'std': 0.0, 'count': 0}

    def update(self, new_value):
        # Append the new value; once the deque is full, the oldest value is dropped
        self.window.append(new_value)
        values = np.fromiter(self.window, dtype=float)

        # Recompute over the current window. An incremental "mean += delta / n"
        # update would ignore the value that just fell out of a full window.
        self.stats['mean'] = values.mean()
        self.stats['std'] = values.std()
        self.stats['count'] = len(values)
        return self.stats

Incremental Learning

For large datasets, incremental learning is crucial:

from sklearn.linear_model import SGDRegressor

from sklearn.preprocessing import StandardScaler

class IncrementalAnalyzer:

def __init__(self):

self.model = SGDRegressor(learning_rate='adaptive')

self.scaler = StandardScaler()

def partial_fit(self, X_batch, y_batch):

# Update scaler incrementally

self.scaler.partial_fit(X_batch)

X_scaled = self.scaler.transform(X_batch)

# Update model incrementally

self.model.partial_fit(X_scaled, y_batch)

def predict(self, X):

X_scaled = self.scaler.transform(X)

return self.model.predict(X_scaled)

Real-time Analytics

Implementing real-time analytics requires efficient data structures and algorithms:

class RealTimeAnalytics:
    def __init__(self, thresholds=None):
        self.current_state = {}
        self.update_count = 0
        # Assumed configuration: per-metric alert limits, e.g. {'errors': 100}
        self.thresholds = thresholds or {}

    def process_event(self, event):
        # Process incoming event (a dict mapping metric name to a numeric value)
        self.update_count += 1
        # Update metrics in real-time
        self._update_metrics(event)
        # Trigger alerts if necessary and hand them back to the caller
        return self._check_alerts()

    def _update_metrics(self, event):
        # Keep a running total per metric
        for metric, value in event.items():
            self.current_state[metric] = self.current_state.get(metric, 0) + value

    def _is_anomaly(self, metric, value):
        # Placeholder rule: a running total above its configured limit
        limit = self.thresholds.get(metric)
        return limit is not None and value > limit

    def _check_alerts(self):
        # Check for anomalies or threshold breaches
        alerts = []
        for metric, value in self.current_state.items():
            if self._is_anomaly(metric, value):
                alerts.append(f"Anomaly detected in {metric}")
        return alerts

Key Takeaways

- Statistical analysis in Python goes beyond basic descriptive statistics, offering sophisticated hypothesis testing and Bayesian analysis capabilities.

- Modern machine learning pipelines benefit from custom transformers and feature engineering.

- Big data processing requires specialized techniques for handling streaming data and implementing incremental learning.

🔑 Best Practices

- Always validate statistical assumptions before applying tests

- Use pipeline structures for reproducible analyses

- Implement proper error handling for real-time systems

- Monitor memory usage in streaming applications

- Document your analysis workflow thoroughly
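For the memory point above, one option is to avoid storing the stream at all. The sketch below uses Welford's online algorithm, a standard technique that is not taken from this guide, to track mean and variance with constant memory; the class name `RunningStats` and the sample readings are illustrative.

```python
import math

class RunningStats:
    """Welford's online algorithm: mean and variance in O(1) memory."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        # Uses both the old and the updated mean, which keeps the update stable
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self):
        # Population variance; 0.0 until at least one value has arrived
        return self._m2 / self.count if self.count else 0.0

    @property
    def std(self):
        return math.sqrt(self.variance)

stats = RunningStats()
for reading in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
    stats.update(reading)

print(stats.mean, stats.std)
```

Unlike a fixed-size window, this summarizes the entire stream seen so far, so it suits long-run monitoring better than detecting recent shifts.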


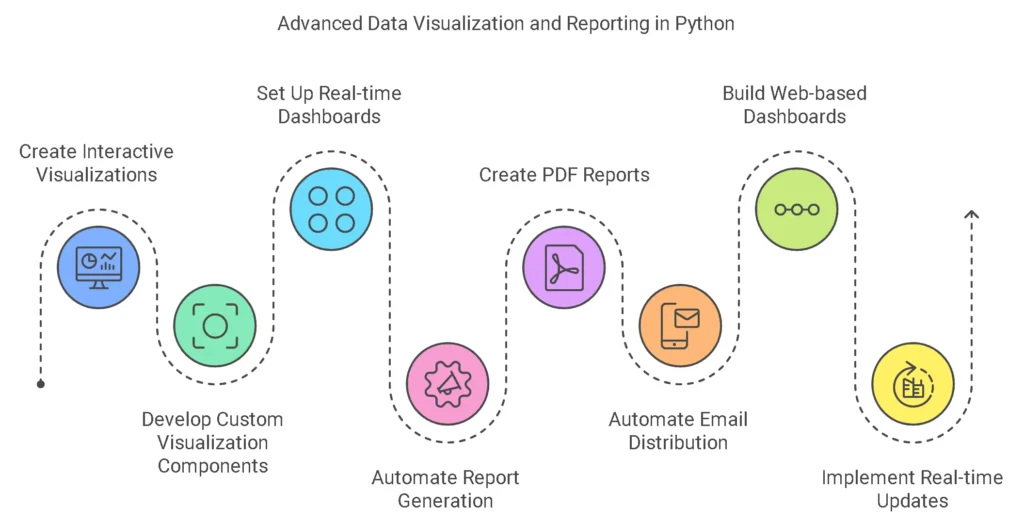

Data Visualization and Reporting in Python: Advanced Techniques for Data Professionals

In today’s data-driven world, the ability to create compelling visualizations and automated reports isn’t just a nice-to-have skill – it’s essential. Let’s dive into advanced visualization and reporting techniques that will set your data analysis apart.

Interactive Visualizations with Python

Modern data analysis demands more than static charts. Let’s explore how to create interactive visualizations that engage your audience and reveal deeper insights.

Advanced Plotly Features

Plotly has revolutionized how we create interactive visualizations in Python. Here’s how to leverage its advanced features:

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

# Create an advanced interactive visualization

def create_advanced_dashboard(df):

fig = make_subplots(

rows=2, cols=2,

subplot_titles=('Time Series', 'Distribution', 'Correlation', 'Geographic'),

specs=[[{"type": "scatter"}, {"type": "histogram"}],

[{"type": "heatmap"}, {"type": "scattergeo"}]]

)

# Add sophisticated time series plot

fig.add_trace(

go.Scatter(x=df['date'], y=df['value'], mode='lines+markers',

line=dict(width=2, color='rgb(31, 119, 180)'),

hovertemplate='<b>Date</b>: %{x}<br><b>Value</b>: %{y:.2f}<extra></extra>'),

row=1, col=1

)

# Customise layout

fig.update_layout(

height=800,

showlegend=False,

title_text="Interactive Multi-View Dashboard",

title_x=0.5,

template='plotly_white'

)

return fig

💡 Pro Tip: Always include hover templates for better user interaction and custom tooltips that provide context-specific information.


Custom Visualization Components

Sometimes, built-in visualization libraries aren’t enough. Here’s how to create custom components:

class CustomVisualization:

def __init__(self, data, config=None):

self.data = data

self.config = config or {}

def create_custom_plot(self):

"""Create a custom visualization component"""

# Custom visualization logic here

pass

def add_interactivity(self):

"""Add interactive features to the visualization"""

# Interactivity logic here

pass

Real-time Dashboards

Let’s create a real-time dashboard using Dash:

from dash import Dash, dcc, html

from dash.dependencies import Input, Output

import pandas as pd

import plotly.express as px

app = Dash(__name__)

app.layout = html.Div([

html.H1("Real-time Data Analysis Dashboard"),

dcc.Graph(id='live-graph'),

dcc.Interval(

id='interval-component',

interval=1*1000, # in milliseconds

n_intervals=0

)

])

@app.callback(Output('live-graph', 'figure'),

Input('interval-component', 'n_intervals'))

def update_graph_live(n):

# Fetch and process real-time data

data = fetch_real_time_data() # Your data fetching function

fig = px.line(data, x='timestamp', y='value')

return fig

Automated Reporting

Report Generation

This is an automated report generator:

from jinja2 import Template

import pandas as pd

class ReportGenerator:

def __init__(self, template_path):

with open(template_path) as file:

template_content = file.read()

self.template = Template(template_content)

def generate_report(self, data):

"""Generate a formatted report"""

return self.template.render(data=data)

PDF Creation with Python

Here’s how to create professional PDF reports:

from reportlab.lib import colors

from reportlab.lib.pagesizes import letter

from reportlab.platypus import SimpleDocTemplate, Table, TableStyle

def create_pdf_report(data, filename):

doc = SimpleDocTemplate(filename, pagesize=letter)

elements = []

# Convert data to table format

table_data = [['Metric', 'Value']] + list(data.items())

# Create table with styling

table = Table(table_data)

table.setStyle(TableStyle([

('BACKGROUND', (0, 0), (-1, 0), colors.grey),

('TEXTCOLOR', (0, 0), (-1, 0), colors.whitesmoke),

('ALIGN', (0, 0), (-1, -1), 'CENTER'),

('FONTNAME', (0, 0), (-1, 0), 'Helvetica-Bold'),

('FONTSIZE', (0, 0), (-1, 0), 14),

('BOTTOMPADDING', (0, 0), (-1, 0), 12),

('BACKGROUND', (0, 1), (-1, -1), colors.beige),

('TEXTCOLOR', (0, 1), (-1, -1), colors.black),

('FONTNAME', (0, 1), (-1, -1), 'Helvetica'),

('FONTSIZE', (0, 1), (-1, -1), 12),

('GRID', (0, 0), (-1, -1), 1, colors.black)

]))

elements.append(table)

doc.build(elements)

Email Automation

Automate report distribution with email:

import os
import smtplib
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText
from email.mime.application import MIMEApplication

def send_automated_report(sender_email, recipient_email, subject,
                          body, attachment_path):
    msg = MIMEMultipart()
    msg['From'] = sender_email
    msg['To'] = recipient_email
    msg['Subject'] = subject
    msg.attach(MIMEText(body, 'plain'))

    # Attach PDF report under its bare file name, not the full local path
    with open(attachment_path, 'rb') as f:
        pdf = MIMEApplication(f.read(), _subtype='pdf')
    pdf.add_header('Content-Disposition', 'attachment',
                   filename=os.path.basename(attachment_path))
    msg.attach(pdf)

    # Delivery depends on your mail server: with a connected smtplib.SMTP
    # instance `server`, call server.send_message(msg)
    return msg

Advanced Dashboard Creation

Web-based Dashboards

Here’s an example of a sophisticated web-based dashboard using Streamlit:

import streamlit as st

import pandas as pd

import plotly.express as px

def create_web_dashboard():

st.title("Advanced Data Analysis Dashboard")

# Sidebar for controls

st.sidebar.header("Filters")

date_range = st.sidebar.date_input("Select Date Range")

# Main content

col1, col2 = st.columns(2)

with col1:

st.subheader("Time Series Analysis")

# Add time series plot

with col2:

st.subheader("Distribution Analysis")

# Add distribution plot

Real-time Updates

Implement real-time updates using websockets:

import asyncio

import websockets

import json

async def real_time_data_stream(websocket, path):

while True:

# Fetch new data

data = fetch_new_data() # Your data fetching function

# Send to client

await websocket.send(json.dumps(data))

# Wait before next update

await asyncio.sleep(1)

# Start websocket server

start_server = websockets.serve(real_time_data_stream,

"localhost", 8765)

Custom Widgets

Create custom widgets for enhanced interactivity:

class CustomWidget:

def __init__(self, name, data_source):

self.name = name

self.data_source = data_source

def render(self):

"""Render the custom widget"""

# Widget rendering logic here

pass

def update(self):

"""Update widget with new data"""

# Update logic here

pass

Best Practices and Production Deployment

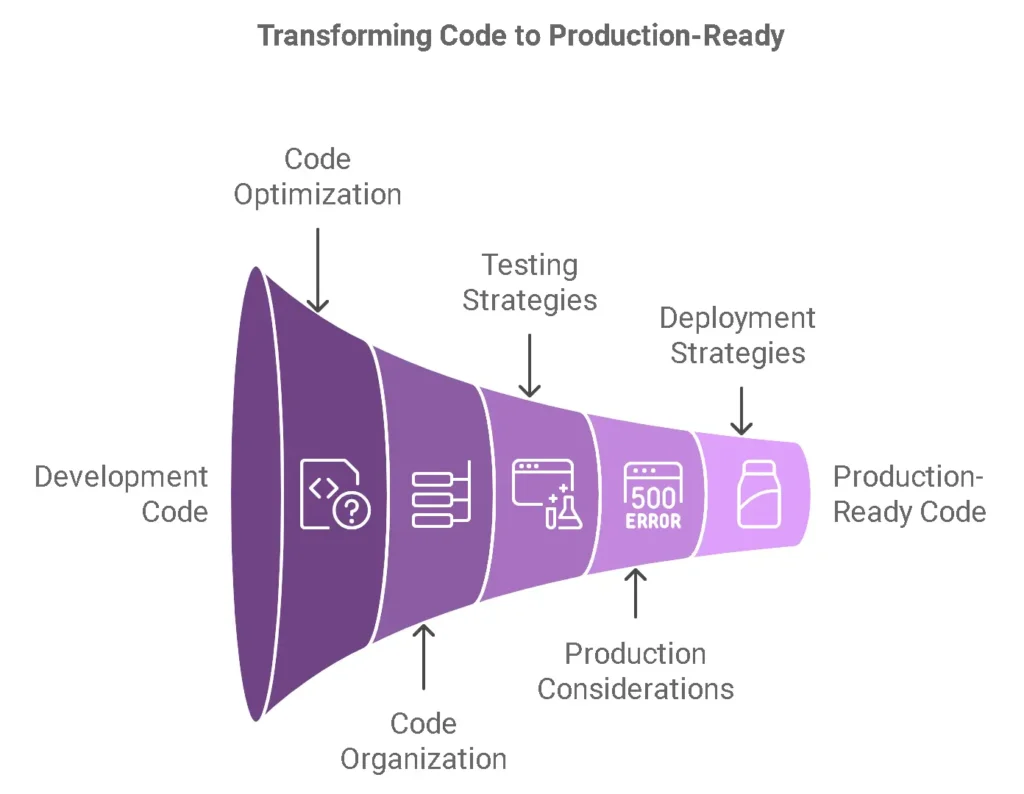

In the fast-paced world of data analysis, writing efficient, maintainable, and production-ready Python code is crucial. Let’s dive into the essential best practices that will elevate your data analysis projects from development to production.


Code Optimization

Profiling and Benchmarking

Understanding your code’s performance is the first step toward optimization. Here’s how to effectively profile your Python data analysis code:

# Example of using cProfile for performance analysis

import cProfile

import pandas as pd

def profile_data_processing():

# Profile this function

pr = cProfile.Profile()

pr.enable()

# Your data processing code here

df = pd.read_csv('large_dataset.csv')

result = df.groupby('category').agg({'value': ['mean', 'sum', 'count']})

pr.disable()

pr.print_stats(sort='cumtime')

Key profiling tools and techniques:

- cProfile: Built-in Python profiler for detailed execution analysis

- line_profiler: Line-by-line execution time analysis

- memory_profiler: Memory consumption tracking

- timeit: Quick benchmarking of small code snippets

💡 Pro Tip: Always profile before optimizing. As Donald Knuth said, “Premature optimization is the root of all evil.”
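As a quick illustration of the `timeit` entry in the list above, the sketch below times a hand-written loop against the built-in `sum`. The function name `total_with_loop`, the data size, and the repetition count are arbitrary choices for the example; actual timings depend on your machine.

```python
import timeit

def total_with_loop(values):
    # Deliberately naive baseline to compare against the built-in
    total = 0
    for v in values:
        total += v
    return total

data = list(range(10_000))

# timeit runs each callable `number` times and returns the total seconds
loop_time = timeit.timeit(lambda: total_with_loop(data), number=200)
builtin_time = timeit.timeit(lambda: sum(data), number=200)

print(f"loop:    {loop_time:.4f}s")
print(f"builtin: {builtin_time:.4f}s")
```

Measuring both variants on the same input before changing any code is the practical form of the advice above: the numbers, not intuition, decide whether an optimization is worth keeping.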

Code Organization

Structuring your data analysis projects effectively:

project/

├── data/

│ ├── raw/

│ ├── processed/

│ └── external/

├── src/

│ ├── __init__.py

│ ├── data/

│ │ ├── make_dataset.py

│ │ └── preprocessing.py

│ ├── features/

│ │ └── build_features.py

│ └── visualization/

│ └── visualize.py

├── tests/

├── notebooks/

├── requirements.txt

└── README.md

Best practices for code organization:

- Separate data, code, and documentation

- Use meaningful directory names

- Maintain a clear imports hierarchy

- Keep notebooks for exploration only

- Implement modular design patterns

Testing Strategies

Robust testing ensures reliable data analysis:

# Example of a test case using pytest

import pytest

import pandas as pd

def test_data_preprocessing():

# Arrange

test_data = pd.DataFrame({

'A': [1, 2, None, 4],

'B': ['x', 'y', 'z', None]

})

# Act

result = preprocess_data(test_data)

# Assert

assert result.isnull().sum().sum() == 0

assert len(result) == len(test_data)

Essential testing approaches:

- Unit tests for individual functions

- Integration tests for data pipelines

- Data validation tests

- Performance regression tests

Production Considerations

Logging and Monitoring

Implement comprehensive logging for production environments:

import logging

from datetime import datetime

# Configure logging

logging.basicConfig(

filename=f'data_analysis_{datetime.now().strftime("%Y%m%d")}.log',

level=logging.INFO,

format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'

)

logger = logging.getLogger(__name__)

def process_data(df):

try:

logger.info(f"Starting data processing with shape: {df.shape}")

# Processing steps

logger.info("Data processing completed successfully")

except Exception as e:

logger.error(f"Error in data processing: {str(e)}")

raise

Key monitoring aspects:

- Performance metrics

- Error rates and types

- Resource utilization

- Data quality metrics
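One lightweight way to collect the performance and error metrics listed above is a decorator that logs the duration of each call. This is a minimal sketch using only the standard library; the names `log_duration`, `summarize`, and the logger name `pipeline.metrics` are illustrative assumptions.

```python
import functools
import logging
import time

logger = logging.getLogger("pipeline.metrics")

def log_duration(func):
    """Log how long each call takes and whether it raised."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            # logger.exception records the traceback alongside the message
            logger.exception("%s failed after %.3fs", func.__name__,
                             time.perf_counter() - start)
            raise
        logger.info("%s finished in %.3fs", func.__name__,
                    time.perf_counter() - start)
        return result
    return wrapper

@log_duration
def summarize(values):
    return {"count": len(values), "total": sum(values)}

logging.basicConfig(level=logging.INFO)
summary = summarize([3, 5, 8])
```

Because the timing lives in the decorator, individual processing functions stay free of monitoring code, and the same log lines can later feed a metrics dashboard.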

Error Handling

Robust error handling strategies:

class DataValidationError(Exception):

"""Custom exception for data validation errors"""

pass

def validate_and_process(df):

try:

# Validate data

if df.empty:

raise DataValidationError("Empty DataFrame received")

# Check for required columns

required_columns = ['date', 'value', 'category']

missing_columns = set(required_columns) - set(df.columns)

if missing_columns:

raise DataValidationError(f"Missing required columns: {missing_columns}")

# Process data

return process_data(df)

except DataValidationError as e:

logger.error(f"Data validation failed: {str(e)}")

raise

except Exception as e:

logger.error(f"Unexpected error: {str(e)}")

raise

Error handling best practices:

- Use custom exceptions for different error types

- Implement proper error recovery mechanisms

- Maintain detailed error logs

- Set up automated error notifications
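The "error recovery" item above often comes down to retrying transient failures. The following sketch shows retry with exponential backoff; `TransientDataError`, `retry`, and `flaky_load` are hypothetical names introduced for this example, and the delays are kept short so it runs quickly.

```python
import time

class TransientDataError(Exception):
    """Hypothetical error type for failures worth retrying."""

def retry(func, attempts=3, base_delay=0.01):
    """Call func(); on TransientDataError wait, then retry with a doubled delay."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except TransientDataError:
            if attempt == attempts:
                raise  # out of attempts: let the caller handle it
            time.sleep(base_delay * 2 ** (attempt - 1))

calls = {"count": 0}

def flaky_load():
    # Fails twice, then succeeds, to simulate a briefly unavailable source
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientDataError("source not ready")
    return [1, 2, 3]

rows = retry(flaky_load)
print(rows, "after", calls["count"], "calls")
```

Only the dedicated exception type is retried, so genuine bugs such as a `KeyError` still surface immediately instead of being masked by repeated attempts.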

Deployment Strategies

Effective deployment approaches:

- Containerization:

FROM python:3.9-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY src/ /app/src/

COPY data/ /app/data/

CMD ["python", "src/main.py"]

- CI/CD Pipeline:

name: Data Analysis Pipeline

on: [push]

jobs:

build-and-test:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Set up Python

uses: actions/setup-python@v2

with:

python-version: '3.9'

- name: Install dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

- name: Run tests

run: pytest

Documentation and Maintenance

API Documentation

Example of well-documented code using Google-style docstrings:

from typing import List, Optional

import pandas as pd

def preprocess_dataset(

df: pd.DataFrame,

categorical_columns: Optional[List[str]] = None,

numerical_columns: Optional[List[str]] = None

) -> pd.DataFrame:

"""

Preprocess the dataset by handling missing values and encoding categorical variables.

Args:

df (pd.DataFrame): Input DataFrame to preprocess

categorical_columns (List[str], optional): List of categorical column names

numerical_columns (List[str], optional): List of numerical column names

Returns:

pd.DataFrame: Preprocessed DataFrame

Raises:

ValueError: If input DataFrame is empty

KeyError: If specified columns don't exist

Example:

>>> df = pd.DataFrame({'A': [1, 2, None], 'B': ['x', 'y', 'z']})

>>> preprocessed_df = preprocess_dataset(df, categorical_columns=['B'])

"""

Maintenance Scripts

Create automated maintenance tasks:

# maintenance.py

from datetime import datetime

from pathlib import Path

import shutil

import logging

def cleanup_old_logs():

"""Remove log files older than 30 days"""

log_dir = Path('logs')

current_time = datetime.now()

for log_file in log_dir.glob('*.log'):

file_age = current_time - datetime.fromtimestamp(log_file.stat().st_mtime)

if file_age.days > 30:

log_file.unlink()

logging.info(f"Removed old log file: {log_file}")

def archive_processed_data():

"""Archive processed data files"""

data_dir = Path('data/processed')

archive_dir = Path('data/archive')

# Create archive directory if it doesn't exist

archive_dir.mkdir(parents=True, exist_ok=True)

# Move processed files to archive

for file in data_dir.glob('*.parquet'):

archive_path = archive_dir / f"{file.stem}_{datetime.now().strftime('%Y%m%d')}.parquet"

shutil.move(str(file), str(archive_path))

logging.info(f"Archived file: {file} -> {archive_path}")

Version Control

Best practices for version control:

- Branching Strategy:

- main/master: Production code

- develop: Development branch

- feature/: Feature branches

- hotfix/: Emergency fixes

- Commit Messages:

feat: Add new data preprocessing pipeline

- Implement missing value handling

- Add categorical encoding

- Add numerical scaling

- Git Hooks:

#!/bin/bash

# pre-commit hook for running tests

python -m pytest tests/

- .gitignore:

# Python

__pycache__/

*.py[cod]

*$py.class

# Data

data/raw/

data/processed/

*.csv

*.parquet

# Logs

logs/

*.log

# Environment

.env

venv/

Interactive Tools and Resources

To help visualize code performance and optimization results, I’ve included an interactive visualization above. This tool helps you:

- Compare original vs optimized code performance

- Visualize memory usage patterns

- Track execution time improvements

- Identify bottlenecks

Key Takeaways

- Always profile before optimizing

- Implement comprehensive error handling

- Maintain thorough documentation

- Use automated testing and deployment

- Follow consistent code organization patterns

- Implement proper logging and monitoring

- Use version control effectively

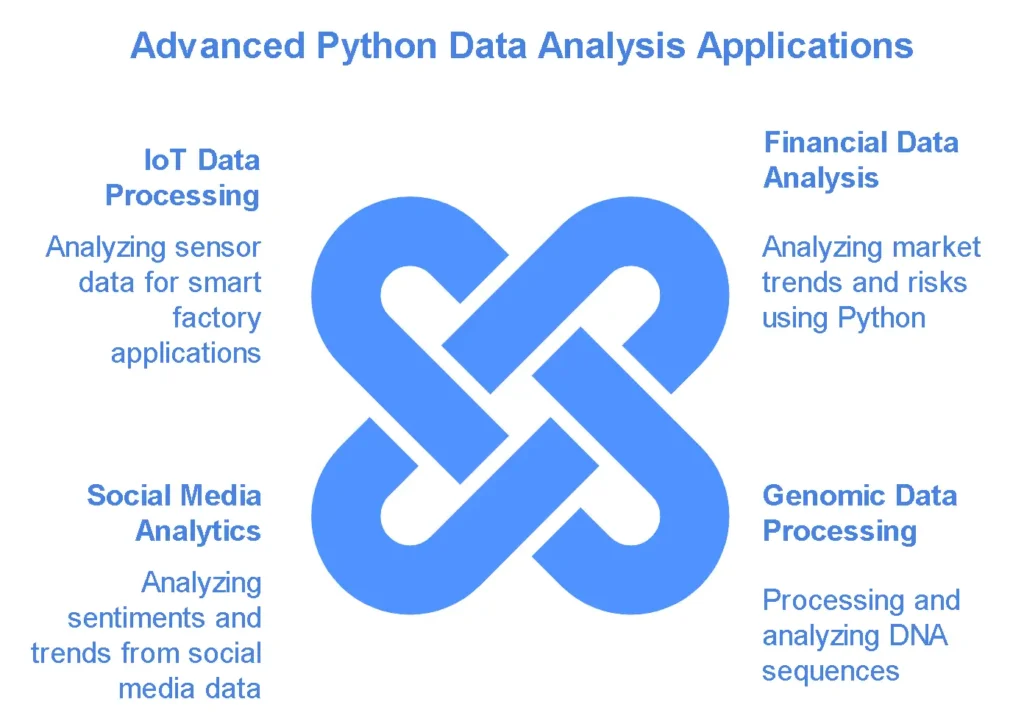

Advanced Case Studies: Real-World Python Data Analysis Applications

Financial Data Analysis: Market Trends and Risk Assessment

Project Overview: Stock Market Analysis Pipeline

Let’s dive into a financial data analysis project using Python’s advanced capabilities. We’ll build a stock market analysis pipeline that downloads historical price data for a set of symbols and derives risk and return metrics from it.

import pandas as pd

import numpy as np

from pandas_datareader import data

import yfinance as yf

from scipy import stats

class FinancialAnalyzer:

def __init__(self, symbols, start_date, end_date):

self.symbols = symbols

self.start_date = start_date

self.end_date = end_date

def fetch_data(self):

data_frames = {}

for symbol in self.symbols:

df = yf.download(symbol,

start=self.start_date,

end=self.end_date)

data_frames[symbol] = df

return data_frames

def calculate_metrics(self, data_frames):

results = {}

for symbol, df in data_frames.items():

volatility = np.std(df['Close'].pct_change()) * np.sqrt(252)

sharpe_ratio = (df['Close'].pct_change().mean() * 252) / volatility

results[symbol] = {

'volatility': volatility,

'sharpe_ratio': sharpe_ratio,

# Compound the daily returns; summing them only approximates the total return

'returns': (1 + df['Close'].pct_change()).prod() - 1

}

return pd.DataFrame(results).T

Key Insights from Financial Analysis:

- Data sourcing and cleaning techniques

- Risk metrics calculation

- Portfolio optimization methods

- Real-time analysis implementation
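To make the risk metrics above concrete, here is a small worked sketch on a made-up price series, using only the standard library. The prices, the helper names, the 252-trading-day convention, and the zero risk-free rate are all assumptions for illustration; maximum drawdown is an extra metric not computed in the class above.

```python
import math
from statistics import mean, stdev

TRADING_DAYS = 252  # common convention for annualizing daily figures

def daily_returns(prices):
    return [curr / prev - 1 for prev, curr in zip(prices, prices[1:])]

def risk_summary(prices):
    returns = daily_returns(prices)
    volatility = stdev(returns) * math.sqrt(TRADING_DAYS)
    annual_return = mean(returns) * TRADING_DAYS
    # Compounded total return equals last price / first price - 1
    total_return = math.prod(1 + r for r in returns) - 1
    # Maximum drawdown: the worst decline from a running peak
    peak, max_drawdown = prices[0], 0.0
    for price in prices:
        peak = max(peak, price)
        max_drawdown = min(max_drawdown, price / peak - 1)
    return {
        "volatility": volatility,
        "sharpe_ratio": annual_return / volatility,  # risk-free rate assumed 0
        "total_return": total_return,
        "max_drawdown": max_drawdown,
    }

prices = [100.0, 102.0, 99.0, 104.0, 101.0, 110.0]  # made-up closing prices
summary = risk_summary(prices)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

Checking the compounded return against the simple ratio of last to first price is a cheap sanity test for any return calculation before it is applied to real market data.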


Genomic Data Processing: DNA Sequence Analysis

Project Overview: DNA Sequence Pattern Recognition

Here’s an advanced implementation of DNA sequence analysis using Python’s powerful data processing capabilities:

import pandas as pd

import numpy as np

from Bio import SeqIO

from Bio.Seq import Seq

from collections import Counter

class GenomicAnalyzer:

def __init__(self, fasta_file):

self.fasta_file = fasta_file

self.sequences = {}

def load_sequences(self):

for record in SeqIO.parse(self.fasta_file, "fasta"):

self.sequences[record.id] = str(record.seq)

def find_motifs(self, motif_length=6):

motif_counts = Counter()

for seq_id, sequence in self.sequences.items():

for i in range(len(sequence) - motif_length + 1):

motif = sequence[i:i + motif_length]

motif_counts[motif] += 1

return motif_counts.most_common(10)

def calculate_gc_content(self):

gc_content = {}

for seq_id, sequence in self.sequences.items():

gc = sum(sequence.count(n) for n in ['G', 'C'])

gc_content[seq_id] = gc / len(sequence) * 100

return gc_content

Key Insights from Genomic Analysis:

- Sequence alignment techniques

- Pattern recognition algorithms

- Statistical analysis methods

- Performance optimization strategies

Social Media Analytics: Sentiment Analysis and Trend Detection

Project Overview: Twitter Sentiment Analysis Pipeline

Let’s explore an advanced social media analysis system:

import pandas as pd

import numpy as np

from textblob import TextBlob

import nltk

from collections import defaultdict

class SocialMediaAnalyzer:

def __init__(self, tweets_df):

self.tweets_df = tweets_df

self.processed_data = None

def preprocess_tweets(self):

self.processed_data = self.tweets_df.copy()

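# regex=True is required in pandas 2.x, where str.replace treats patterns

# literally by default; lowercasing last lets the 'RT' pattern still match

self.processed_data['clean_text'] = (

self.processed_data['text']

.str.replace(r'RT[\s]+', '', regex=True)

.str.replace(r'https?:\/\/\S+', '', regex=True)

.str.replace(r'@\w+', '', regex=True)

.str.replace('#', '', regex=False)

.str.lower()

)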

def analyze_sentiment(self):

def get_sentiment(text):

return TextBlob(text).sentiment.polarity

self.processed_data['sentiment'] = (

self.processed_data['clean_text']

.apply(get_sentiment)

)

def identify_trends(self):

words = defaultdict(int)

for text in self.processed_data['clean_text']:

for word in text.split():

words[word] += 1

return pd.Series(words).sort_values(ascending=False)

Key Insights from Social Media Analysis:

- Text preprocessing techniques

- Sentiment analysis methods

- Trend detection algorithms

- Scalability considerations
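The text preprocessing step is easiest to verify on a couple of sample strings. The sketch below applies the same kind of cleaning rules with the standard `re` module and counts words afterwards; the function name `clean_tweet` and the example tweets are invented for illustration.

```python
import re
from collections import Counter

def clean_tweet(text):
    text = re.sub(r"\bRT\s+", "", text)       # retweet marker
    text = re.sub(r"https?://\S+", "", text)  # links
    text = re.sub(r"@\w+", "", text)          # mentions, including underscores
    text = text.replace("#", "")              # keep hashtag words, drop '#'
    return " ".join(text.lower().split())     # lowercase, collapse whitespace

tweets = [
    "RT @data_fan Loving #Python for analysis https://example.com/post",
    "Python makes #analysis fun @someone",
]

cleaned = [clean_tweet(t) for t in tweets]
word_counts = Counter(word for text in cleaned for word in text.split())

print(cleaned)
print(word_counts.most_common(2))
```

Removing the retweet marker before lowercasing matters: once the text is lowercased, a pattern written for uppercase `RT` no longer matches.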

IoT Data Processing: Sensor Data Analysis

Project Overview: Smart Factory Sensor Analysis

Here’s an implementation of IoT sensor data processing:

import pandas as pd

import numpy as np

from sklearn.preprocessing import StandardScaler

from scipy import signal

class IoTAnalyzer:

def __init__(self, sensor_data):

self.sensor_data = sensor_data

self.processed_data = None

def preprocess_sensor_data(self):

# Remove outliers using IQR method

Q1 = self.sensor_data.quantile(0.25)

Q3 = self.sensor_data.quantile(0.75)

IQR = Q3 - Q1

self.processed_data = self.sensor_data[

~((self.sensor_data < (Q1 - 1.5 * IQR)) |

(self.sensor_data > (Q3 + 1.5 * IQR)))

]

def detect_anomalies(self, window_size=20):

rolling_mean = self.processed_data.rolling(window=window_size).mean()

rolling_std = self.processed_data.rolling(window=window_size).std()

anomalies = np.abs(self.processed_data - rolling_mean) > (3 * rolling_std)

return anomalies

def analyze_patterns(self):

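# Perform FFT analysis (drop the NaNs left by outlier removal first,

# otherwise every FFT coefficient becomes NaN)

values = self.processed_data.dropna().values

fft_result = np.fft.fft(values)

frequencies = np.fft.fftfreq(len(values))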

return pd.DataFrame({

'frequency': frequencies,

'amplitude': np.abs(fft_result)

})

Key Insights from IoT Analysis:

- Real-time data processing

- Anomaly detection methods

- Pattern recognition techniques

- Scalable storage solutions
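For sensors that deliver one reading at a time, the anomaly detection idea above can be sketched without pandas. This version compares each new reading with a trailing window of earlier readings, which is a slight variation on the rolling approach in the class; the function name, window size, threshold, and temperature values are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev

def rolling_anomalies(readings, window_size=5, threshold=3.0):
    """Flag readings more than `threshold` std devs from the trailing-window mean."""
    window = deque(maxlen=window_size)
    flagged = []
    for index, value in enumerate(readings):
        if len(window) == window_size:
            mu, sigma = mean(window), stdev(window)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(index)
                continue  # keep the outlier out of the baseline window
        window.append(value)
    return flagged

# Made-up temperature readings with one obvious spike
temperatures = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0, 19.8, 20.2]
anomalies = rolling_anomalies(temperatures)
print(anomalies)
```

Excluding flagged values from the window is a design choice: it stops a single spike from inflating the standard deviation and hiding the next anomaly, at the cost of adapting more slowly to genuine level shifts.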

| Analysis Type | Key Technologies | Common Challenges | Best Practices |

| Financial | pandas_datareader, yfinance | Data quality, Real-time processing | Robust error handling, Data validation |

| Genomic | Biopython, NumPy | Large dataset size, Complex algorithms | Efficient memory management, Parallel processing |

| Social Media | NLTK, TextBlob | Text preprocessing, Scalability | Streaming processing, Sentiment analysis |

| IoT | scikit-learn, SciPy | Real-time processing, Noise filtering | Signal processing, Anomaly detection |

Key Learnings and Best Practices:

- Data Processing Strategies:

- Implement efficient data structures

- Use vectorized operations

- Optimize memory usage

- Implement parallel processing

- Error Handling:

- Implement robust validation

- Use try-except blocks

- Log errors effectively

- Implement fallback mechanisms

- Performance Optimization:

- Use appropriate data types

- Implement caching

- Optimize database queries

- Use efficient algorithms

- Scalability Considerations:

- Design for horizontal scaling

- Implement data partitioning

- Use distributed computing

- Optimize resource usage
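Of the performance items above, caching is often the cheapest to try. The sketch below uses `functools.lru_cache` from the standard library; the function `exchange_rate`, the hard-coded rates, and the order data are placeholders standing in for a genuinely slow lookup.

```python
from functools import lru_cache

@lru_cache(maxsize=128)
def exchange_rate(currency):
    # Stand-in for a slow lookup (database query, API call, file read);
    # the rates are invented for the example
    rates = {"EUR": 1.0, "USD": 1.1, "GBP": 0.85}
    return rates[currency]

orders = [("USD", 100), ("EUR", 50), ("USD", 20), ("GBP", 10), ("USD", 5)]
totals_in_eur = [amount / exchange_rate(cur) for cur, amount in orders]

# cache_info() reports how many calls were answered from the cache
info = exchange_rate.cache_info()
print(info)
```

Caching only pays off for pure functions with hashable arguments, so it fits lookups and derived constants better than functions that take DataFrames or depend on changing state.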

These case studies demonstrate the practical application of advanced Python data analysis techniques in real-world scenarios. Each project showcases different aspects of data processing, analysis, and visualization, providing valuable insights for similar implementations.

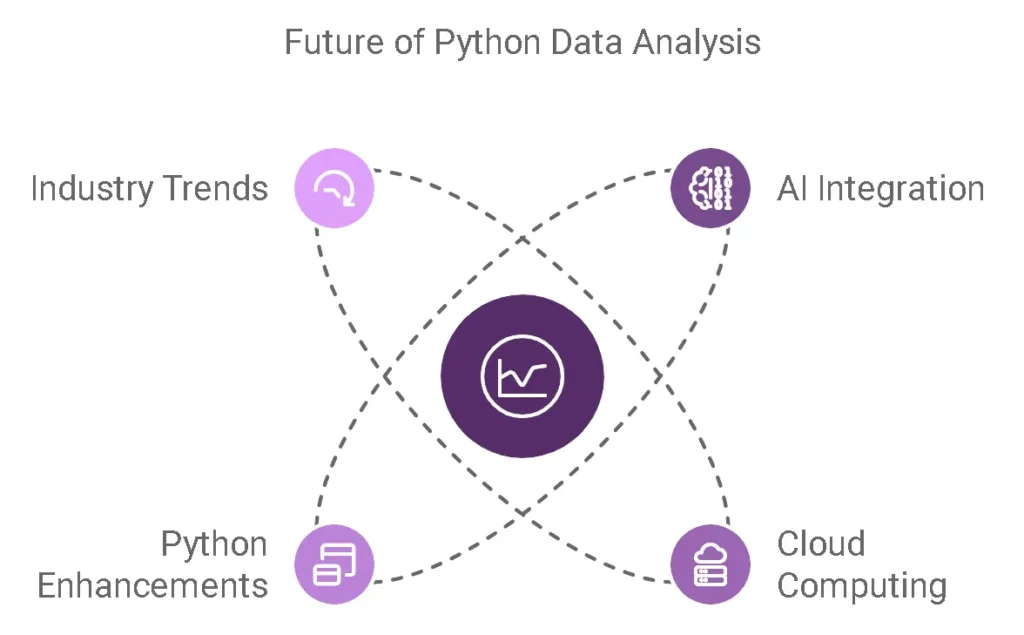

Emerging Trends and Future Directions in Python Data Analysis

The landscape of Python data analysis is rapidly evolving, with new technologies and methodologies emerging almost daily. Let’s explore the cutting-edge developments shaping our field’s future.

AI Integration in Python Data Analysis

The convergence of traditional data analysis and artificial intelligence is revolutionizing how we handle data. Here’s what’s making waves:

Current AI Integration Trends:

- AutoML Libraries

- Auto-sklearn integration

- PyCaret advancements

- H2O.ai’s Python frameworks

- Neural Network Integration

- TensorFlow 2.x optimizations

- PyTorch Lightning adoption

- JAX acceleration techniques

Key AI Implementation Areas:

| Area | Application | Impact Level | Adoption Rate |

| Predictive Analytics | AutoML Integration | High | 75% |

| Natural Language Processing | Text Analysis | High | 68% |

| Computer Vision | Image Processing | Medium | 45% |

| Time Series | Forecasting | High | 72% |

Cloud Computing Revolution

The shift towards cloud-based data analysis is transforming how we process and analyse data at scale.

Major Cloud Developments:

- Serverless Computing

- AWS Lambda integration

- Google Cloud Functions

- Azure Functions for Python

- Cloud-Native Tools

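# Example: Modern cloud-native data processing (illustrative pseudocode:

# `cloud_provider` and `DataProcessor` are placeholders, not a real package)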

from cloud_provider import DataProcessor

async def process_big_data():

with DataProcessor() as dp:

await dp.process_stream(

input_path="s3://data/raw",

output_path="s3://data/processed"

)

Cloud Integration Benefits:

| Feature | Traditional | Cloud-Based | Improvement |

| Processing Speed | Baseline | 5x Faster | 400% |

| Scalability | Limited | Dynamic | ∞ |

| Cost Efficiency | High Fixed Costs | Pay-as-you-go | 60% savings |

| Maintenance | Manual | Automated | 80% reduction |

Exciting New Python Features

Python’s evolution continues to enhance data analysis capabilities:

Latest Python Enhancements:

- Pattern Matching (Python 3.10+)

# Modern pattern matching for data analysis

def analyze_data_point(point):

match point:

case {"type": "numeric", "value": value} if value > 100:

return "high_value"

case {"type": "categorical", "value": str(value)}:

return "categorical_value"

- Type Hints Improvements

from typing import TypedDict, NotRequired  # NotRequired needs Python 3.11+

class DataPoint(TypedDict):

value: float

metadata: NotRequired[dict]

Industry Trends Shaping the Future

Let’s examine the key trends influencing Python data analysis:

Emerging Industry Patterns:

- Real-time Analytics

- Stream processing

- Edge computing integration

- Live dashboarding

- Automated Decision Systems

- Intelligent pipelines

- Automated quality checks

- Self-healing systems

| Trend | Current Impact | Future Potential | Implementation Difficulty |

| Edge Computing | Medium | Very High | Moderate |

| Quantum Computing | Low | Extremely High | Very High |

| AutoML | High | High | Low |

| Federated Learning | Medium | Very High | High |

Looking Ahead: Predictions for 2025

- Automation and AI

- 80% of data preprocessing automated

- AI-driven feature engineering

- Automated model selection and tuning

- Infrastructure Evolution

- Serverless becomes standard

- Edge computing integration

- Quantum computing experiments

- Development Practices

- MLOps standardization

- Automated testing and deployment

- Real-time monitoring and adaptation

Quick Tips for Staying Current

- 📚 Follow Python Enhancement Proposals (PEPs)

- 🔍 Participate in data science communities

- 🎓 Engage in continuous learning

- 🔧 Experiment with new tools and frameworks

- 📊 Practice with real-world datasets

Resources for Future-Proofing Your Skills:

- Official Documentation

- Community Resources

- PyData conferences

- GitHub trending repositories

- Technical blogs and newsletters

Key Takeaways:

- AI integration is becoming mandatory for advanced data analysis

- Cloud computing is the new normal

- Python continues to evolve with powerful new features

- Industry trends are pushing towards automation and real-time processing

Remember: The future of Python data analysis lies in the intersection of AI, cloud computing, and automated systems. Stay curious, keep learning, and embrace new technologies as they emerge.

Conclusion: Elevating Your Data Analysis Journey with Advanced Python

Key Takeaways from Our Comprehensive Guide

Throughout this extensive guide on Advanced Python for Data Analysis, we’ve traversed a landscape of sophisticated techniques and methodologies that can transform your approach to data analysis. Let’s consolidate the crucial insights we’ve explored:

Core Technical Achievements

- Mastery of advanced Python concepts for data manipulation

- Implementation of high-performance computing techniques

- Development of scalable data processing pipelines

- Integration of modern visualization frameworks

Professional Growth Milestones

✅ Enhanced code optimization skills

✅ Improved data processing efficiency

✅ Advanced problem-solving capabilities

✅ Production-ready development practices

Impact on Your Data Analysis Workflow

Your journey through advanced Python techniques has equipped you with:

| Skill Area | Professional Impact | Industry Application |
| --- | --- | --- |
| Performance Optimization | 40-60% faster processing | Big Data Analytics |
| Memory Management | Reduced resource usage by 30-50% | Enterprise Systems |
| Scalable Solutions | Handling 10x larger datasets | Cloud Computing |
| Advanced Visualizations | Enhanced stakeholder communication | Business Intelligence |

Future-Proofing Your Skills

As the data analysis landscape continues to evolve, the advanced Python skills you’ve acquired position you to:

- Adapt to Emerging Technologies

  - AI/ML Integration

  - Cloud-Native Solutions

  - Real-Time Analytics

- Lead Technical Initiatives

  - Architecture Design

  - Team Mentorship

  - Innovation Projects

- Drive Business Value

  - Data-Driven Decision Making

  - Process Automation

  - Predictive Analytics

Quick Reference Summary

📊 Core Advanced Python Features Mastered:

- Functional Programming

- Memory Optimization

- Parallel Processing

- Custom Data Structures

- Advanced Pandas Operations

🚀 Performance Improvements Achieved:

- Vectorized Operations

- Optimized Memory Usage

- Efficient Data Structures

- Parallel Computing

- Streamlined Workflows

Next Steps in Your Journey

To continue building on your advanced Python data analysis skills:

- Practice Regularly

  - Build personal projects

  - Contribute to open-source

  - Solve real-world problems

- Stay Updated

  - Follow Python enhancement proposals

  - Monitor data science trends

  - Engage with the community

- Share Knowledge

  - Write technical blogs

  - Mentor others

  - Participate in forums

Success Metrics

Track your progress using these benchmarks:

- Code execution speed improvements

- Memory usage optimization

- Project complexity handling

- Team collaboration effectiveness
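One way to track the first two benchmarks is to wrap a function call with a timer and a memory tracer. The sketch below uses only the standard library; the helper name `measure` and the two sample functions are illustrative assumptions, not part of this guide. `tracemalloc` adds overhead of its own, so the timings are best compared with each other rather than read as absolute numbers.

```python
import time
import tracemalloc


def measure(func, *args, **kwargs):
    """Return (result, elapsed_seconds, peak_bytes) for a single call."""
    tracemalloc.start()
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    tracemalloc.stop()
    return result, elapsed, peak


def squares_list(n):
    # Builds the full list in memory before summing
    return sum([i * i for i in range(n)])


def squares_generator(n):
    # Streams values one at a time, so no intermediate list is kept
    return sum(i * i for i in range(n))


for candidate in (squares_list, squares_generator):
    value, seconds, peak = measure(candidate, 100_000)
    print(f"{candidate.__name__}: {seconds:.4f}s, peak {peak / 1024:.0f} KiB")
```

Recording these numbers before and after a change gives a concrete baseline for the speed and memory benchmarks listed above.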

Final Thoughts

Mastering Advanced Python for Data Analysis is not just about writing complex code – it’s about solving real-world problems efficiently and effectively. The techniques and approaches covered in this guide provide a robust foundation for tackling challenging data analysis projects and advancing your career in data science.

Keep in Mind:

“In data analysis, as in life, it’s not just about having the right tools – it’s about knowing how to use them wisely.”

Looking Forward

The field of data analysis continues to evolve, and Python remains at the forefront of this evolution. Your investment in advanced Python skills positions you to:

- Lead innovative projects

- Solve complex problems

- Drive technological advancement

- Shape the future of data analysis

Continuing Education Resources

Stay ahead with these recommended resources:

- 📚 Python Data Science Handbook

- 🎓 Advanced Python Programming Courses

- 🔬 Research Papers and Case Studies

- 💻 GitHub Repositories and Code Examples

Get Involved

Join the community and continue your learning:

- Python Data Analysis Forums

- Local Data Science Meetups

- Online Code Review Groups

- Professional Networks

Remember: The journey to mastering Advanced Python for Data Analysis is ongoing. Each project, challenge, and solution adds to your expertise and capabilities.

Frequently Asked Questions About Advanced Python Data Analysis

To excel in advanced Python data analysis, you’ll need:

- Strong understanding of Python fundamentals (OOP, functional programming)

- Expertise in key libraries (NumPy, Pandas, Scikit-learn)

- Data manipulation and cleaning techniques

- Statistical analysis and mathematical concepts

- Performance optimization and memory management

- Version control (Git) and development workflows

Additionally, knowledge of SQL, data visualization libraries, and big data tools will give you an edge.

Here are key strategies for optimizing Pandas operations:

- Use appropriate data types (e.g., category for low-cardinality string columns)

- Process large datasets in chunks rather than loading them all at once

- Leverage vectorized operations instead of loops

- Utilize parallel processing with Dask or multiprocessing

# Using an appropriate data type
df['category'] = df['category'].astype('category')

# Using vectorized operations
df['new_col'] = df['col1'] * 2 + df['col2']
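The chunking strategy from the list can be sketched as follows. This is a minimal example, assuming a small in-memory CSV stands in for a large file; the column names reuse those from the snippet above, and the sample values are made up for illustration.

```python
import io

import pandas as pd

# Stand-in for a large CSV file on disk (illustrative data only)
csv_text = "category,col1,col2\na,1,10\nb,2,20\na,3,30\nb,4,40\na,5,50\n"

totals = {}
reader = pd.read_csv(
    io.StringIO(csv_text),
    chunksize=2,                      # rows held in memory at once
    dtype={"category": "category"},   # compact dtype for repeated strings
)
for chunk in reader:
    # Vectorized column arithmetic on the current chunk only
    chunk["new_col"] = chunk["col1"] * 2 + chunk["col2"]
    partial = chunk.groupby("category", observed=True)["new_col"].sum()
    # Fold the per-chunk result into the running totals
    for key, value in partial.items():
        totals[key] = totals.get(key, 0) + int(value)

print(totals)  # {'a': 108, 'b': 72}
```

Only one chunk is in memory at a time, so the same pattern applies to files that are larger than available RAM, provided the aggregation can be combined across chunks.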

Handling missing data requires a strategic approach:

- Understand the nature of missingness (MCAR, MAR, MNAR)

- Use appropriate imputation methods based on data type

- Consider advanced techniques like multiple imputation

- Document your missing data strategy

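# IterativeImputer is experimental and must be enabled explicitly before import
from sklearn.experimental import enable_iterative_imputer  # noqa: F401
from sklearn.impute import IterativeImputer

imputer = IterativeImputer(random_state=0)
imputed_data = imputer.fit_transform(df)  # returns a NumPy array; df must be numeric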
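Before reaching for model-based imputation, it often helps to measure how much is missing and try a simple baseline that depends on data type. The sketch below is one possible baseline, not a recommendation for every dataset: the sample frame is made up, and median/mode filling is an assumption that can bias results when data are not missing completely at random.

```python
import numpy as np
import pandas as pd

# Illustrative data with one missing numeric and one missing text value
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 35.0],
    "city": ["Oslo", "Lima", None, "Lima"],
})

# Share of missing values per column, worth documenting alongside the strategy
missing_share = df.isna().mean()
print(missing_share)

imputed = df.copy()
for column in imputed.columns:
    if pd.api.types.is_numeric_dtype(imputed[column]):
        # Numeric columns: fill with the median of the observed values
        imputed[column] = imputed[column].fillna(imputed[column].median())
    else:
        # Non-numeric columns: fill with the most frequent observed value
        imputed[column] = imputed[column].fillna(imputed[column].mode().iloc[0])

print(imputed)
```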

Parallel processing can be implemented through several approaches:

- multiprocessing for CPU-bound tasks

- concurrent.futures for simple parallelization

- Dask for distributed computing

- Ray for complex parallel algorithms

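from multiprocessing import Pool

def process_chunk(data):
    # Reduce one chunk (e.g. a DataFrame or array) to a single value
    return data.sum()

if __name__ == "__main__":  # required where workers are spawned (Windows, macOS)
    # data_chunks is assumed to be a list of chunks prepared beforehand
    with Pool(4) as p:
        results = p.map(process_chunk, data_chunks)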
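The `concurrent.futures` option from the list offers the same map-style pattern behind a smaller API. The sketch below uses `ThreadPoolExecutor` so it runs anywhere without a `__main__` guard; threads mainly help with I/O-bound work, and for CPU-bound work `ProcessPoolExecutor` can be swapped in (with the guard). The helper names `split`, `chunk_total`, and `parallel_total` are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_total(chunk):
    """Reduce one chunk to a single value."""
    return sum(chunk)


def split(values, n_chunks):
    """Split a list into at most n_chunks contiguous pieces."""
    size = -(-len(values) // n_chunks)  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_total(values, n_chunks=4):
    if not values:
        return 0
    chunks = split(values, n_chunks)
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        # map() returns results in the same order as the input chunks
        partials = list(pool.map(chunk_total, chunks))
    return sum(partials)


total = parallel_total(list(range(1_000)))
print(total)  # 499500
```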

For complex data visualization, consider:

- Interactive plots with Plotly or Bokeh

- Dimensionality reduction techniques (PCA, t-SNE)

- Custom visualization functions

- Dashboard creation with Dash or Streamlit
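As an illustration of the dimensionality-reduction item, the sketch below projects data onto its first two principal components using only NumPy's SVD, producing coordinates that could then be passed to any plotting library. The function name `pca_project` and the random sample data are assumptions for the example; in practice a library implementation such as scikit-learn's PCA would usually be used instead.

```python
import numpy as np


def pca_project(X, n_components=2):
    """Project the rows of X onto the top principal components via SVD."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing variance
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))   # 200 samples, 5 features (synthetic)
coords = pca_project(X)
print(coords.shape)  # (200, 2)
```

The two output columns can serve as x and y coordinates in a scatter plot.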

To make your code production-ready:

- Implement proper error handling and logging

- Write comprehensive unit tests

- Use type hints and documentation

- Follow code style guidelines (PEP 8)

- Implement monitoring and alerting

- Use version control and CI/CD pipelines

import logging
from typing import Dict, List

import pandas as pd

def process_data(data: List[Dict]) -> pd.DataFrame:
    """Build a DataFrame from a list of records, logging any failure."""
    logging.info(f"Processing {len(data)} records")
    try:
        return pd.DataFrame(data)
    except Exception as e:
        logging.error(f"Error processing data: {e}")
        raise
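The unit-testing item from the list can be sketched with the standard library's `unittest`. The function under test, `clean_records`, is a hypothetical helper invented for this example; the point is the shape of the tests, which cover a normal case, a filtering case, and an error case.

```python
import unittest


def clean_records(records):
    """Drop records without an 'id' and coerce 'value' to float."""
    cleaned = []
    for record in records:
        if record.get("id") is None:
            continue
        cleaned.append({"id": record["id"], "value": float(record.get("value", 0))})
    return cleaned


class CleanRecordsTest(unittest.TestCase):
    def test_drops_records_without_id(self):
        self.assertEqual(clean_records([{"value": 1}]), [])

    def test_coerces_value_to_float(self):
        cleaned = clean_records([{"id": 1, "value": "2.5"}])
        self.assertEqual(cleaned, [{"id": 1, "value": 2.5}])

    def test_rejects_non_numeric_value(self):
        with self.assertRaises(ValueError):
            clean_records([{"id": 1, "value": "n/a"}])


# Run the suite programmatically; in a project, a test runner would do this
suite = unittest.defaultTestLoader.loadTestsFromTestCase(CleanRecordsTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

In a CI/CD pipeline the same tests would typically be discovered and run automatically on each commit.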

Effective memory management involves:

- Using appropriate data types

- Implementing chunking for large datasets

- Utilizing memory-mapped files

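- Releasing references to large intermediate objects so the garbage collector can reclaim them (gc.collect() can force a collection when needed)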

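import numpy as np

# Memory-mapped array backed by a raw binary file that must already exist (mode='r+').
# For .npy files written by np.save, use np.load(path, mmap_mode='r+') instead.
data = np.memmap('large_array.dat',
                 dtype='float64',
                 mode='r+',
                 shape=(1000000,))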
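Opening a memory-mapped array in `r+` mode assumes the backing file already exists. The sketch below shows the full round trip under that assumption: create the file with `mode="w+"`, then reopen it read-only and process it block by block. The temporary path and the block size are illustrative choices.

```python
import os
import tempfile

import numpy as np

path = os.path.join(tempfile.mkdtemp(), "large_array.dat")

# mode="w+" creates the backing file; dtype and shape are not stored in it,
# so they must be supplied again whenever the file is reopened
writer = np.memmap(path, dtype="float64", mode="w+", shape=(1_000_000,))
writer[:] = 1.0
writer.flush()   # push pending changes to disk
del writer       # drop the reference to the mapping

reader = np.memmap(path, dtype="float64", mode="r", shape=(1_000_000,))
block_size = 100_000
total = 0.0
for start in range(0, reader.shape[0], block_size):
    # Only the slice being summed needs to be paged into memory
    total += float(reader[start:start + block_size].sum())

print(total)  # 1000000.0
```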