Machine Learning Techniques: Comprehensive Guide

Machine learning techniques have become powerful tools that are transforming industries from healthcare to finance. This guide covers the fundamental concepts of machine learning, its essential and advanced techniques, and how to choose and implement them for real-world applications.

What are machine learning techniques?

Machine learning techniques are algorithmic approaches that enable computers to learn from data and improve their performance on specific tasks without being explicitly programmed. These techniques form the backbone of artificial intelligence (AI) systems, allowing them to recognize patterns, make predictions, and solve complex problems.

At its core, machine learning is about developing algorithms that can:

- Learn from experience: Improve performance as more data is processed

- Generalize: Apply learned knowledge to new, unseen situations

- Adapt: Modify behavior based on changing environments or requirements

Machine learning techniques encompass a wide range of approaches, from simple linear regression models to complex deep neural networks. Each technique has its strengths and is suited for different types of problems and data.

The evolution and importance of machine learning in today’s world

The journey of machine learning from theoretical concept to practical application spans more than seven decades. Here are some key milestones:

- 1950: Alan Turing proposes the Turing Test

- 1957: Frank Rosenblatt designs the Perceptron

- 1980: Kunihiko Fukushima introduces the Neocognitron, a precursor to CNNs

- 1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov

- 2012: AlexNet, a deep CNN, wins the ImageNet image recognition challenge by a wide margin, a breakthrough for deep learning

- 2016: AlphaGo defeats Lee Sedol, one of the world’s strongest Go players

- 2020: GPT-3 demonstrates advanced language capabilities

- Today: Machine learning techniques continue to evolve

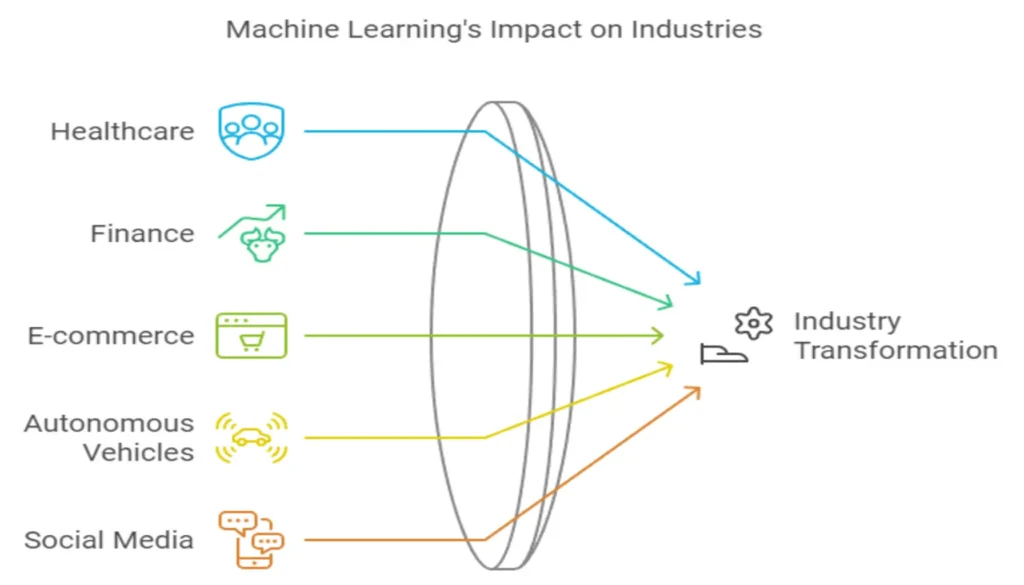

Machine learning has become a cornerstone of innovation across many sectors:

- Healthcare: ML techniques are used for disease diagnosis, drug discovery, and personalized treatment plans.

- Finance: Fraud detection, algorithmic trading, and credit scoring rely heavily on ML algorithms.

- E-commerce: Recommendation systems and customer behavior analysis are powered by ML.

- Transportation: Self-driving cars and traffic prediction systems utilize advanced ML techniques.

- Entertainment: Content recommendation on streaming platforms is driven by sophisticated ML models.

As we continue to generate vast amounts of data, the role of machine learning in extracting meaningful insights and driving decision-making processes becomes increasingly crucial.

Brief history of machine learning and AI

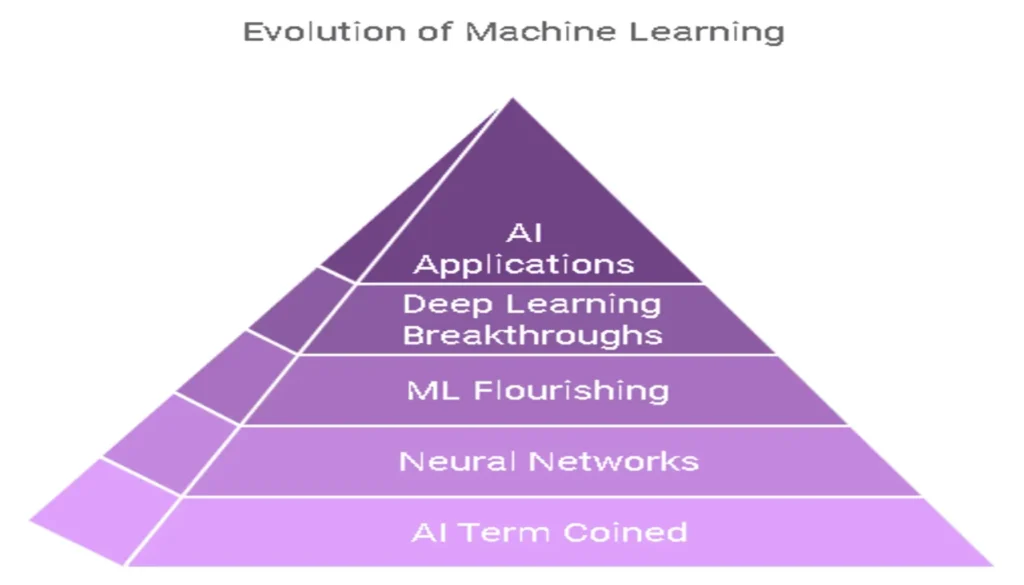

The roots of machine learning and artificial intelligence can be traced back to the mid-20th century. Here’s a brief overview of key milestones:

- 1950s: The term “Artificial Intelligence” is coined, and early AI research focuses on problem-solving and symbolic methods.

- 1960s-1970s: Development of neural network architectures and the concept of backpropagation.

- 1980s-1990s: Machine learning begins to flourish with the introduction of decision trees, support vector machines, and the revival of neural networks.

- 2000s: Rapid advancements in computational power and data availability lead to breakthroughs in deep learning.

- 2010s-Present: Explosion of AI applications across industries, with significant progress in natural language processing, computer vision, and reinforcement learning.

This rich history has laid the foundation for the diverse and powerful machine learning techniques we use today. As we delve deeper into this guide, we’ll explore these techniques in detail, understanding their principles, applications, and the future they’re shaping.


In the next section, we’ll dive into the fundamentals of machine learning, exploring the different types of learning and key concepts that form the basis of all machine learning techniques.

Fundamentals of Machine Learning

Understanding the fundamentals of machine learning is crucial for grasping the intricacies of various machine learning techniques. This section will delve into the different types of machine learning and key concepts that form the foundation of this field.

Types of Machine Learning

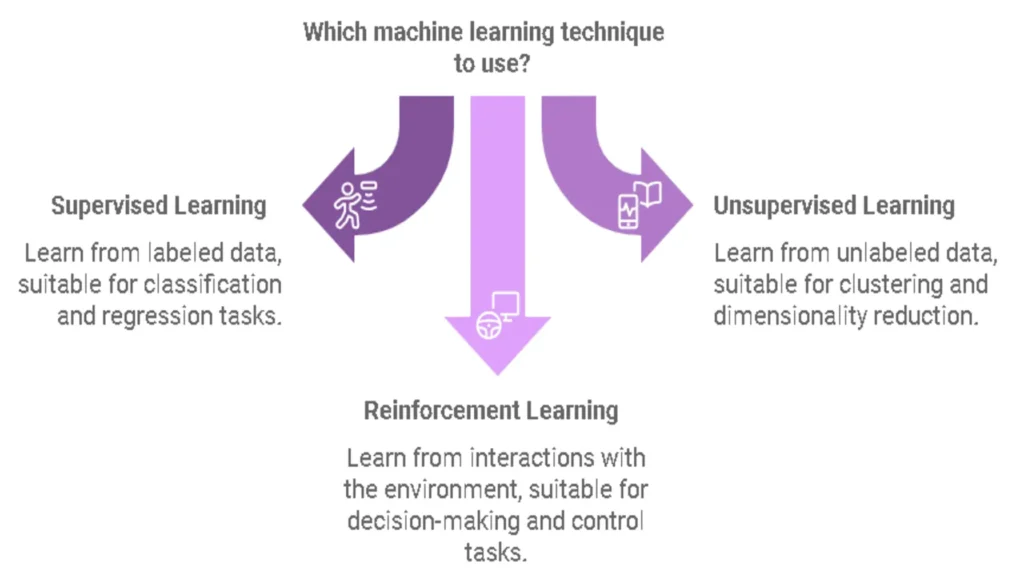

Machine learning can be broadly categorized into four main types, each with its unique approach to learning from data:

Supervised Learning

Supervised learning is perhaps the most common type of machine learning. In this approach, the algorithm learns from labeled data, where both the input features and the corresponding output labels are provided.

Key characteristics of supervised learning:

- Requires labeled training data

- Learns to map inputs to outputs

- Aims to predict outcomes for new, unseen data

Common applications:

- Image classification

- Spam detection

- Sales prediction

Example: Home Price Prediction

A supervised learning algorithm could be trained on historical home sale data, where features might include square footage, number of bedrooms, and location. The label would be the sale price. Once trained, the model could predict prices for new homes based on their features.

Unsupervised Learning

Unsupervised learning deals with unlabeled data. The algorithm tries to find patterns or structures within the data without any predefined labels.

Key characteristics of unsupervised learning:

- Works with unlabeled data

- Discovers hidden patterns or structures

- Often used for exploratory data analysis

Common applications:

- Customer segmentation

- Anomaly detection

- Topic modeling in text analysis

Reinforcement Learning

Reinforcement learning is inspired by behaviorist psychology. It involves an agent that learns to make decisions by performing actions in an environment to maximize a reward.

Key characteristics of reinforcement learning:

- Involves an agent interacting with an environment

- Learns through trial and error

- Aims to maximize a cumulative reward

Common applications:

- Game playing (e.g., AlphaGo)

- Robotics

- Autonomous driving

Semi-Supervised Learning

Semi-supervised learning is a hybrid approach that uses both labeled and unlabeled data for training, typically a small amount of labeled data and a large amount of unlabeled data.

Key characteristics of semi-supervised learning:

- Combines aspects of supervised and unsupervised learning

- Useful when labeling data is expensive or time-consuming

- Can improve accuracy compared with training on the labeled data alone

Common applications:

- Speech analysis

- Internet content classification

- Protein sequence classification

| Learning Type | Data | Goal | Example Application |
|---|---|---|---|
| Supervised | Labeled | Prediction | Email spam detection |
| Unsupervised | Unlabeled | Pattern discovery | Customer segmentation |
| Reinforcement | Rewards | Decision making | Game strategies |
| Semi-supervised | Partially labeled | Improved accuracy | Image classification with limited labels |

Key Concepts in Machine Learning

To effectively apply machine learning techniques, it’s essential to understand several fundamental concepts:

Features and Labels

- Features (also called predictors or independent variables) are the input variables used to make predictions.

- Labels (also called targets or dependent variables) are the output variables that the model aims to predict in supervised learning.

Example: In a house price prediction model:

- Features might include square footage, number of bedrooms, location, etc.

- The label would be the house price.

Training, Validation, and Testing Data

To build and evaluate machine learning models, we typically split our data into three sets:

- Training data: Used to train the model

- Validation data: Used to tune model hyperparameters and compare candidate models, which helps detect overfitting

- Testing data: Used to evaluate the final model performance
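A common way to produce the three sets is to call scikit-learn’s `train_test_split` twice. The sketch below assumes a 60/20/20 split and uses randomly generated placeholder data; the proportions are a convention, not a rule.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder dataset (assumed): 100 samples, 3 features, binary labels
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = rng.integers(0, 2, size=100)

# First hold out 20% of the data as the test set...
X_temp, X_test, y_temp, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
# ...then split the remaining 80% into train and validation (0.25 * 80% = 20%)
X_train, X_val, y_train, y_val = train_test_split(X_temp, y_temp, test_size=0.25, random_state=42)

print(len(X_train), len(X_val), len(X_test))  # 60 20 20
```

The test set should be touched only once, at the very end; repeatedly checking it while tuning turns it into a second validation set.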

Model Evaluation Metrics

Different metrics are used to evaluate model performance, depending on the type of problem:

- Regression metrics: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE)

- Classification metrics: Accuracy, Precision, Recall, F1-Score, ROC AUC

- Clustering metrics: Silhouette Score, Calinski-Harabasz Index
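The regression and classification metrics above are all available in `sklearn.metrics`. The following sketch computes them on small invented arrays so the numbers can be checked by hand.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, f1_score, mean_absolute_error,
                             mean_squared_error, precision_score, recall_score)

# Regression: toy true values and predictions (errors: -0.5, 0, 0.5, 1.0)
y_true_reg = np.array([3.0, 5.0, 2.5, 7.0])
y_pred_reg = np.array([2.5, 5.0, 3.0, 8.0])
mse = mean_squared_error(y_true_reg, y_pred_reg)   # (0.25 + 0 + 0.25 + 1) / 4 = 0.375
rmse = np.sqrt(mse)                                 # about 0.612
mae = mean_absolute_error(y_true_reg, y_pred_reg)  # (0.5 + 0 + 0.5 + 1) / 4 = 0.5

# Binary classification: toy labels (1 = positive class)
# TP = 3, FP = 1, FN = 1, TN = 1
y_true_clf = [1, 0, 1, 1, 0, 1]
y_pred_clf = [1, 0, 0, 1, 1, 1]
acc = accuracy_score(y_true_clf, y_pred_clf)    # 4 / 6
prec = precision_score(y_true_clf, y_pred_clf)  # TP / (TP + FP) = 0.75
rec = recall_score(y_true_clf, y_pred_clf)      # TP / (TP + FN) = 0.75
f1 = f1_score(y_true_clf, y_pred_clf)           # harmonic mean of precision and recall = 0.75

print(f"MSE={mse:.3f} RMSE={rmse:.3f} MAE={mae:.3f}")
print(f"accuracy={acc:.3f} precision={prec:.2f} recall={rec:.2f} F1={f1:.2f}")
```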


Overfitting and Underfitting

- Overfitting occurs when a model learns the training data too well, including its noise and peculiarities, leading to poor generalization on new data.

- Underfitting happens when a model is too simple to capture the underlying pattern in the data, resulting in poor performance on both training and new data.

[Figure: underfitting, good fit, and overfitting]

Understanding these fundamental concepts is crucial for effectively applying machine learning techniques. They form the foundation upon which more advanced topics and sophisticated algorithms are built.

In the next section, we’ll delve into essential machine learning techniques, exploring how these fundamental concepts are applied in practice to solve real-world problems.

Essential Machine Learning Techniques

In this section, we’ll explore the foundational techniques that form the backbone of machine learning. These methods are essential for any data scientist or machine learning practitioner to understand and master.

Regression Techniques

Regression techniques are fundamental machine learning algorithms used to predict continuous numerical values. They’re widely applied in forecasting, trend analysis, and understanding relationships between variables.

Linear Regression

Linear regression is the simplest and most widely used regression technique. It models the relationship between a dependent variable and one or more independent variables by fitting a linear equation to the observed data.

Key Concepts:

- Simple Linear Regression: One independent variable

- Multiple Linear Regression: Two or more independent variables

- Ordinary Least Squares (OLS): Estimates the coefficients by minimizing the sum of squared differences between observed and predicted values

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Sample data: one feature (X must be 2-D: n_samples x n_features)
X = np.array([[1], [2], [3], [4], [5]])
y = np.array([2, 4, 5, 4, 5])

# Create and fit the model (ordinary least squares)
model = LinearRegression()
model.fit(X, y)

# Make predictions
X_new = np.array([[6]])
y_pred = model.predict(X_new)
print(f"Prediction for X=6: {y_pred[0]:.2f}")  # Prediction for X=6: 5.80
```
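To see what OLS computes under the hood, the same coefficients can be obtained with NumPy’s least-squares solver. This is a sketch for illustration; `np.linalg.lstsq` minimizes the squared error of `X_b @ theta - y`, where a column of ones is prepended so the first coefficient acts as the intercept.

```python
import numpy as np

# Same toy data as the scikit-learn example above
X = np.array([[1], [2], [3], [4], [5]], dtype=float)
y = np.array([2, 4, 5, 4, 5], dtype=float)

# Prepend a column of ones so theta[0] is the intercept
X_b = np.hstack([np.ones((len(X), 1)), X])

# OLS: choose theta that minimizes ||X_b @ theta - y||^2
theta, *_ = np.linalg.lstsq(X_b, y, rcond=None)
intercept, slope = theta
print(f"intercept={intercept:.2f}, slope={slope:.2f}")  # intercept=2.20, slope=0.60
```

The fitted line is y = 2.2 + 0.6x, so the prediction at x = 6 is 2.2 + 3.6 = 5.8, matching the scikit-learn result.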

Polynomial Regression

Polynomial regression extends linear regression by modeling the relationship between the independent and dependent variables as an nth degree polynomial.

Use Cases:

- Modeling non-linear relationships

- Capturing complex patterns in data

Did You Know?

Polynomial regression can lead to overfitting if the degree of the polynomial is too high. Always validate your model using techniques like cross-validation.
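Polynomial regression is usually implemented as linear regression on expanded features (x, x², …, xⁿ). The sketch below, which assumes synthetic data drawn from a noisy quadratic, compares several degrees by 5-fold cross-validated mean squared error, the validation approach recommended above.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic non-linear data (assumed for illustration): y = 0.5x^2 - x + noise
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(60, 1))
y = 0.5 * X[:, 0] ** 2 - X[:, 0] + rng.normal(scale=0.5, size=60)

scores = {}
for degree in (1, 2, 10):
    # Expand features to polynomial terms, then fit ordinary linear regression
    model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
    # scikit-learn reports negated MSE so that "higher is better"; flip the sign back
    mse = -cross_val_score(model, X, y, cv=5, scoring="neg_mean_squared_error").mean()
    scores[degree] = mse
    print(f"degree={degree:2d}  CV MSE={mse:.3f}")
```

Degree 1 underfits the curved data; very high degrees tend to chase noise, which cross-validation exposes as a worse held-out error.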

Logistic Regression

Despite its name, logistic regression is primarily used for classification problems. It predicts the probability of an instance belonging to a particular class.

Key Features:

- Binary and multi-class classification

- Outputs probabilities between 0 and 1

- Uses the logistic (sigmoid) function
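A minimal scikit-learn sketch follows; the "hours studied" data is invented for illustration. It shows that, for a binary problem, the predicted probability of class 1 is the sigmoid of the linear score w·x + b.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Invented 1-D data: hours studied -> fail (0) / pass (1)
X = np.array([[0.5], [1.0], [1.5], [2.0], [2.5], [3.0], [3.5], [4.0]])
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])

clf = LogisticRegression().fit(X, y)

# predict_proba returns one column per class; each row sums to 1
proba = clf.predict_proba([[1.0], [4.0]])
print(proba[:, 1])  # probability of class 1 for x = 1.0 and x = 4.0

# The same probability by hand: sigmoid(w * x + b) at x = 4.0
z = clf.coef_[0, 0] * 4.0 + clf.intercept_[0]
p_manual = 1 / (1 + np.exp(-z))
print(p_manual)
```

To turn probabilities into class labels, `predict` applies a 0.5 threshold; other thresholds can be used when false positives and false negatives have different costs.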


Classification Techniques

Classification techniques are used to predict discrete class labels for new instances based on past observations.

Decision Trees

Decision trees are versatile algorithms used for both classification and regression tasks. They make decisions by splitting the data based on feature values.

Advantages:

- Easy to interpret and visualize

- Can handle both numerical and categorical data

- Requires little data preparation
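The interpretability of decision trees is easy to see in practice. This sketch fits a shallow tree to scikit-learn’s bundled Iris dataset and prints the learned splits as readable rules; `max_depth=2` is an arbitrary choice to keep the output short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# Limit depth to keep the tree small and readable (this also curbs overfitting)
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(iris.data, iris.target)

# export_text renders the learned if/else splits on feature values
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```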

Random Forests

Random forests are an ensemble learning method that constructs multiple decision trees and merges them to get a more accurate and stable prediction.

Key Concepts:

- Bagging: Bootstrap aggregating for dataset creation

- Feature randomness: Subset of features considered at each split

- Voting: Majority vote for classification, average for regression
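The three ideas above map directly onto parameters of scikit-learn’s `RandomForestClassifier`. The sketch below uses the Iris dataset and illustrative parameter values.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

forest = RandomForestClassifier(
    n_estimators=100,     # number of trees in the ensemble
    bootstrap=True,       # bagging: each tree trains on a bootstrap sample
    max_features="sqrt",  # feature randomness: features considered at each split
    random_state=0,
)
forest.fit(X_train, y_train)
acc = forest.score(X_test, y_test)
print(f"test accuracy: {acc:.3f}")
```

One detail worth knowing: scikit-learn’s classifier combines trees by averaging their predicted class probabilities (soft voting) rather than by a strict majority vote, which usually gives very similar results.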

Support Vector Machines (SVM)

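SVMs are powerful algorithms used for classification, regression, and outlier detection. For classification, they find the maximum-margin hyperplane, the boundary that separates the classes with the widest possible gap, possibly after implicitly mapping the data into a higher-dimensional space via a kernel.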

Types of SVMs:

- Linear SVM

- Non-linear SVM (using kernel tricks)

- Multi-class SVM
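The effect of the kernel trick shows up clearly on data that no straight line can separate. This sketch uses scikit-learn’s synthetic `make_moons` dataset and compares a linear SVM with an RBF-kernel SVM; the scores are training accuracy, used here only to show how well each boundary can fit the shape.

```python
from sklearn.datasets import make_moons
from sklearn.svm import SVC

# Two interleaving half-circles: not linearly separable
X, y = make_moons(n_samples=200, noise=0.1, random_state=0)

linear_svm = SVC(kernel="linear").fit(X, y)
rbf_svm = SVC(kernel="rbf", gamma="scale").fit(X, y)  # non-linear via the kernel trick

linear_acc = linear_svm.score(X, y)
rbf_acc = rbf_svm.score(X, y)
print(f"linear: {linear_acc:.2f}, RBF: {rbf_acc:.2f} (training accuracy)")
```

For more than two classes, scikit-learn’s `SVC` handles multi-class problems internally with a one-vs-one scheme, so the same code works unchanged.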

Clustering Techniques

Clustering is an unsupervised learning technique used to group similar data points together without predefined labels.

K-means Clustering

K-means is a popular clustering algorithm that partitions n observations into k clusters, where each observation belongs to the cluster with the nearest mean (centroid).

Algorithm Steps:

1. Initialize k centroids randomly

2. Assign each data point to the nearest centroid

3. Recalculate centroids based on assigned points

4. Repeat steps 2-3 until convergence
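The four steps can be written out directly in NumPy. This is a from-scratch sketch for learning purposes; it assumes initialization from k random data points and treats "convergence" as centroids no longer moving. In practice, `sklearn.cluster.KMeans` (which uses the smarter k-means++ initialization by default) is the usual choice.

```python
import numpy as np

def _assign(X, centroids):
    # Squared Euclidean distance from every point to every centroid
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)

def kmeans(X, k, n_iters=100, seed=0):
    rng = np.random.default_rng(seed)
    # Step 1: use k distinct random data points as initial centroids
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iters):
        labels = _assign(X, centroids)              # Step 2: nearest-centroid assignment
        new_centroids = np.array([                  # Step 3: recompute cluster means
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)                       # (keep old centroid if a cluster empties)
        ])
        if np.allclose(new_centroids, centroids):   # Step 4: stop once centroids settle
            break
        centroids = new_centroids
    return _assign(X, centroids), centroids

# Two well-separated groups of points
X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
labels, centroids = kmeans(X, k=2)
print(labels)
```

Because the result depends on the initial centroids, implementations typically run k-means several times from different starts and keep the solution with the lowest within-cluster sum of squares.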


Hierarchical Clustering

Hierarchical clustering creates a tree-like hierarchy of clusters, allowing for different levels of granularity in data grouping.

Types:

- Agglomerative (bottom-up approach)

- Divisive (top-down approach)
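scikit-learn implements the agglomerative variant. The sketch below, on a toy dataset, merges clusters bottom-up with Ward linkage and cuts the hierarchy at two clusters; the choice of linkage and cluster count is illustrative.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering

X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)

# Bottom-up: start with one cluster per point, then repeatedly merge the pair of
# clusters whose merge increases total within-cluster variance the least (Ward)
agg = AgglomerativeClustering(n_clusters=2, linkage="ward")
labels = agg.fit_predict(X)
print(labels)
```

Other linkage options (`"complete"`, `"average"`, `"single"`) define the distance between clusters differently and can produce quite different hierarchies.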

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN groups points that are closely packed together, marking points that lie alone in low-density regions as outliers.

Advantages:

- Does not require specifying the number of clusters a priori

- Can find arbitrarily shaped clusters

- Robust to outliers
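The sketch below runs scikit-learn’s `DBSCAN` on two dense groups plus one isolated point. No cluster count is given; instead `eps` (neighborhood radius) and `min_samples` (points, including the point itself, needed to form a dense core) are assumed values chosen for this toy data.

```python
import numpy as np
from sklearn.cluster import DBSCAN

# Two dense groups plus one isolated point
X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10],
              [50, 50]], dtype=float)

db = DBSCAN(eps=1.5, min_samples=2).fit(X)
print(db.labels_)  # [ 0  0  0  1  1  1 -1]; the label -1 marks noise (outliers)
```

Choosing `eps` is the main practical difficulty: too small and most points become noise, too large and separate clusters merge.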

Dimensionality Reduction Techniques

Dimensionality reduction techniques are used to reduce the number of features in a dataset while retaining most of the important information.

Principal Component Analysis (PCA)

PCA is a statistical procedure that uses orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components.

Key Concepts:

- Variance explanation

- Eigenvectors and eigenvalues

- Feature importance (loadings: how strongly each original feature contributes to each component)
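The sketch below applies scikit-learn’s `PCA` to the standardized Iris features and then cross-checks the key concepts: the variance explained by each component equals an eigenvalue of the covariance matrix, and the components are the corresponding eigenvectors.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X, _ = load_iris(return_X_y=True)
X_std = StandardScaler().fit_transform(X)  # PCA is sensitive to feature scale

pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_std)

print(X_2d.shape)                     # (150, 2)
print(pca.explained_variance_ratio_)  # share of total variance per component
print(pca.components_)                # rows are eigenvectors (loadings)

# Cross-check: explained variances are the largest eigenvalues of the covariance matrix
cov = np.cov(X_std, rowvar=False)
eigvals = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending order; reverse it
print(np.allclose(pca.explained_variance_, eigvals[:2]))  # True
```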

t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE is a machine learning algorithm for visualization developed by Laurens van der Maaten and Geoffrey Hinton. It is particularly well suited for the visualization of high-dimensional datasets.

Use Cases:

- Visualizing high-dimensional data in 2D or 3D space

- Exploring cluster structures in data
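A minimal sketch using scikit-learn’s `TSNE` on a subset of the bundled handwritten-digits dataset (64 pixel features per image) follows; the subset size and perplexity are illustrative choices.

```python
from sklearn.datasets import load_digits
from sklearn.manifold import TSNE

# 8x8 digit images flattened to 64 features; a subset keeps the run fast
X, y = load_digits(return_X_y=True)
X, y = X[:300], y[:300]

tsne = TSNE(n_components=2, perplexity=30, init="pca", random_state=0)
X_2d = tsne.fit_transform(X)
print(X_2d.shape)  # (300, 2)
```

The 2-D points can then be plotted (for example with a matplotlib scatter plot colored by `y`). Keep in mind that t-SNE preserves local neighborhoods, not global distances, and scikit-learn’s implementation has no `transform` method for embedding new points.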

Ensemble Methods

Ensemble methods combine multiple machine learning models to produce better predictive performance than could be obtained from any of the constituent models alone.

Bagging

Bagging, short for Bootstrap Aggregating, involves training multiple instances of the same algorithm on different subsets of the training data and then aggregating their predictions.

Popular Bagging Algorithm:

- Random Forest (combination of bagging with decision trees)
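scikit-learn also offers bagging as a generic wrapper around any estimator. The sketch compares a single decision tree with 50 bagged trees on the bundled breast-cancer dataset using 5-fold cross-validation; the estimator count is an assumed value.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

single_tree = DecisionTreeClassifier(random_state=0)
# 50 trees, each trained on a bootstrap sample (drawn with replacement);
# their predictions are aggregated into one ensemble prediction
bagged = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

results = {}
for name, model in [("single tree", single_tree), ("bagged trees", bagged)]:
    results[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: mean CV accuracy {results[name]:.3f}")
```

Bagging mainly reduces variance, so it helps most with unstable, high-variance learners such as fully grown decision trees.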

Boosting (including Gradient Boosting Machines)

Boosting methods train models sequentially, with each new model focusing on the errors of the previous ones.

Popular Boosting Algorithms:

- AdaBoost

- Gradient Boosting (e.g., XGBoost, LightGBM)

| Algorithm | Pros | Cons |
|---|---|---|
| AdaBoost | Simple to implement, automatically handles feature selection | Sensitive to noisy data and outliers |
| Gradient Boosting | High performance, flexible loss function | Can overfit if not properly tuned |
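The core idea of gradient boosting, each new model fitting the errors of the ensemble so far, fits in a few lines. This is a from-scratch sketch for squared-error regression, assuming shallow scikit-learn regression trees as weak learners; libraries such as XGBoost and LightGBM add many refinements (regularization, histogram-based splits, and more).

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def fit_gradient_boosting(X, y, n_rounds=100, learning_rate=0.1, max_depth=2):
    """Gradient boosting for squared-error regression with shallow trees."""
    base = y.mean()                    # initial model: a constant prediction
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_rounds):
        residuals = y - pred           # negative gradient of 0.5 * (y - pred)^2
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)         # the new tree models the current errors
        pred += learning_rate * tree.predict(X)  # shrunken correction
        trees.append(tree)
    return base, learning_rate, trees

def predict_gradient_boosting(model, X):
    base, learning_rate, trees = model
    # Constant plus the shrunken contribution of every tree
    return base + learning_rate * sum(tree.predict(X) for tree in trees)

X = np.linspace(0, 6, 100).reshape(-1, 1)
y = np.sin(X).ravel()

model = fit_gradient_boosting(X, y)
y_hat = predict_gradient_boosting(model, X)
mse_constant = np.mean((y - model[0]) ** 2)
mse_boosted = np.mean((y - y_hat) ** 2)
print(f"MSE constant: {mse_constant:.4f}, MSE boosted: {mse_boosted:.4f}")
```

The learning rate trades speed for robustness: smaller values need more rounds but usually generalize better, which is one reason gradient boosting "can overfit if not properly tuned".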

Stacking

Stacking involves training multiple different models and then training a meta-model to combine their predictions.

Steps in Stacking:

- Train base models on the original dataset

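- Make predictions with base models, ideally out-of-fold predictions from cross-validation, so the meta-model does not learn from predictions on data the base models were trained on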

- Use these predictions as features to train a meta-model
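scikit-learn’s `StackingClassifier` implements these steps, using cross-validated predictions for the meta-model. The sketch below stacks a random forest and an SVM under a logistic-regression meta-model on the Iris data; the choice of base models is illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

stack = StackingClassifier(
    estimators=[  # diverse base models
        ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
        ("svc", SVC(probability=True, random_state=0)),
    ],
    final_estimator=LogisticRegression(max_iter=1000),  # the meta-model
    cv=5,  # meta-model features come from 5-fold out-of-fold predictions
)
stack.fit(X_train, y_train)
acc = stack.score(X_test, y_test)
print(f"stacked test accuracy: {acc:.3f}")
```

Stacking tends to pay off when the base models make different kinds of errors; stacking several near-identical models adds cost without much benefit.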


These essential machine learning techniques form the foundation of many advanced algorithms and applications. By understanding these methods, data scientists and machine learning practitioners can tackle a wide range of problems and develop innovative solutions.

In the next section, we’ll explore more advanced machine learning techniques, including deep learning and natural language processing.

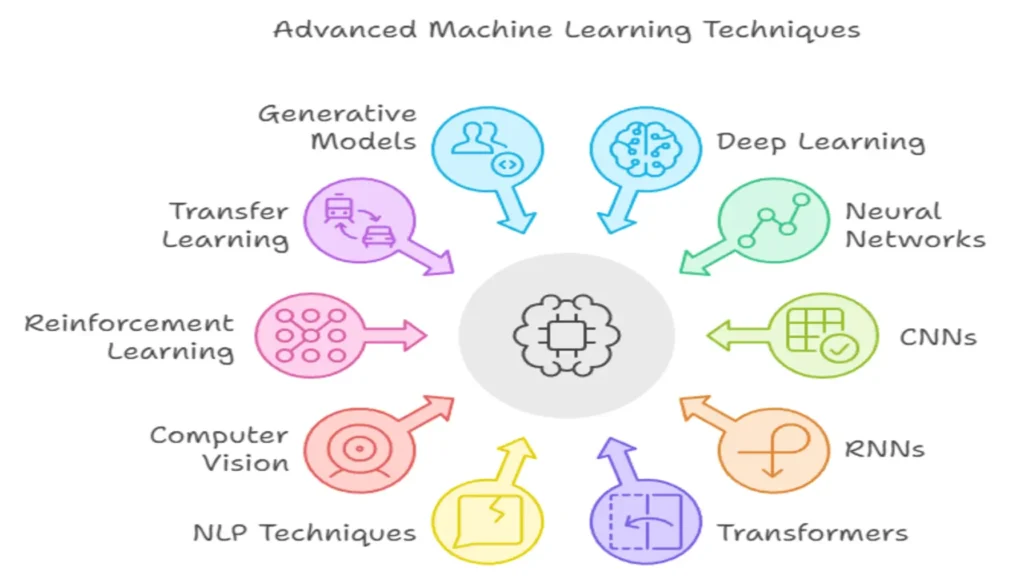

Advanced Machine Learning Techniques

As we delve deeper into the world of machine learning, we encounter a realm of sophisticated techniques that push the boundaries of what’s possible with artificial intelligence. These advanced machine learning techniques have revolutionized various fields and continue to drive innovation across industries.

Deep Learning Techniques

Deep learning, a subset of machine learning, has emerged as a powerhouse in solving complex problems. It’s loosely inspired by the structure of the human brain and uses artificial neural networks with many layers to learn from large amounts of data.

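Evolution of Machine Learning Techniques

- 1950s: Early concepts of AI. The first learning algorithms, such as the perceptron (1957), appear.

- 1980s-1990s: Rise of neural networks. Backpropagation is popularized (1986), making it practical to train multi-layer networks; LSTMs (1997) and early CNNs such as LeNet follow.

- 2010s: Deep learning revolution. GPUs and large-scale datasets such as ImageNet make it possible to train much deeper CNNs and RNNs, beginning with AlexNet in 2012.

- Late 2010s-2020s: Transformer models. Introduced in 2017, Transformers such as BERT and GPT come to dominate NLP and much of AI research.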

Neural Network Architectures

Neural networks are the backbone of deep learning. They consist of interconnected layers of nodes (neurons) that process and transmit information. The basic structure includes:

- Input Layer: Receives the initial data

- Hidden Layers: Process the information

- Output Layer: Produces the final result

The number of hidden layers and neurons in each layer can vary, leading to different architectures suited for various tasks.
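The input-hidden-output structure can be sketched as a forward pass in NumPy. This sketch assumes ReLU hidden activations, a softmax output, and He-style random initialization; the weights are untrained, so the outputs are meaningless until the network is fitted with backpropagation.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_mlp(layer_sizes, seed=0):
    """One (weights, bias) pair per pair of adjacent layers, e.g. [4, 16, 8, 3]."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]

def forward(params, X):
    h = X                        # input layer: the raw features
    for W, b in params[:-1]:
        h = relu(h @ W + b)      # hidden layers: weighted sum + non-linearity
    W, b = params[-1]
    return softmax(h @ W + b)    # output layer: class probabilities

params = init_mlp([4, 16, 8, 3])  # 4 inputs, two hidden layers (16 and 8), 3 outputs
X = np.random.default_rng(1).normal(size=(5, 4))
probs = forward(params, X)
print(probs.shape)  # (5, 3)
```

Changing the list passed to `init_mlp` changes the architecture: adding entries adds hidden layers, and larger numbers add neurons.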

Convolutional Neural Networks (CNN)

CNNs have revolutionized the field of computer vision. They’re designed to automatically and adaptively learn spatial hierarchies of features from input images.

Key components of CNNs include:

- Convolutional layers: Apply filters to detect features

- Pooling layers: Reduce spatial dimensions

- Fully connected layers: Perform high-level reasoning

CNNs have achieved remarkable success in tasks such as image classification, object detection, and facial recognition.
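The two core operations can be sketched in plain NumPy. Here a hand-written vertical-edge filter stands in for weights that a real CNN would learn; like most deep learning libraries, the "convolution" below is technically cross-correlation (the filter is not flipped).

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as in most DL libraries)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Slide the filter over the image; take a weighted sum of each patch
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

# 6x6 image: dark left half (0), bright right half (1), i.e. a vertical edge
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (in a CNN these weights are learned)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

features = conv2d(image, kernel)  # 4x4 feature map, strongest response at the edge
pooled = max_pool2d(features)     # 2x2 after pooling
print(features[0])  # [0. 3. 3. 0.]
print(pooled)       # [[3. 3.] [3. 3.]]
```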


Recurrent Neural Networks (RNN)

RNNs are designed to work with sequence data, making them ideal for tasks involving time series or natural language. They have connections that form directed cycles, allowing them to maintain an internal state or “memory”.

Types of RNNs include:

- Long Short-Term Memory (LSTM)

- Gated Recurrent Units (GRU)

These architectures help mitigate the vanishing gradient problem, allowing RNNs to learn long-term dependencies.
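The "memory" of an RNN is a hidden state that is updated at every time step from the current input and the previous state. This NumPy sketch shows a plain (vanilla) RNN forward pass with random, untrained weights; LSTMs and GRUs replace the single `tanh` update with gated updates, and are available ready-made as, for example, `torch.nn.LSTM` or `tf.keras.layers.LSTM`.

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b):
    """Vanilla RNN: h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b)."""
    h = np.zeros(W_h.shape[0])   # initial hidden state ("memory")
    states = []
    for x_t in xs:               # process the sequence one step at a time
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 3, 4, 5
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

xs = rng.normal(size=(seq_len, input_dim))
states = rnn_forward(xs, W_x, W_h, b)
print(states.shape)  # (5, 4): one hidden state per time step
```

Because the same `W_h` is applied at every step, gradients flowing back through many steps are repeatedly multiplied by it, which is the source of the vanishing gradient problem that LSTMs and GRUs mitigate.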

Deep Learning Techniques Comparison

| Technique | Primary Use | Key Advantage |
|---|---|---|
| Convolutional Neural Networks (CNN) | Image classification | High accuracy in image tasks |
| Recurrent Neural Networks (RNN) | Sequential data | Memory for sequence-based tasks |
| Transformers | Natural language processing | Models long-range dependencies directly; trains in parallel across sequence positions |

Transformers and Attention Mechanisms

Transformers have become the go-to architecture for many NLP tasks. They rely heavily on attention mechanisms, which allow the model to focus on different parts of the input when producing each part of the output.

Key innovations in Transformers include:

- Self-attention: Relates different positions of a single sequence

- Multi-head attention: Allows the model to focus on different aspects of the input simultaneously

Transformers have led to breakthroughs in language models like BERT and GPT.
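The central computation, scaled dot-product self-attention, is softmax(QKᵀ / √d_k) V. The NumPy sketch below computes it for a single head over one short sequence; the projection matrices are random stand-ins for learned weights.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention for one sequence X of shape (seq_len, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # how strongly each position relates to each other one
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ V, weights         # each output mixes the value vectors by those weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape, weights.sum(axis=1))  # (4, 8) and rows summing to 1
```

Multi-head attention runs several such computations in parallel, each with its own projection matrices, and concatenates the results, letting different heads attend to different aspects of the input.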

Natural Language Processing (NLP) Techniques

NLP is a field at the intersection of linguistics, computer science, and AI, focused on enabling computers to understand, interpret, and generate human language.

Word Embeddings

Word embeddings are dense vector representations of words that capture semantic relationships. Popular techniques include:

- Word2Vec

- GloVe (Global Vectors for Word Representation)

- FastText

These embeddings form the foundation for many NLP tasks, allowing models to understand the context and meaning of words.
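Semantic similarity between embeddings is usually measured with cosine similarity, the cosine of the angle between two vectors. The vectors below are made-up 3-dimensional values for illustration only; embeddings trained with Word2Vec, GloVe, or FastText commonly have 100 to 300 dimensions.

```python
import numpy as np

def cosine_similarity(u, v):
    # 1.0 = same direction, 0.0 = orthogonal (unrelated)
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical toy embeddings, not taken from any trained model
emb = {
    "king":  np.array([0.80, 0.65, 0.10]),
    "queen": np.array([0.75, 0.70, 0.15]),
    "apple": np.array([0.10, 0.20, 0.90]),
}

sim_king_queen = cosine_similarity(emb["king"], emb["queen"])
sim_king_apple = cosine_similarity(emb["king"], emb["apple"])
print(f"king/queen: {sim_king_queen:.3f}")  # close to 1: similar direction
print(f"king/apple: {sim_king_apple:.3f}")  # much lower
```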

Sentiment Analysis

Sentiment analysis determines the emotional tone of a piece of text, helping reveal the attitudes, opinions, and emotions it expresses.

Applications of sentiment analysis include:

- Social media monitoring

- Customer feedback analysis

- Market research
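A classic baseline treats sentiment analysis as text classification: convert text to TF-IDF features, then train a linear classifier. The training sentences below are a tiny invented toy set, far too small for real use; the sketch only illustrates the pipeline shape.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny invented training set; real systems need far more (and more varied) data
texts = [
    "I loved this movie, it was great",
    "great acting and a wonderful story",
    "what a wonderful, enjoyable film",
    "I hated this movie, it was terrible",
    "terrible acting and a boring story",
    "what a boring, awful film",
]
labels = ["positive"] * 3 + ["negative"] * 3

# TF-IDF turns each text into a weighted bag-of-words vector;
# logistic regression learns a weight per word
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(texts, labels)

predictions = model.predict(["a great and wonderful film", "a boring and awful film"])
print(predictions)
```

Bag-of-words baselines ignore word order, so they struggle with negation ("not great"); fine-tuned language models such as BERT handle such cases much better.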

Named Entity Recognition (NER)

NER is the task of identifying and classifying named entities (e.g., person names, organizations, locations) in text. It’s crucial for information extraction and question answering systems.

Techniques used in NER include:

- Rule-based systems

- Machine learning approaches (e.g., Conditional Random Fields)

- Deep learning models (e.g., Bi-LSTM-CRF)

Language Models (e.g., BERT, GPT)

Advanced language models have transformed NLP tasks. Two prominent examples are:

- BERT (Bidirectional Encoder Representations from Transformers): Excels at understanding context in both directions

- GPT (Generative Pre-trained Transformer): Powerful in generating human-like text

These models have achieved state-of-the-art results on a wide range of NLP tasks, from question answering to text generation.


Computer Vision Techniques

Computer vision enables machines to interpret and understand visual information, such as images and video, from the world.

Image Classification

Image classification involves assigning a label to an entire image. Deep learning models, particularly CNNs, have matched or exceeded reported human-level accuracy on some image classification benchmarks, such as ImageNet.

Popular image classification architectures include:

- ResNet

- Inception

- EfficientNet

Object Detection

Object detection goes beyond classification by identifying and locating multiple objects within an image. Key algorithms include:

- R-CNN (Region-based Convolutional Neural Networks) and its variants

- YOLO (You Only Look Once)

- SSD (Single Shot Detector)

These techniques have applications in autonomous vehicles, surveillance, and robotics.

Semantic Segmentation

Semantic segmentation involves assigning a class label to each pixel in an image. This technique is crucial for tasks requiring detailed scene understanding.

Applications of semantic segmentation include:

- Medical image analysis

- Autonomous driving

- Augmented reality

Reinforcement Learning Techniques

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by taking actions in an environment to maximize a reward.

Q-Learning

Q-learning is a model-free reinforcement learning algorithm. It learns a Q-function, which estimates the expected cumulative (discounted) reward for taking an action in a given state and acting optimally afterward.

Key concepts in Q-learning include:

- State-Action Value Function (Q-function)

- Exploration vs. Exploitation

- Bellman Equation
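The three concepts come together in the tabular Q-learning update Q(s, a) ← Q(s, a) + α[r + γ maxₐ′ Q(s′, a′) − Q(s, a)]. The sketch below applies it to a hypothetical five-state corridor (not from any library) where only reaching the rightmost state pays a reward; the values of α, γ, and ε are assumed.

```python
import numpy as np

# Hypothetical environment: states 0..4 in a corridor; action 0 = left, 1 = right.
# Reaching state 4 gives reward 1 and ends the episode; every other step gives 0.
N_STATES, N_ACTIONS, GOAL = 5, 2, 4

def step(state, action):
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

rng = np.random.default_rng(0)
Q = np.zeros((N_STATES, N_ACTIONS))   # Q-table: one value per (state, action)
alpha, gamma, epsilon = 0.1, 0.9, 0.1

for episode in range(1000):
    state = 0
    for _ in range(100):              # cap the episode length
        # Exploration vs. exploitation: epsilon-greedy, breaking ties at random
        if rng.random() < epsilon:
            action = int(rng.integers(N_ACTIONS))
        else:
            best = np.flatnonzero(Q[state] == Q[state].max())
            action = int(rng.choice(best))
        next_state, reward, done = step(state, action)
        # Bellman-based update: move Q toward reward + discounted best next value
        target = reward + (0.0 if done else gamma * Q[next_state].max())
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state
        if done:
            break

policy = Q.argmax(axis=1)
print(policy[:GOAL])  # greedy action in each non-terminal state: [1 1 1 1] (always "right")
```

The discount factor γ is why the learned values shrink with distance from the goal (about 1.0, 0.9, 0.81, 0.73 for "right" in states 3, 2, 1, 0), which is what makes the shortest path the preferred one.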

Policy Gradients

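Policy gradient methods optimize the policy directly, following the gradient of expected reward with respect to the policy’s parameters, rather than deriving actions from a learned value function (although actor-critic variants add one to reduce variance). They’re particularly useful in continuous action spaces.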

Advantages of policy gradients include:

- Can learn stochastic policies

- Effective in high-dimensional action spaces
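The simplest policy gradient method, REINFORCE, can be shown on a one-step problem. This sketch assumes a hypothetical three-armed bandit with fixed rewards and a softmax policy; it subtracts a running-average reward as a baseline, a common variance-reduction trick that is not a learned value function.

```python
import numpy as np

# Hypothetical 3-armed bandit with fixed, noise-free rewards per action
REWARDS = np.array([0.2, 0.5, 1.0])

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
theta = np.zeros(3)   # policy parameters: one preference per action
baseline = 0.0        # running average of rewards
lr = 0.1

for t in range(2000):
    probs = softmax(theta)               # stochastic policy pi(a) = softmax(theta)[a]
    action = rng.choice(3, p=probs)      # sample an action from the policy
    reward = REWARDS[action]
    # For a softmax policy, grad of log pi(action) w.r.t. theta is one_hot(action) - probs
    grad_log_pi = -probs
    grad_log_pi[action] += 1.0
    # REINFORCE: step in the direction that makes better-than-baseline actions more likely
    theta += lr * (reward - baseline) * grad_log_pi
    baseline += 0.05 * (reward - baseline)

final_probs = softmax(theta)
print(np.round(final_probs, 3))  # probability mass concentrates on action 2
```

Because the policy is a probability distribution, the same update works unchanged for stochastic policies, and for continuous actions the softmax is simply replaced by, for example, a Gaussian whose mean is produced by a network.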

Deep Reinforcement Learning

Deep reinforcement learning combines deep learning with reinforcement learning, enabling RL to scale to problems with high-dimensional state spaces.

Notable deep RL algorithms include:

- Deep Q-Network (DQN)

- Proximal Policy Optimization (PPO)

- Soft Actor-Critic (SAC)

These techniques have achieved remarkable results in game playing, robotics, and resource management.


Transfer Learning and Fine-Tuning

Transfer learning involves using knowledge gained from solving one problem and applying it to a different but related problem. It’s particularly useful when working with limited labeled data.

Benefits of transfer learning include:

- Reduced training time

- Improved performance on small datasets

- Lower computational requirements

Fine-tuning is a specific transfer learning technique where a pre-trained model is further trained on a specific task.

Generative Models

Generative models learn to generate new data that resembles the training data. They’ve gained significant attention for their ability to create realistic images, text, and even music.

Generative Adversarial Networks (GANs)

GANs consist of two neural networks—a generator and a discriminator—that are trained simultaneously through adversarial training.

Applications of GANs include:

- Image-to-image translation

- Super-resolution

- Data augmentation

Variational Autoencoders (VAEs)

VAEs are a class of generative models that learn a compressed representation of the input data. They’re particularly useful for generating diverse samples and learning disentangled representations.

Federated Learning

Federated learning is a machine learning technique that trains a shared model across multiple decentralized devices or servers holding local data samples, without exchanging the raw data; typically only model updates are shared.

Key advantages of federated learning:

- Enhanced privacy and security

- Reduced data transfer and storage costs

- Ability to leverage distributed data sources
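One common federated algorithm is Federated Averaging (FedAvg): clients train locally, and a server averages their model weights, weighted by local dataset size. The sketch below simulates FedAvg for linear regression with three hypothetical clients; it assumes all clients share the same underlying relationship, whereas real deployments often face heterogeneous (non-IID) client data.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    """A few gradient-descent steps on one client's private data (linear regression, MSE)."""
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def federated_averaging(clients, n_features, rounds=50):
    w = np.zeros(n_features)  # global model held by the server
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    for _ in range(rounds):
        # Each client trains locally; only updated weights leave the device
        local_weights = [local_update(w, X, y) for X, y in clients]
        # The server averages client models, weighted by local dataset size
        w = np.average(local_weights, axis=0, weights=sizes)
    return w

# Hypothetical setup: three clients whose data follow the same linear relationship
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (20, 30, 50):
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w))

w = federated_averaging(clients, n_features=2)
print(np.round(w, 3))  # close to [ 2. -1.]
```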

Quantum Machine Learning

Quantum machine learning is an emerging field that combines quantum computing with machine learning algorithms. Some quantum algorithms offer proven speedups for specific problems; whether similar advantages carry over to practical machine learning workloads remains an open research question.

Potential applications of quantum machine learning include:

- Optimization problems

- Sampling from high-dimensional probability distributions

- Quantum-enhanced feature spaces

While still in its early stages, quantum machine learning represents an exciting frontier in the field of AI.

These advanced machine learning techniques represent the cutting edge of AI research and application. As the field continues to evolve, we can expect these techniques to become more sophisticated, opening up new possibilities for solving complex problems across various domains.

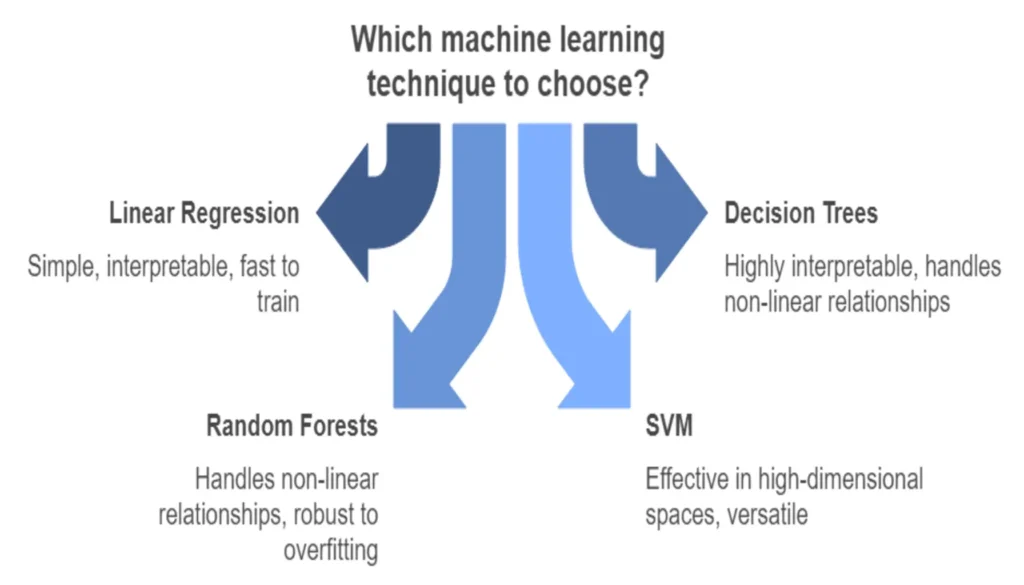

Choosing the Right Machine Learning Technique

Selecting the appropriate machine learning technique is crucial for the success of your project. This decision can significantly impact the performance, efficiency, and interpretability of your model. Let’s explore the key factors to consider, compare different techniques, and discuss common pitfalls to avoid.

Factors to consider

When choosing a machine learning technique, several critical factors come into play:

Problem type and dataset characteristics

The nature of your problem and the characteristics of your dataset are primary determinants in selecting the right technique. Consider the following:

- Problem type: Is it a classification, regression, clustering, or dimensionality reduction problem?

- Data type: Are you working with structured data, images, text, or time series?

- Dataset size: Do you have a large dataset or a limited amount of data?

- Feature complexity: Are the relationships between features linear or non-linear?

Here’s a quick reference table to help you match problem types with suitable machine learning techniques:

| Problem Type | Suitable Techniques |
|---|---|
| Classification | Logistic Regression, Decision Trees, Random Forests, Support Vector Machines, Neural Networks |
| Regression | Linear Regression, Polynomial Regression, Decision Trees, Random Forests, Neural Networks |
| Clustering | K-Means, Hierarchical Clustering, DBSCAN, Gaussian Mixture Models |
| Dimensionality Reduction | Principal Component Analysis (PCA), t-SNE, Autoencoders |

Computational resources and scalability

The availability of computational resources and the need for scalability can significantly influence your choice of technique:

- Training time: Some techniques, like deep neural networks, require substantial training time, especially with large datasets.

- Memory requirements: Certain algorithms, such as support vector machines, can be memory-intensive for large datasets.

- Scalability: Consider whether the technique can handle increasing amounts of data efficiently.

Interpretability requirements

The level of interpretability required for your model is another crucial factor:

- Black-box vs. white-box models: Some techniques, like deep neural networks, are considered “black-box” models, making it challenging to explain their decision-making process. Others, like decision trees, are more interpretable.

- Regulatory requirements: In some industries, such as healthcare or finance, model interpretability may be a legal requirement.

- Stakeholder needs: Consider whether your stakeholders need to understand how the model arrives at its predictions.

Pros and cons of different techniques

Let’s examine some popular machine learning techniques and their trade-offs:

- Linear Regression

  - Pros: Simple, interpretable, fast to train

  - Cons: Assumes linear relationships, sensitive to outliers

- Decision Trees

  - Pros: Highly interpretable, handles non-linear relationships

  - Cons: Prone to overfitting, can be unstable (small changes in the data can produce a very different tree)

- Random Forests

  - Pros: Handles non-linear relationships, much less prone to overfitting than a single decision tree

  - Cons: Less interpretable than individual decision trees, can be computationally intensive

- Support Vector Machines (SVM)

  - Pros: Effective in high-dimensional spaces, versatile (different kernel functions)

  - Cons: Kernel SVMs scale poorly to very large datasets, challenging to interpret

- Neural Networks

  - Pros: Capable of learning complex patterns, versatile across various data types

  - Cons: Require large amounts of data, computationally intensive, often lack interpretability


Common pitfalls and how to avoid them

When implementing machine learning techniques, be aware of these common pitfalls:

- Overfitting

  - Pitfall: Model performs well on training data but poorly on new, unseen data.

  - Avoidance: Use regularization techniques, cross-validation, and ensure sufficient training data.

- Underfitting

  - Pitfall: Model is too simple to capture the underlying patterns in the data.

  - Avoidance: Increase model complexity, add more relevant features, or try more sophisticated algorithms.

- Ignoring data quality

  - Pitfall: Using poor quality or biased data leads to unreliable models.

  - Avoidance: Invest time in data cleaning, preprocessing, and understanding potential biases in your dataset.

- Neglecting feature engineering

  - Pitfall: Failing to create or select relevant features can limit model performance.

  - Avoidance: Spend time on feature engineering and selection, leveraging domain expertise when possible.

- Improper handling of imbalanced datasets

  - Pitfall: Models may perform poorly on minority classes in imbalanced datasets.

  - Avoidance: Use techniques like oversampling, undersampling, or synthetic data generation (e.g., SMOTE).
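Random oversampling, the simplest of these techniques, duplicates minority-class rows until the classes are balanced. The sketch below is a minimal NumPy version with a hypothetical helper name; the imbalanced-learn package provides maintained implementations such as `RandomOverSampler` and `SMOTE`.

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Duplicate rows of smaller classes at random until every class matches the largest."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    indices = []
    for cls, count in zip(classes, counts):
        idx = np.flatnonzero(y == cls)
        extra = rng.choice(idx, size=target - count, replace=True)  # sample with replacement
        indices.append(np.concatenate([idx, extra]))
    indices = rng.permutation(np.concatenate(indices))  # shuffle the combined rows
    return X[indices], y[indices]

X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)  # imbalanced: 8 vs 2
X_res, y_res = random_oversample(X, y)
print(np.unique(y_res, return_counts=True))  # both classes now have 8 samples
```

Apply resampling only to the training split, after separating validation and test data; otherwise duplicated rows can appear on both sides of the split and inflate evaluation scores.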

To help you navigate these pitfalls, consider using this checklist:

Machine Learning Implementation Checklist

- Ensure data quality and representativeness

- Perform thorough feature engineering

- Select appropriate model(s) for the problem

- Implement robust cross-validation strategy

- Conduct hyperparameter tuning

- Choose relevant performance metrics

- Consider model interpretability requirements

- Plan for model deployment and monitoring

By carefully considering these factors, understanding the trade-offs between different techniques, and avoiding common pitfalls, you can make informed decisions when choosing and implementing machine learning techniques for your projects.


In the next section, we’ll delve into the practical aspects of implementing machine learning techniques, exploring popular libraries and frameworks, and walking through the steps of a typical machine learning project.

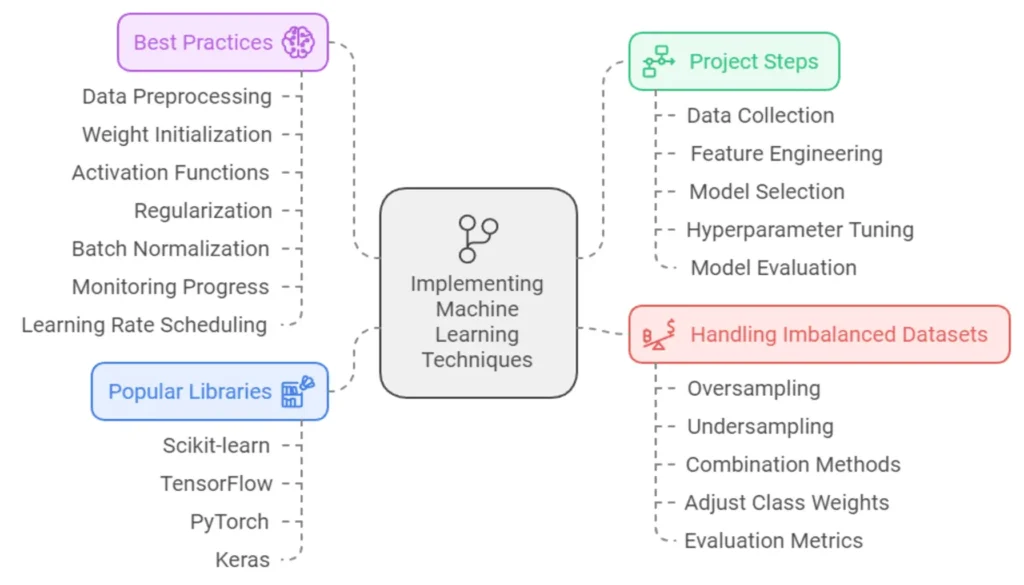

Implementing Machine Learning Techniques

Implementing machine learning techniques effectively requires a combination of the right tools, a structured approach, and best practices. In this section, we’ll explore the popular libraries and frameworks used in machine learning, walk through the steps of a typical machine learning project, and discuss some crucial considerations for successful implementation.

Popular machine learning libraries and frameworks

The field of machine learning is supported by a rich ecosystem of libraries and frameworks that simplify the implementation process. Let’s examine some of the most widely used tools:

Scikit-learn

Scikit-learn is a versatile and user-friendly machine learning library for Python. It provides a wide range of algorithms for classification, regression, clustering, and dimensionality reduction.

Key features:

- Simple and efficient tools for data mining and data analysis

- Accessible to everybody, and reusable in various contexts

- Built on NumPy, SciPy, and matplotlib

- Open source, commercially usable – BSD license

TensorFlow

TensorFlow, developed by Google, is an open-source library for numerical computation and large-scale machine learning. It’s particularly popular for deep learning projects.

Key features:

- Flexible ecosystem of tools, libraries and community resources

- Easily build and deploy machine learning powered applications

- Robust production deployment on servers, mobile and edge devices, and the web

- Powerful experimentation for research

PyTorch

PyTorch, originally developed by Facebook’s (now Meta’s) AI Research lab and now governed by the PyTorch Foundation, is known for its flexibility and dynamic computational graphs, making it popular among researchers.

Key features:

- Production-ready

- Distributed training

- Rich ecosystem of tools and libraries

- Cloud support

Keras

Keras is a high-level neural networks API written in Python. Early versions could run on top of TensorFlow, CNTK, or Theano; Keras 3 supports TensorFlow, JAX, and PyTorch as backends.

Key features:

- User-friendly

- Modular and composable

- Easy to extend

- Python-based

Here’s a comparison table of these popular libraries:

| Library | Best For | Learning Curve | Performance | Community Support |
|---|---|---|---|---|
| Scikit-learn | Traditional ML algorithms | Low | Good for small to medium datasets | Large |
| TensorFlow | Deep learning, production deployment | Medium to High | Excellent for large datasets | Very Large |
| PyTorch | Research, dynamic neural networks | Medium | Excellent for large datasets | Large and Growing |
| Keras | Quick prototyping, beginner-friendly | Low | Good, runs on top of other backends | Large |

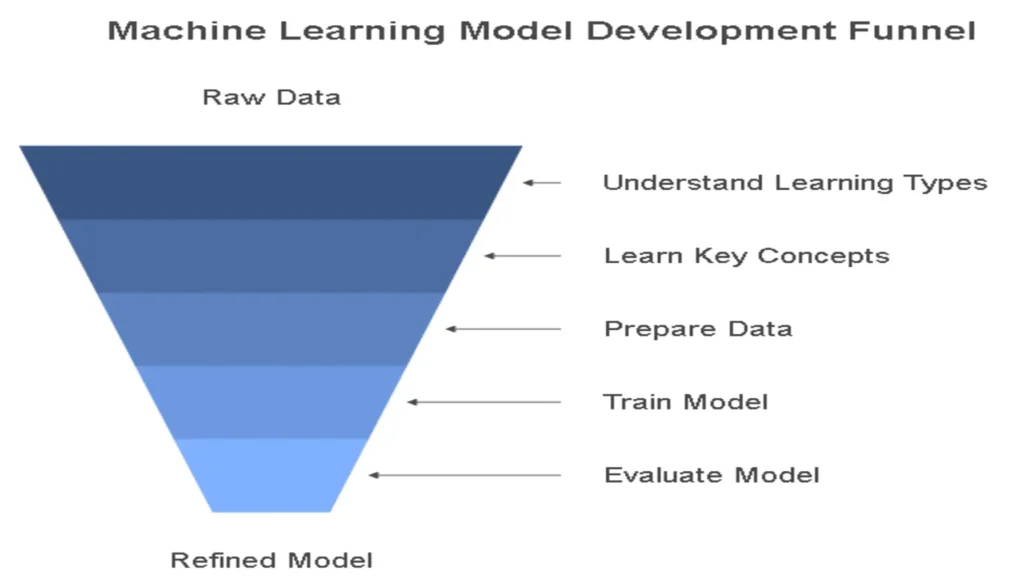

Steps in a typical machine learning project

A successful machine learning project typically follows a structured workflow. Let’s break down the key steps:

Data collection and preprocessing

The first step in any machine learning project is gathering and preparing your data. This involves:

- Collecting data from various sources

- Cleaning the data (handling missing values, removing duplicates)

- Exploratory Data Analysis (EDA) to understand the dataset

- Data normalization or standardization

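```python
import pandas as pd
import numpy as np
from sklearn.preprocessing import StandardScaler

# Load data
data = pd.read_csv('dataset.csv')

# Handle missing values: fill numeric columns with each column's mean
numeric_cols = data.select_dtypes(include=np.number).columns
data[numeric_cols] = data[numeric_cols].fillna(data[numeric_cols].mean())

# Standardize numeric features to zero mean and unit variance
# (in a full project, fit the scaler on the training split only)
scaler = StandardScaler()
data_normalized = data.copy()
data_normalized[numeric_cols] = scaler.fit_transform(data[numeric_cols])
```

Feature engineering and selection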

Feature engineering involves creating new features or transforming existing ones to improve model performance. Feature selection is the process of choosing the most relevant features for your model.

- Create interaction features

- Perform dimensionality reduction (e.g., PCA)

- Select features based on correlation or importance scores

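```python
from sklearn.feature_selection import SelectKBest, f_classif

# X: feature matrix, y: class labels (assumed already prepared)
# Keep the 10 features with the highest ANOVA F-scores
selector = SelectKBest(f_classif, k=10)
X_selected = selector.fit_transform(X, y)
```

Model selection and training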

Choose an appropriate model based on your problem type (classification, regression, clustering) and train it on your prepared dataset.

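```python
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier

# Hold out 20% of the data for testing.
# To avoid data leakage, feature selection and scaling should ideally be
# fit on the training split only (e.g., inside a scikit-learn Pipeline).
X_train, X_test, y_train, y_test = train_test_split(X_selected, y, test_size=0.2, random_state=42)

# Train a random forest with 100 trees
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)
```

Hyperparameter tuning (including AutoML)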

Optimize your model’s performance by tuning its hyperparameters. This can be done manually or using automated methods.

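```python
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier

# Candidate values for each hyperparameter; all 9 combinations are tried
param_grid = {
    'n_estimators': [100, 200, 300],
    'max_depth': [5, 10, None]
}

# Score each combination with 5-fold cross-validation on the training set
grid_search = GridSearchCV(RandomForestClassifier(random_state=42), param_grid, cv=5)
grid_search.fit(X_train, y_train)
best_model = grid_search.best_estimator_
```

Model evaluation and interpretation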

Assess your model’s performance using appropriate metrics and interpret its predictions.

```python
from sklearn.metrics import accuracy_score, classification_report

# Evaluate the tuned model on the held-out test set
y_pred = best_model.predict(X_test)
print(f"Accuracy: {accuracy_score(y_test, y_pred):.3f}")
print(classification_report(y_test, y_pred))
```

Best practices for training neural networks

When working with neural networks, consider these best practices:

- Data preprocessing: Normalize inputs to have zero mean and unit variance.

- Weight initialization: Use schemes such as Xavier/Glorot initialization (suited to tanh or sigmoid activations) or He initialization (suited to ReLU).

- Choose appropriate activation functions: ReLU is often a good default choice.

- Implement regularization: Use techniques like dropout or L2 regularization to prevent overfitting.

- Use batch normalization: This can speed up training and improve generalization.

- Monitor training progress: Use validation sets and early stopping to prevent overfitting.

- Learning rate scheduling: Gradually decrease the learning rate during training.
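Early stopping, from the monitoring item above, can be expressed independently of any framework. The sketch below assumes the training loop reports one validation loss per epoch. The class name `EarlyStopping` and its `patience`/`min_delta` parameters are illustrative here; this is not the API of Keras or any other library, although Keras and PyTorch Lightning ship their own early-stopping callbacks.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # Meaningful improvement: remember it and reset the counter
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


if __name__ == "__main__":
    # Hypothetical validation losses: improve, then plateau (a sign of overfitting)
    losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.52, 0.53, 0.54]
    stopper = EarlyStopping(patience=3)
    for epoch, loss in enumerate(losses):
        if stopper.step(epoch, loss):
            print(f"Stopping at epoch {epoch}; best epoch was {stopper.best_epoch}")
            break
```

A common refinement is to save the model weights at `best_epoch` and restore them after stopping.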

Handling imbalanced datasets

Imbalanced datasets, where one class significantly outweighs the others, can lead to biased models. Here are some techniques to address this issue:

- Oversampling: Increase the number of minority class samples (e.g., SMOTE).

- Undersampling: Reduce the number of majority class samples.

- Combination methods: Use techniques like SMOTEENN or SMOTETomek.

- Adjust class weights: Give more importance to the minority class during training.

- Use appropriate evaluation metrics: Accuracy can be misleading; consider precision, recall, and F1-score.
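Adjusting class weights, one of the options listed above, usually means weighting each class inversely to its frequency. The sketch below uses the formula scikit-learn documents for `class_weight='balanced'`, n_samples / (n_classes * count_of_class). The helper name `balanced_class_weights` is hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


if __name__ == "__main__":
    # 90 negatives and 10 positives: a 9:1 imbalance
    y = [0] * 90 + [1] * 10
    print(balanced_class_weights(y))
```

Here the minority class gets weight 5.0 and the majority class about 0.556, so each misclassified minority sample costs nine times as much in the loss, offsetting the 9:1 imbalance.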

The following pipeline combines SMOTE oversampling with a Random Forest classifier using the imbalanced-learn library:

```python
from imblearn.over_sampling import SMOTE
from imblearn.pipeline import Pipeline
from sklearn.ensemble import RandomForestClassifier

# SMOTE oversampling followed by a Random Forest classifier.
# imblearn's Pipeline applies SMOTE only during fit, so test data is never resampled.
pipeline = Pipeline([
    ('smote', SMOTE(random_state=42)),
    ('classifier', RandomForestClassifier(random_state=42))
])
pipeline.fit(X_train, y_train)
```

Implementing machine learning techniques effectively requires a combination of technical skills, domain knowledge, and best practices. By following these guidelines and continuously experimenting, you can develop robust and accurate machine learning models that solve real-world problems.


Real-world Applications of Machine Learning Techniques

Machine learning techniques have permeated virtually every industry, revolutionizing processes and unlocking new possibilities. In this section, we’ll explore some of the most impactful applications across various sectors, demonstrating the versatility and power of these techniques.

Healthcare and Biomedical Research

The healthcare industry has been profoundly transformed by machine learning techniques, leading to improved patient care, more accurate diagnoses, and groundbreaking research discoveries.

Disease Diagnosis and Prediction

Machine learning algorithms, particularly deep learning models, have shown remarkable accuracy in diagnosing diseases from medical images. For instance, convolutional neural networks (CNNs) are being used to detect:

- Skin cancer from dermatological images

- Diabetic retinopathy from retinal scans

- Pneumonia from chest X-rays

In several published studies, such models have matched or exceeded the performance of human specialists on specific diagnostic tasks, providing valuable second opinions and supporting earlier detection of disease.

Drug Discovery and Development

The pharmaceutical industry is leveraging machine learning to accelerate the drug discovery process. Techniques in use include:

- Reinforcement learning for molecular design

- Generative models for creating novel drug candidates

- Natural language processing for mining scientific literature

These approaches aim to reduce the time and cost associated with bringing new drugs to market.

Personalized Medicine

By analyzing vast amounts of patient data, including genetic information, lifestyle factors, and treatment outcomes, machine learning models are enabling more personalized treatment plans. This tailored approach leads to:

- More effective treatments

- Reduced side effects

- Improved patient outcomes

Case Study: IBM Watson for Oncology

IBM’s Watson for Oncology uses natural language processing and machine learning to analyze patient medical records, research papers, and clinical trials. It then provides evidence-based treatment recommendations to oncologists, helping them make more informed decisions.

In a study at the Manipal Comprehensive Cancer Center in India, Watson’s treatment recommendations agreed with those of the multidisciplinary tumor board in 93% of breast cancer cases.

Finance and Fintech

The financial sector has been quick to adopt machine learning techniques, using them to enhance decision-making, improve risk assessment, and provide personalized services.

Algorithmic Trading

Machine learning models analyze vast amounts of market data in real-time to make trading decisions. These algorithms can:

- Identify trading opportunities

- Optimize portfolio allocations

- Execute trades at high speeds

Fraud Detection

By analyzing patterns in transaction data, machine learning models can identify potentially fraudulent activities with high accuracy. This includes:

- Credit card fraud

- Insurance claim fraud

- Money laundering

Credit Scoring

Traditional credit scoring models are being enhanced or replaced by machine learning techniques that can analyze a broader range of data points, leading to:

- More accurate risk assessments

- Financial inclusion for underserved populations

- Faster loan approval processes

Personalized Banking

Machine learning is powering the next generation of personalized banking services, including:

- Chatbots for customer service

- Robo-advisors for investment management

- Customized financial product recommendations

| Traditional Finance | ML-Powered Finance |
|---|---|
| Manual fraud detection | Automated, real-time fraud detection |
| Limited credit scoring models | Comprehensive, data-driven credit scoring |
| Rule-based trading | Adaptive, AI-driven trading strategies |
| Generic financial advice | Personalized financial recommendations |

E-commerce and Retail

Machine learning techniques are reshaping the retail landscape, both online and offline, by personalizing customer experiences and optimizing operations.

Recommendation Systems

One of the most visible applications of machine learning in e-commerce is product recommendation. These systems use techniques such as:

- Collaborative filtering

- Content-based filtering

- Hybrid approaches

These systems suggest products that are likely to interest individual customers, which can increase sales and customer satisfaction.
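Item-based collaborative filtering recommends items whose rating patterns resemble those of items the user already liked. The sketch below uses cosine similarity between item rating vectors (unrated entries treated as zero) over a tiny hypothetical ratings dictionary. Real systems add rating normalization, implicit feedback, and approximate nearest-neighbor search.

```python
import math
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}

# Hypothetical ratings on a 1-5 scale
RATINGS: Ratings = {
    "alice": {"laptop": 5, "mouse": 4, "keyboard": 4},
    "bob": {"laptop": 4, "mouse": 5, "monitor": 1},
    "carol": {"laptop": 5, "keyboard": 5, "monitor": 2},
    "dave": {"mouse": 5, "keyboard": 2},
}


def item_cosine_similarity(ratings: Ratings, a: str, b: str) -> float:
    """Cosine similarity of two items' rating vectors; missing ratings count as 0."""
    dot = sum(r[a] * r[b] for r in ratings.values() if a in r and b in r)
    norm_a = math.sqrt(sum(r[a] ** 2 for r in ratings.values() if a in r))
    norm_b = math.sqrt(sum(r[b] ** 2 for r in ratings.values() if b in r))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(ratings: Ratings, user: str, top_n: int = 2) -> List[str]:
    """Rank unrated items by a similarity-weighted average of the user's ratings."""
    rated = ratings[user]
    all_items = {item for r in ratings.values() for item in r}
    scores = {}
    for candidate in all_items - set(rated):
        sims = {item: item_cosine_similarity(ratings, candidate, item) for item in rated}
        total_sim = sum(abs(s) for s in sims.values())
        if total_sim > 0:
            scores[candidate] = sum(sims[i] * rated[i] for i in rated) / total_sim
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    print(recommend(RATINGS, "dave"))
```

Dave rated the mouse highly, and the laptop is rated similarly to the mouse by other users, so the laptop ranks above the monitor.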

Demand Forecasting

By analyzing historical sales data, seasonal trends, and external factors, machine learning models can accurately predict future demand. This enables:

- Optimized inventory management

- Reduced waste

- Improved supply chain efficiency

Price Optimization

Dynamic pricing models powered by machine learning can adjust prices in real-time based on factors such as:

- Competitor pricing

- Demand fluctuations

- Customer segments

This can help increase revenue and improve competitiveness.

Customer Segmentation

Machine learning techniques like clustering can identify distinct customer segments based on behavior, preferences, and demographics. This enables:

- Targeted marketing campaigns

- Personalized promotions

- Improved customer retention strategies
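Clustering for segmentation can be sketched with k-means (Lloyd’s algorithm), which alternates between assigning each customer to the nearest centroid and moving each centroid to the mean of its members. The example assumes each customer is already summarized as a numeric feature vector, here two hypothetical features, and uses a fixed seed for reproducibility. In practice you would scale the features first and use a library implementation such as scikit-learn’s `KMeans`.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, n_iter: int = 100,
           seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Cluster points into k groups; return (centroids, cluster label per point)."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(list(points), k)]  # init from data
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: nearest centroid by Euclidean distance
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical customers: (annual spend in $1,000s, visits per month)
    customers = [(1.0, 1.0), (1.5, 2.0), (1.2, 1.4), (8.0, 8.0), (9.0, 8.5), (8.5, 9.5)]
    centroids, labels = kmeans(customers, k=2)
    print(labels)
```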

Autonomous Vehicles and Robotics

Machine learning is at the heart of the autonomous vehicle revolution and the advancement of robotics.

Self-driving Cars

Autonomous vehicles rely heavily on machine learning techniques for:

- Object detection and classification

- Path planning and navigation

- Decision-making in complex traffic scenarios

These systems often combine supervised learning (for object recognition) with approaches such as reinforcement learning (for decision-making) to navigate safely in diverse environments.

Industrial Robotics

In manufacturing and logistics, machine learning is enhancing robotic systems by enabling:

- Adaptive control systems

- Predictive maintenance

- Collaborative robots (cobots) that can safely work alongside humans

Drone Technology

Machine learning is powering advancements in drone technology, including:

- Autonomous navigation

- Object tracking and following

- Aerial imagery analysis for applications like agriculture and search-and-rescue

Social Media and Content Recommendation

Social media platforms and content providers heavily rely on machine learning to keep users engaged and deliver personalized experiences.

News Feed Algorithms

Platforms like Facebook and LinkedIn use sophisticated machine learning models to determine what content to show in a user’s feed. These algorithms consider factors such as:

- User interactions

- Content relevance

- Post recency

- Social connections

Content Moderation

Machine learning models, particularly in natural language processing and computer vision, are used to automatically detect and flag inappropriate content, including:

- Hate speech

- Violent imagery

- Fake news and misinformation

Trend Detection

By analyzing vast amounts of user-generated content, machine learning algorithms can identify emerging trends and viral content in real-time.

Cybersecurity and Fraud Detection

As cyber threats become more sophisticated, machine learning techniques are playing a crucial role in defending against attacks and detecting fraudulent activities.

Intrusion Detection Systems

Machine learning models can analyze network traffic patterns to identify potential security breaches, including:

- Zero-day attacks

- Distributed Denial of Service (DDoS) attacks

- Malware infections

Phishing Detection

Natural language processing and machine learning techniques are used to identify phishing emails and websites with high accuracy.

Behavioral Biometrics

Advanced authentication systems use machine learning to analyze user behavior patterns, such as:

- Typing rhythm

- Mouse movements

- Device handling

This provides an additional layer of security beyond traditional passwords.

Environmental Monitoring and Climate Change

Machine learning techniques are being applied to address some of the world’s most pressing environmental challenges.

Climate Modeling

Complex climate models use machine learning to:

- Improve prediction accuracy

- Analyze historical climate data

- Simulate future climate scenarios

Biodiversity Monitoring

Machine learning is used to process and analyze data from various sources, including:

- Satellite imagery

- Camera traps

- Audio recordings

This helps in tracking species populations, detecting deforestation, and monitoring ecosystem health.

Energy Optimization

In the renewable energy sector, machine learning is used for:

- Predicting energy production from solar and wind farms

- Optimizing energy distribution in smart grids

- Improving energy efficiency in buildings

“Machine learning is not just transforming industries; it’s helping us tackle some of humanity’s greatest challenges, from climate change to healthcare. The applications we’re seeing today are just the tip of the iceberg.”

– Dr. Andrew Ng, Co-founder of Coursera and Former Head of Google Brain

These real-world applications demonstrate the transformative power of machine learning techniques across diverse sectors. As these technologies continue to evolve and new techniques emerge, we can expect even more innovative and impactful applications in the future.


In the next section, we’ll explore the ethical considerations surrounding the widespread adoption of machine learning techniques, including issues of bias, privacy, and transparency.

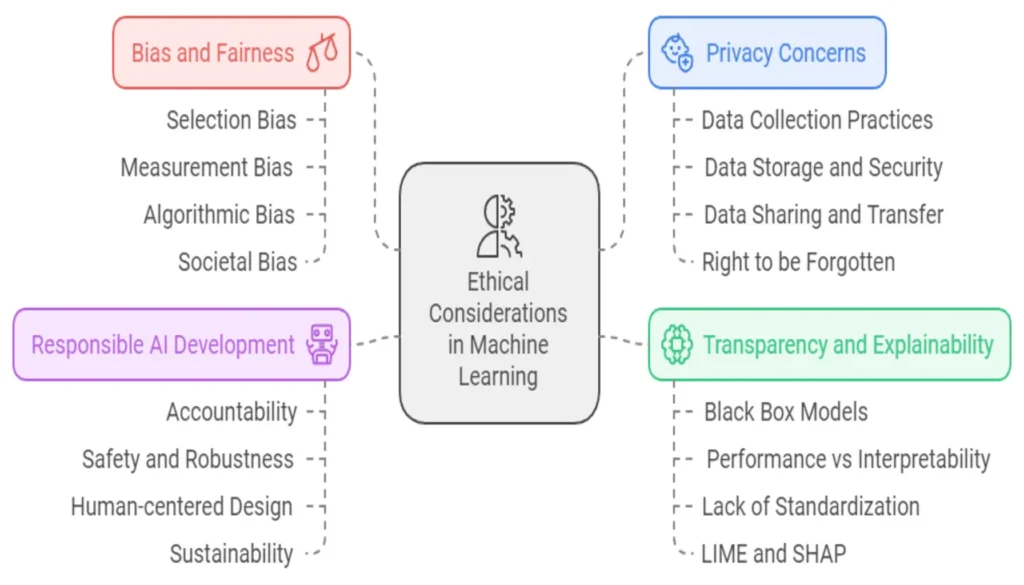

Ethical Considerations in Machine Learning

As machine learning techniques become increasingly integrated into our daily lives, it’s crucial to address the ethical implications of these powerful technologies. This section explores the key ethical considerations in machine learning, focusing on bias and fairness, privacy concerns, transparency, and responsible AI development.

Bias and fairness in machine learning models

Machine learning models are only as good as the data they’re trained on. When this data contains inherent biases, the resulting models can perpetuate and even amplify these biases, leading to unfair or discriminatory outcomes.

Types of bias in machine learning:

- Selection bias: When the data used to train a model doesn’t accurately represent the population it’s intended to serve.

- Measurement bias: Occurs when the features or labels in the training data are systematically mismeasured or misrepresented.

- Algorithmic bias: Arises from the choice of algorithm or model architecture, which may be inherently biased towards certain outcomes.

- Societal bias: Reflects existing societal prejudices and stereotypes present in the training data.

To address these issues, researchers and practitioners are developing various techniques:

| Technique | Description |
|---|---|
| Fairness constraints | Incorporating fairness metrics directly into the model’s objective function |
| Data preprocessing | Techniques like resampling or reweighting to balance representation in training data |
| Post-processing methods | Adjusting model outputs to ensure fairness across different groups |
| Adversarial debiasing | Training models to be invariant to sensitive attributes |

It’s important to note that achieving perfect fairness is often impossible, as different fairness metrics can be mutually exclusive. The goal is to find an acceptable balance that minimizes harm and promotes equitable outcomes.
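To make one fairness metric concrete, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The predictions and group labels are hypothetical; libraries such as Fairlearn provide vetted implementations of this and other metrics.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(y_pred: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of positive (1) predictions within each group."""
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, g in zip(y_pred, groups):
        totals[g] += 1
        positives[g] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest minus smallest selection rate across groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval predictions for applicants in groups "A" and "B"
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
    print(selection_rates(y_pred, groups))
    print(demographic_parity_difference(y_pred, groups))
```

This metric ignores the true labels. A criterion such as equalized odds, which compares error rates across groups, can disagree with it on the same predictions, which is one concrete way fairness metrics come into conflict.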


Privacy concerns and data protection

As machine learning models require vast amounts of data to train effectively, concerns about data privacy and protection have come to the forefront. Key issues include:

- Data collection practices: Ensuring informed consent and transparency in data collection.

- Data storage and security: Protecting sensitive information from breaches and unauthorized access.

- Data sharing and transfer: Maintaining privacy when sharing data between organizations or across borders.

- Right to be forgotten: Addressing individuals’ requests for data deletion and model unlearning.

To address these concerns, several techniques and frameworks have been developed:

- Differential privacy: A mathematical framework that adds calibrated noise to data or to computed results (such as counts or model updates), limiting how much can be learned about any specific individual.

- Federated learning: Allows models to be trained on decentralized data without sharing the raw data itself.

- Homomorphic encryption: Enables computations on encrypted data, preserving privacy throughout the machine learning pipeline.

- Secure multi-party computation: Allows multiple parties to jointly compute a function over their inputs while keeping those inputs private.
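Differential privacy is often introduced through the Laplace mechanism: to release a numeric query result, add noise drawn from a Laplace distribution with scale sensitivity / epsilon. The sketch below applies it to a counting query, whose sensitivity is 1 because adding or removing one person changes a count by at most 1. The function names and data are hypothetical, and production systems should rely on a vetted differential-privacy library rather than hand-rolled noise, since naive floating-point sampling has known weaknesses.

```python
import random
from typing import Callable, Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Sequence, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 29, 52, 61, 38, 45]  # hypothetical records
    rng = random.Random(42)
    # Smaller epsilon -> more noise -> stronger privacy guarantee
    for eps in (0.1, 1.0, 10.0):
        print(eps, round(private_count(ages, lambda a: a > 40, eps, rng), 2))
```

Each released answer is noisy, but the noise is unbiased, so aggregate statistics remain useful while any single person’s presence is masked.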

Transparency and explainability of models

As machine learning models become more complex, ensuring their transparency and explainability becomes increasingly challenging. This is particularly crucial in high-stakes domains like healthcare, finance, and criminal justice, where model decisions can have significant impacts on individuals’ lives.

Challenges in model explainability:

- Black box models: Many advanced machine learning techniques, particularly deep learning models, are inherently opaque.

- Trade-off between performance and interpretability: Often, more complex (and less interpretable) models perform better on given tasks.

- Lack of standardization: There’s no universal agreement on what constitutes a sufficient explanation for a model’s decision.

Approaches to improve model explainability:

- LIME (Local Interpretable Model-agnostic Explanations): Explains individual predictions by approximating the model locally with an interpretable one.

- SHAP (SHapley Additive exPlanations): Uses game theory concepts to attribute feature importance for individual predictions.

- Attention mechanisms: In neural networks, attention layers can provide insights into which parts of the input the model focuses on.

- Rule extraction: Deriving interpretable rules from complex models that approximate their behavior.
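SHAP builds on Shapley values from cooperative game theory. For a model with only a few features they can be computed exactly by averaging each feature’s marginal contribution over all coalitions of the other features. The sketch below does this, assuming that features outside a coalition are replaced by a fixed baseline value, which is only one of several possible conventions. The SHAP library uses far more efficient approximations and model-specific algorithms; this brute-force version is exponential in the number of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[Sequence[float]], float], x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values explaining model(x) - model(baseline).

    Features outside a coalition are set to their baseline value.
    Cost grows as 2**n_features, so this is only practical for small n.
    """
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


if __name__ == "__main__":
    # Hypothetical model with an interaction between features 0 and 1
    def model(z):
        return 2 * z[0] + z[1] + 3 * z[0] * z[1] - z[2]

    x, base = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phis = shapley_values(model, x, base)
    print(phis, sum(phis), model(x) - model(base))
```

The values sum to model(x) - model(baseline), and the interaction term’s contribution is split evenly between the two features involved.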


Responsible AI development and deployment

Responsible AI development encompasses all the previously discussed ethical considerations and extends to the broader impact of AI systems on society. Key principles for responsible AI include:

- Accountability: Clearly defining who is responsible for AI systems and their decisions.

- Safety and robustness: Ensuring AI systems perform reliably and safely, even in unforeseen circumstances.

- Human-centered design: Developing AI systems that augment and empower human capabilities rather than replace them.

- Sustainability: Considering the environmental impact of AI, particularly the energy consumption of large models.

To promote responsible AI development and deployment, various organizations and governments have proposed ethical guidelines and frameworks. Here’s a comparison of some prominent ones:

| Framework | Key Principles |
|---|---|
| IEEE Ethically Aligned Design | Human Rights, Well-being, Data Agency, Effectiveness, Transparency, Accountability |
| EU Ethics Guidelines for Trustworthy AI | Human Agency, Technical Robustness, Privacy, Transparency, Diversity, Societal Well-being, Accountability |
| OECD AI Principles | Inclusive growth, Sustainable development, Human-centered values, Fairness, Transparency, Robustness, Accountability |

Implementing these principles requires a multidisciplinary approach, involving not just data scientists and machine learning engineers, but also ethicists, legal experts, policymakers, and domain specialists.

As we continue to advance machine learning techniques, it’s crucial to keep these ethical considerations at the forefront of our development and deployment processes. By doing so, we can harness the power of machine learning to create systems that are not only technically proficient but also fair, transparent, and beneficial to society as a whole.

In conclusion, addressing ethical considerations in machine learning is an ongoing process that requires vigilance, collaboration, and a commitment to responsible innovation. As practitioners and researchers in this field, we have a duty to ensure that our machine learning techniques are developed and deployed in ways that respect individual rights, promote fairness, and contribute positively to society.

Future Trends in Machine Learning Techniques

As we stand on the cusp of a new era in artificial intelligence, several exciting trends are shaping the future of machine learning techniques. These emerging approaches promise to make AI more accessible, efficient, and capable of solving increasingly complex problems. Let’s explore these cutting-edge developments that are set to redefine the landscape of machine learning.

Automated Machine Learning (AutoML)

Automated Machine Learning, or AutoML, is revolutionizing the way we approach machine learning projects. This technology aims to automate the end-to-end process of applying machine learning to real-world problems, making AI more accessible to non-experts and increasing the efficiency of data scientists.

Key features of AutoML:

- Automated feature engineering

- Algorithm selection and hyperparameter tuning

- Model architecture search

- Automated model deployment and monitoring

AutoML is not just a time-saver; it’s democratizing machine learning, allowing organizations without extensive data science teams to leverage the power of AI. This fits a broader shift toward accessible tooling: a Gartner forecast predicted that by 2025, 70% of new applications developed by organizations would use low-code or no-code technologies.

Benefits of AutoML

- Reduced time-to-model deployment

- Improved model performance

- Democratization of machine learning

- Consistent and reproducible results
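At its simplest, the hyperparameter-tuning part of AutoML is a search loop: sample a configuration, score it, keep the best. The sketch below uses random search over a hypothetical search space with a stand-in objective function. In a real system the objective would train and cross-validate a model, and tools such as Optuna or auto-sklearn use smarter strategies than uniform sampling.

```python
import math
import random
from typing import Any, Callable, Dict, Optional, Tuple

Config = Dict[str, Any]


def random_search(objective: Callable[[Config], float],
                  space: Dict[str, Callable[[random.Random], Any]],
                  n_trials: int = 50, seed: int = 0) -> Tuple[Optional[Config], float]:
    """Return the best (config, score) found; higher scores are better."""
    rng = random.Random(seed)
    best_config, best_score = None, -math.inf
    for _ in range(n_trials):
        config = {name: sample(rng) for name, sample in space.items()}
        score = objective(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical search space: each entry draws one value for a hyperparameter
SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform
    "max_depth": lambda rng: rng.choice([3, 5, 10, None]),
}


def toy_objective(config: Config) -> float:
    """Stand-in for validation accuracy; peaks near lr=0.01 and max_depth=5."""
    lr_penalty = (math.log10(config["learning_rate"]) + 2) ** 2
    depth_penalty = 0.0 if config["max_depth"] == 5 else 0.1
    return 1.0 - 0.1 * lr_penalty - depth_penalty


if __name__ == "__main__":
    best, score = random_search(toy_objective, SPACE, n_trials=100)
    print(best, round(score, 3))
```

Sampling the learning rate on a log scale is a deliberate choice: plausible values span several orders of magnitude, and uniform sampling would waste most trials near the top of the range.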

Edge AI and TinyML

As the Internet of Things (IoT) continues to expand, there’s a growing need for AI capabilities on edge devices. Edge AI and TinyML are addressing this challenge by bringing machine learning to resource-constrained devices.

Edge AI refers to AI algorithms processed locally on a hardware device, while TinyML focuses on running machine learning models on microcontrollers and other devices with extremely limited computing power and memory.

Key advantages of Edge AI and TinyML:

- Reduced latency: Real-time processing without cloud communication delays

- Enhanced privacy: Data stays on the device, reducing privacy concerns

- Lower power consumption: Efficient processing for battery-powered devices

- Offline functionality: AI capabilities even without internet connectivity
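One common way to fit models onto microcontrollers is to store weights as 8-bit integers instead of 32-bit floats, a 4x reduction in memory. The sketch below shows symmetric per-tensor int8 quantization of hypothetical weights. It illustrates the idea only; toolchains such as TensorFlow Lite use more elaborate schemes (per-channel scales, zero points, quantized activations).

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map [-max|w|, max|w|] onto the integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.88, -0.5]  # hypothetical layer weights
    q, scale = quantize_int8(w)
    w_hat = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(q, scale, max_err)  # rounding error is at most about scale / 2 per weight
```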

Applications of Edge AI and TinyML

| Domain | Examples |
|---|---|
| Consumer Electronics | Smart speakers, wearables |
| Industrial IoT | Predictive maintenance, quality control |
| Healthcare | Remote patient monitoring, fall detection |
| Agriculture | Crop disease detection, precision farming |

Neuro-symbolic AI

Neuro-symbolic AI represents a promising fusion of neural networks and symbolic reasoning, aiming to combine the learning capabilities of deep learning with the logical reasoning of symbolic AI.

This hybrid approach addresses some of the limitations of pure deep learning systems, such as their need for large amounts of data and lack of interpretability. By incorporating symbolic knowledge, neuro-symbolic systems can:

- Reason with fewer examples

- Provide more interpretable decisions

- Integrate domain knowledge more effectively

- Handle complex, multi-step reasoning tasks

Researchers at MIT-IBM Watson AI Lab are at the forefront of developing neuro-symbolic AI systems, working on applications ranging from visual question answering to robotic task planning.

Continual and Lifelong Learning

Traditional machine learning models often struggle with “catastrophic forgetting” – the tendency to forget previously learned information upon learning new tasks. Continual and lifelong learning techniques aim to create AI systems that can accumulate knowledge over time, much like humans do.

Key aspects of continual learning:

- Task-incremental learning: Learning new tasks while retaining knowledge of previous ones

- Domain-incremental learning: Adapting to new domains without forgetting old ones

- Class-incremental learning: Adding new classes to classification problems over time

Challenges in Continual Learning

- Balancing stability and plasticity

- Efficient memory management

- Avoiding negative transfer

- Handling concept drift

Advances in continual learning are crucial for developing more adaptive and general-purpose AI systems, particularly in dynamic environments like robotics and personal assistants.
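One family of continual-learning methods, rehearsal (also called experience replay), keeps a small memory of past examples and mixes them into training on new tasks, addressing both forgetting and the memory-management challenge listed above. The sketch below maintains a fixed-size buffer with reservoir sampling, so every example seen so far has an equal chance of being retained. The class and method names are hypothetical.

```python
import random
from typing import Any, List, Optional


class ReservoirReplayBuffer:
    """Fixed-capacity memory where each seen example is kept with equal probability."""

    def __init__(self, capacity: int, seed: Optional[int] = None):
        self.capacity = capacity
        self.items: List[Any] = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, example: Any) -> None:
        self.n_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / n_seen,
            # overwriting a uniformly chosen slot
            j = self.rng.randrange(self.n_seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k: int) -> List[Any]:
        """Draw up to k stored examples to mix into the current training batch."""
        return self.rng.sample(self.items, min(k, len(self.items)))


if __name__ == "__main__":
    buffer = ReservoirReplayBuffer(capacity=100, seed=0)
    # Hypothetical stream: 1,000 examples from task A, then 1,000 from task B
    for i in range(1000):
        buffer.add(("task_A", i))
    for i in range(1000):
        buffer.add(("task_B", i))
    n_a = sum(1 for task, _ in buffer.items if task == "task_A")
    print(f"{n_a} of {len(buffer.items)} buffered examples are from task A")
```

Because roughly half of the buffer still holds task A examples after task B arrives, replaying them during task B training gives the model a reminder of the earlier task.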

AI for Scientific Discovery

The application of machine learning techniques to accelerate scientific discovery is one of the most exciting frontiers in AI research. From drug discovery to materials science, AI is helping scientists explore vast solution spaces and uncover patterns that might be missed by human researchers.

Some notable areas where AI is making significant contributions to scientific discovery include:

- Drug Discovery: AI models are predicting drug-target interactions and designing novel molecules with desired properties.

- Materials Science: Machine learning is accelerating the discovery of new materials with specific characteristics, such as better battery components or more efficient solar cells.

- Astronomy: AI algorithms are helping astronomers detect and classify celestial objects, including the discovery of new exoplanets.

- Climate Science: Machine learning models are improving climate predictions and helping to identify patterns in complex climate data.

A prime example of AI’s potential in scientific discovery is DeepMind’s AlphaFold, which has made groundbreaking progress in protein structure prediction, a longstanding challenge in biology.

Impact of AI on Scientific Research

- Accelerated hypothesis generation

- Enhanced data analysis and pattern recognition

- Optimization of experimental design

- Automated literature review and knowledge synthesis

As these future trends in machine learning techniques continue to evolve, they promise to push the boundaries of what’s possible with AI. From making machine learning more accessible and efficient with AutoML to enabling AI on tiny devices with Edge AI and TinyML, these advancements are set to transform various industries and scientific disciplines.

The fusion of neural and symbolic approaches in neuro-symbolic AI, along with the development of continual learning systems, points towards more robust and adaptable AI systems. Meanwhile, the application of AI to scientific discovery holds the potential to accelerate breakthroughs that could have profound impacts on society.

As we look to the future, it’s clear that machine learning techniques will continue to play a pivotal role in shaping our technological landscape. By staying informed about these trends and their potential applications, businesses and researchers can position themselves at the forefront of the AI revolution.

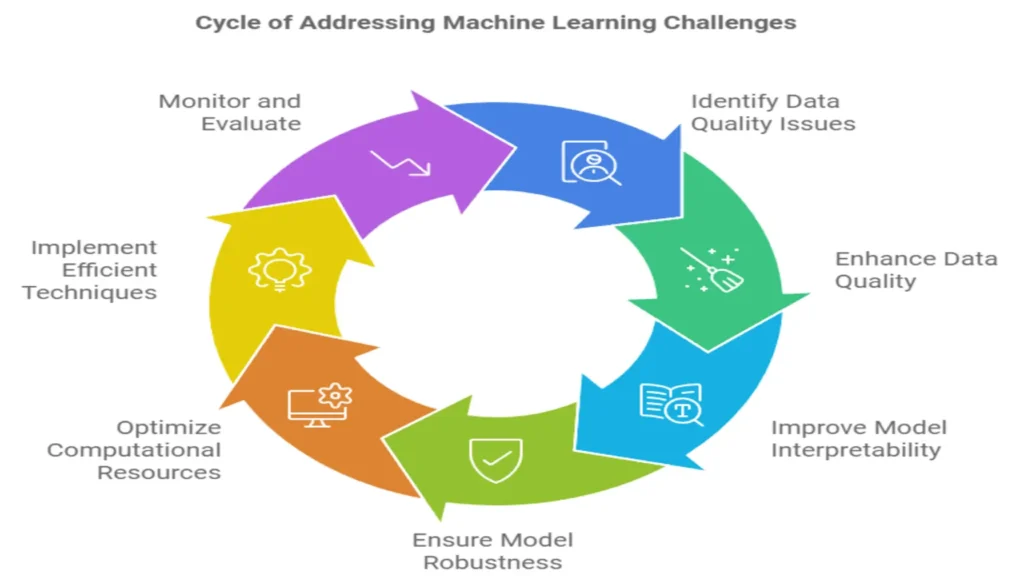

Challenges and Limitations of Current Machine Learning Techniques

While machine learning techniques have made remarkable strides in recent years, they are not without their challenges and limitations. Understanding these constraints is crucial for researchers, practitioners, and decision-makers to effectively leverage machine learning in real-world applications. Let’s delve into some of the most pressing challenges facing the field today.

Data Quality and Quantity Issues

The adage “garbage in, garbage out” holds particularly true in machine learning. The quality and quantity of data significantly impact the performance of machine learning models.

Data Quality Challenges:

- Noise and Errors: Real-world data often contains noise, errors, or inconsistencies that can mislead models.

- Bias: Data may reflect societal biases, leading to biased model outputs.

- Incompleteness: Missing or partial data can skew model predictions.

- Inconsistency: Data collected from multiple sources may have inconsistent formats or definitions.

Data Quantity Challenges:

- Insufficient Data: Some domains lack large, labeled datasets necessary for training complex models.

- Class Imbalance: Certain classes may be underrepresented in the dataset, leading to biased models.

- Overfitting: With limited data, models may memorize training examples rather than learning generalizable patterns.

Both data quality and quantity affect model accuracy: high-quality data generally leads to better performance, especially as the dataset size increases.


Interpretability of Complex Models

As machine learning models become more sophisticated, they often become less interpretable. This “black box” nature of complex models, particularly deep neural networks, poses significant challenges:

- Lack of Transparency: It’s often unclear how a model arrives at its decisions, making it difficult to trust or debug.

- Regulatory Compliance: In regulated industries like healthcare and finance, the inability to explain model decisions can be a significant barrier to adoption.

- Ethical Concerns: When models make important decisions affecting people’s lives, the lack of interpretability raises ethical questions.

Several techniques have been developed to address this challenge:

- LIME (Local Interpretable Model-agnostic Explanations)

- SHAP (SHapley Additive exPlanations)

- Feature Importance Analysis

- Partial Dependence Plots

Feature importance analysis, for example, ranks a model’s input features by how strongly its predictions depend on each one.
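Permutation importance is one model-agnostic way to produce such a ranking. It shuffles one feature column at a time and measures how much the model's error grows. The sketch below assumes a hypothetical model `predict` that uses only the first feature, so the second feature should score zero. It uses mean squared error as the metric and averages over a few random shuffles. scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row with feature j replaced by the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, [predict(row) for row in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical model: uses feature 0 and ignores feature 1.
def predict(row):
    return 3 * row[0]


X = [[i, (7 * i) % 10] for i in range(10)]
y = [3 * row[0] for row in X]
importances = permutation_importance(predict, X, y)
print(importances)  # feature 1 scores 0.0; feature 0 scores higher
```

Because the model never reads feature 1, shuffling that column leaves every prediction unchanged and its importance is exactly zero.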

Generalization and Robustness

The ability of machine learning models to generalize beyond their training data and remain robust in the face of adversarial inputs or distribution shifts is a significant challenge.

Generalization Issues:

- Overfitting: Models may perform well on training data but fail to generalize to new, unseen data.

- Distribution Shift: When the distribution of data in the real world differs from the training data, model performance can degrade significantly.
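One simple way to watch for distribution shift is to compare how a feature is distributed in the training data and in live data. The sketch below computes the Population Stability Index, PSI = Σ (a_i - e_i) · ln(a_i / e_i), where e_i and a_i are the fractions of training and live values in bin i. The bin edges and the small epsilon that keeps ln finite for empty bins are assumptions you would tune. Commonly cited rules of thumb treat values above roughly 0.1 to 0.25 as a meaningful shift, but those thresholds are conventions, not standards.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Fraction of values per bin; bin i holds values with exactly i edges <= value."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    e_frac = bin_fractions(expected, edges)
    a_frac = bin_fractions(actual, edges)
    total = 0.0
    for e, a in zip(e_frac, a_frac):
        # Clamp empty bins to eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


edges = [0.25, 0.5, 0.75]
train = [i / 100 for i in range(100)]                 # spread evenly over [0, 1)
live_same = [i / 100 + 0.005 for i in range(100)]     # same spread, tiny offset
live_shifted = [0.5 + i / 200 for i in range(100)]    # squeezed into [0.5, 1)
print(psi(train, live_same, edges))     # 0.0
print(psi(train, live_shifted, edges))  # about 6.6, a severe shift
```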

Robustness Challenges:

- Adversarial Attacks: Carefully crafted inputs can fool models into making incorrect predictions.

- Out-of-Distribution Samples: Models often struggle with inputs that are significantly different from their training data.
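To make adversarial attacks concrete, the sketch below applies the fast gradient sign method (FGSM) to a hypothetical logistic-regression model. FGSM moves each input feature by ε in the direction that increases the model's loss. For logistic regression with binary cross-entropy, the gradient of the loss with respect to the input is (σ(w·x + b) - y) · w, so no autodiff library is needed. The weights, input, and ε are made-up values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """P(y = 1 | x) under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    """Return -1, 0, or 1 according to the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: x_adv = x + eps * sign(dLoss/dx)."""
    p = predict_proba(w, b, x)
    # For binary cross-entropy, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


w, b = [2.0, -1.0], 0.0   # hypothetical trained weights
x, y = [0.6, 0.4], 1      # w.x + b = 0.8, so p is about 0.69: classified as 1
x_adv = fgsm(w, b, x, y, eps=0.5)
print(x_adv)                       # roughly [0.1, 0.9]
print(predict_proba(w, b, x_adv))  # about 0.33: now classified as 0
```

Here ε is deliberately large so that a two-feature example flips. On high-dimensional inputs such as images, much smaller per-feature perturbations can be enough.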

To improve generalization and robustness, researchers are exploring techniques such as:

- Data Augmentation

- Transfer Learning

- Ensemble Methods

- Robust Optimization
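Of these, ensemble methods are the easiest to show in a few lines. The sketch below combines three classifiers by majority (hard) vote. If their mistakes fall on different examples, the vote can be right even where an individual model is wrong. The "models" here are hard-coded prediction lists standing in for trained classifiers.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine per-model label lists into one list by majority vote.

    Ties go to the label first encountered among the models' votes.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions_per_model)]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 1]
model_a = [1, 0, 0, 1, 0, 1]   # wrong at index 2
model_b = [1, 1, 1, 1, 0, 1]   # wrong at index 1
model_c = [1, 0, 1, 0, 0, 1]   # wrong at index 3
ensemble = majority_vote([model_a, model_b, model_c])
print([accuracy(y_true, m) for m in (model_a, model_b, model_c)])
print(accuracy(y_true, ensemble))  # 1.0
```

The gain depends on the errors being largely uncorrelated. Three models that fail on the same examples would gain nothing from voting.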

Computational Resources and Energy Consumption

The increasing complexity of machine learning models, particularly in deep learning, has led to significant computational and energy demands.

Key Concerns:

- Hardware Requirements: Training state-of-the-art models often requires expensive, specialized hardware like GPUs or TPUs.

- Energy Consumption: The energy required to train large models can have substantial environmental impacts.

- Scalability: As datasets grow larger, the computational resources required scale dramatically, potentially limiting widespread adoption.

To illustrate the growing computational demands, consider this table of estimated training costs for some well-known language models:

| Model | Parameters | Estimated Training Cost (USD) |
|---|---|---|
| BERT-large | 340M | $7,000 |
| GPT-3 | 175B | $4,600,000 |
| PaLM | 540B | $9,000,000 |
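Costs like these are driven mainly by total training compute. A widely used rule of thumb for dense transformer language models is that training takes roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The sketch below turns that into accelerator-hours and dollars. The 300-billion-token figure for GPT-3 comes from its paper. The sustained throughput per accelerator and the hourly price are placeholder assumptions, so the resulting dollar figure is illustrative only.

```python
def training_flops(n_params, n_tokens):
    """Rule of thumb for dense transformers: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


def accelerator_hours(total_flops, sustained_flops_per_second):
    return total_flops / sustained_flops_per_second / 3600


# GPT-3: 175B parameters, trained on roughly 300B tokens.
flops = training_flops(175e9, 300e9)

# Placeholder assumptions, not measured values:
SUSTAINED_FLOPS = 100e12   # 100 TFLOP/s sustained per accelerator
PRICE_PER_HOUR = 2.0       # USD per accelerator-hour

hours = accelerator_hours(flops, SUSTAINED_FLOPS)
cost = hours * PRICE_PER_HOUR
print(f"{flops:.2e} FLOPs, {hours:,.0f} accelerator-hours, about ${cost:,.0f}")
```

Under these assumptions the estimate is about 3.15e23 FLOPs and 875,000 accelerator-hours. Halving the assumed throughput doubles both the hours and the cost, which is one reason published cost estimates for the same model vary so widely.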

Researchers are actively working on addressing these challenges through:

- Model Compression Techniques: Pruning, quantization, and knowledge distillation.

- Efficient Architectures: Designing models that are inherently more computationally efficient.

- Green AI: Developing approaches to reduce the carbon footprint of AI research and deployment.
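Quantization is the most mechanical of these techniques. The sketch below shows symmetric per-tensor 8-bit quantization. Weights are mapped to integers in [-127, 127] using one scale factor and then mapped back, and the rounding error per weight is at most half the scale. Real frameworks add per-channel scales, zero points, and calibration data. This example only illustrates the core arithmetic, and the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, clamping defensively to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.09]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)          # [82, -127, 0, 50, -9]
print(max_error)  # no larger than scale / 2
```

Storing each weight as one signed byte instead of a 32-bit float cuts weight storage by about 4x. The trade-off is the rounding error, visible here in the small weight 0.003, which becomes 0.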


In conclusion, while machine learning techniques have achieved remarkable successes, addressing these challenges is crucial for the continued advancement and responsible deployment of AI systems. Researchers and practitioners must remain vigilant in developing solutions that not only push the boundaries of what’s possible but also ensure that machine learning can be applied ethically, efficiently, and effectively in real-world scenarios.

Getting Started with Machine Learning

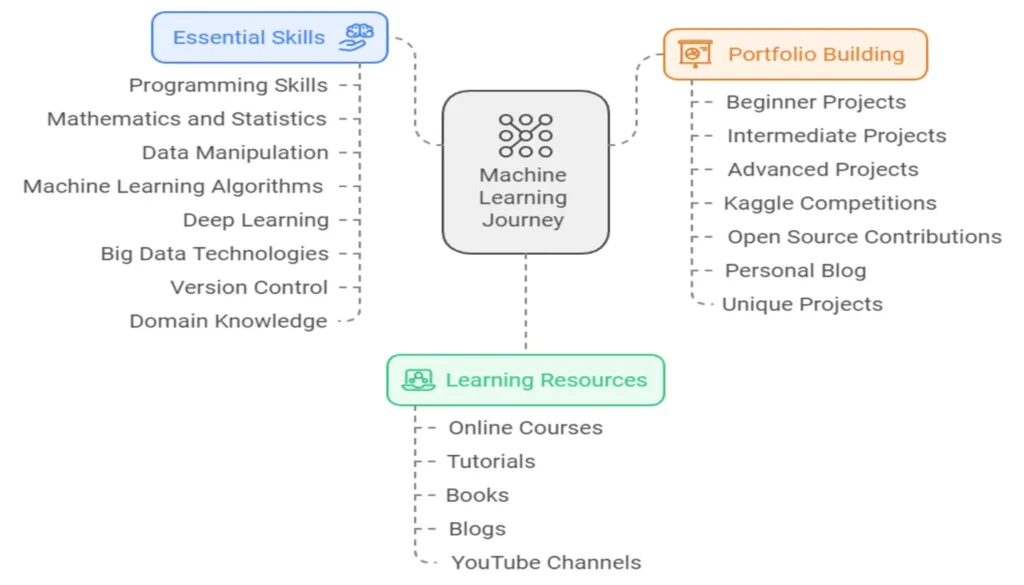

Embarking on a journey into the world of machine learning can be both exciting and daunting. This section will provide you with a roadmap to begin your machine learning adventure, covering essential skills, learning resources, and practical project ideas to build your portfolio.

Essential skills for aspiring machine learning practitioners

To become proficient in machine learning techniques, aspiring practitioners should focus on developing a robust skill set. Here are the key areas to concentrate on:

- Programming Skills
  - Python
  - R (optional but beneficial)
  - SQL for database management

- Mathematics and Statistics
  - Linear Algebra
  - Calculus
  - Probability and Statistics

- Data Manipulation and Analysis
  - Data cleaning and preprocessing
  - Exploratory Data Analysis (EDA)
  - Feature engineering

- Machine Learning Algorithms
  - Supervised learning techniques
  - Unsupervised learning methods
  - Reinforcement learning basics

- Deep Learning
  - Neural network architectures
  - Framework proficiency (TensorFlow, PyTorch)

- Big Data Technologies
  - Hadoop ecosystem
  - Apache Spark

- Version Control and Collaboration
  - Git and GitHub

- Domain Knowledge
  - Understanding of the field you’re applying ML to

Of these, programming, mathematics, and machine learning algorithms form the core of the required skill set.
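Two of the most common preprocessing and feature-engineering steps are standardizing numeric features and one-hot encoding categorical ones. The minimal plain-Python sketch below shows both. The function names and sample values are illustrative, and libraries such as scikit-learn provide tested equivalents.

```python
import statistics


def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std if std > 0 else 0.0 for v in values]


def one_hot(categories):
    """Map each category to a 0/1 vector; columns follow sorted category names."""
    vocab = sorted(set(categories))
    return vocab, [[1 if c == v else 0 for v in vocab] for c in categories]


ages = [22, 35, 58, 41]
colors = ["red", "green", "red", "blue"]
print(standardize(ages))
vocab, encoded = one_hot(colors)
print(vocab)    # ['blue', 'green', 'red']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

In a real project, compute the mean, standard deviation, and category vocabulary on the training split only, then reuse them for validation and test data so that no information leaks from the evaluation set.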

Online courses, tutorials, and resources

The internet is brimming with high-quality resources for learning machine learning techniques. Here’s a curated list of some of the best online courses, tutorials, and resources to kickstart your learning journey:

- Online Courses

- Tutorials and Documentation

- Books
  - “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron
  - “Python for Data Analysis” by Wes McKinney
  - “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- Blogs and Websites

- YouTube Channels

Building a portfolio of machine learning projects

Creating a strong portfolio is crucial for demonstrating your skills to potential employers or clients. Here’s a step-by-step guide to building an impressive machine learning portfolio:

- Start with Beginner-Friendly Projects
  - Iris flower classification
  - Titanic survival prediction
  - Boston housing price prediction (scikit-learn removed the Boston dataset in version 1.2; the California housing dataset is a common substitute)

- Move to Intermediate Projects
  - Image classification using CNNs
  - Sentiment analysis of movie reviews
  - Stock price prediction using time series analysis

- Take on Advanced Projects
  - Implementing a recommendation system
  - Building a chatbot using NLP techniques
  - Creating a generative model (e.g., using GANs)

- Participate in Kaggle Competitions
  - Join ongoing competitions
  - Work on past competitions to benchmark your skills

- Contribute to Open Source Projects
  - Find ML-related projects on GitHub
  - Contribute code, documentation, or bug fixes

- Create a Personal Blog or Website
  - Document your learning journey
  - Write tutorials and share insights

- Develop a Unique Project
  - Identify a problem in your field of interest
  - Apply ML techniques to solve it innovatively
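For a first project such as Iris flower classification, it can help to implement a simple algorithm by hand before switching to a library. The sketch below is a minimal k-nearest-neighbors classifier that uses Euclidean distance and a majority vote among the k closest training points. The six training points are made-up values in the general range of Iris petal length and width, not rows from the actual dataset.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Predict the label of x by majority vote among its k nearest training points."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Illustrative (petal length, petal width) pairs.
train_X = [[1.4, 0.2], [1.3, 0.2], [1.5, 0.3], [4.7, 1.4], [4.5, 1.5], [4.9, 1.5]]
train_y = ["setosa", "setosa", "setosa", "versicolor", "versicolor", "versicolor"]
print(knn_predict(train_X, train_y, [1.6, 0.3]))  # setosa
print(knn_predict(train_X, train_y, [4.6, 1.3]))  # versicolor
```

Comparing this against scikit-learn's `KNeighborsClassifier` on the real dataset is a natural next step.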


Remember, the key to mastering machine learning techniques is consistent practice and application. As you progress through these projects, you’ll gain hands-on experience with various algorithms, data preprocessing methods, and model evaluation techniques.
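On the evaluation side, accuracy alone can be misleading, particularly when classes are imbalanced. The short sketch below computes precision, recall, and F1 for a binary classifier from its confusion-matrix counts. The label lists are illustrative.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class (0.0 when undefined)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # precision 2/3, recall 1/2, F1 4/7
```

For these labels accuracy is 5/8, yet recall shows that the model missed half of the positive cases.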

By following this guide, you’ll be well on your way to becoming proficient in machine learning techniques. The journey may be challenging, but the rewards of working in this cutting-edge field are immense. Keep learning, stay curious, and don’t be afraid to tackle complex problems – that’s where the real growth happens!


Conclusion

As we reach the end of our comprehensive journey through the world of machine learning techniques, it’s time to reflect on the key concepts we’ve explored and look ahead to the exciting future of AI and machine learning.

Recap of key machine learning techniques

Throughout this guide, we’ve delved into a wide array of machine learning techniques, each with its unique strengths and applications. Let’s recap some of the most important ones:

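| Technique | Key Features | Common Applications |
|---|---|---|
| Supervised Learning | Learns a mapping from labeled input-output examples | Classification, Regression, Image Recognition |
| Unsupervised Learning | Finds structure in unlabeled data | Clustering, Anomaly Detection, Dimensionality Reduction |
| Reinforcement Learning | Learns a policy by trial and error from reward signals | Game AI, Robotics, Autonomous Systems |
| Deep Learning | Multi-layer neural networks that learn hierarchical representations | Natural Language Processing, Computer Vision, Speech Recognition |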

These techniques form the foundation of modern machine learning, enabling us to tackle complex problems across various domains. From the simplicity of linear regression to the complexity of deep neural networks, each technique plays a crucial role in the AI ecosystem.

The future of AI and machine learning

The field of machine learning is evolving at a breakneck pace, with new techniques and applications emerging regularly. As we look to the future, several exciting trends are shaping the landscape of AI and machine learning:

- Explainable AI (XAI): As machine learning models become more complex, there’s a growing need for transparency and interpretability. XAI techniques aim to make black-box models more understandable, fostering trust and enabling wider adoption in critical domains like healthcare and finance.

- AutoML and Democratization: Automated Machine Learning (AutoML) tools are making it easier for non-experts to leverage the power of machine learning. This democratization of AI will lead to more widespread adoption across industries and potentially groundbreaking applications we haven’t yet imagined.

- Edge AI: The shift towards edge computing is bringing machine learning capabilities to devices with limited resources. This trend will enable real-time, low-latency AI applications in IoT devices, smartphones, and other edge devices.

- Quantum Machine Learning: The intersection of quantum computing and machine learning is an active research area that could eventually tackle some problems that are intractable for classical computers, although practical advantages over classical methods have yet to be clearly demonstrated.

- AI for Scientific Discovery: Machine learning techniques are increasingly being applied to accelerate scientific research in fields like drug discovery, materials science, and climate modeling.
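Much of what AutoML tools automate is the search over model and hyperparameter choices that practitioners would otherwise tune by hand. The minimal grid-search sketch below tries every combination in a small search space and keeps the one with the best validation score. The `validation_score` function is a hypothetical stand-in for "train a model with these settings and score it on held-out data". Many AutoML tools use more sample-efficient strategies, such as Bayesian optimization, instead of an exhaustive grid.

```python
from itertools import product


def grid_search(space, score_fn):
    """Evaluate every combination in `space` and return the best (params, score)."""
    names = sorted(space)
    best_params, best_score = None, float("-inf")
    for values in product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical stand-in for training plus validation; it peaks at
# learning_rate=0.1 and depth=4.
def validation_score(params):
    return -(params["learning_rate"] - 0.1) ** 2 - 0.01 * (params["depth"] - 4) ** 2


space = {"learning_rate": [0.01, 0.1, 0.3], "depth": [2, 4, 8]}
best, score = grid_search(space, validation_score)
print(best)  # {'depth': 4, 'learning_rate': 0.1}
```

The number of evaluations grows multiplicatively with each added hyperparameter (here 3 × 3 = 9), which is why smarter search strategies matter at scale.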

These advancements are not without challenges. As AI becomes more pervasive, we must grapple with important ethical considerations, such as:

- Privacy and Data Protection: Ensuring the responsible use of personal data in machine learning applications.

- Bias and Fairness: Addressing and mitigating biases in AI systems to ensure equitable outcomes for all.

- AI Safety: Developing robust techniques to ensure AI systems behave reliably and safely, especially in critical applications.
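Bias can be measured as well as discussed. One simple fairness metric is the demographic parity difference, which is the gap in positive-prediction rates between groups. The sketch below uses made-up predictions and group labels. A value of 0 means every group receives positive predictions at the same rate. Which fairness criterion is appropriate depends on the application, and demographic parity is only one of several.

```python
def positive_rate(preds):
    return sum(preds) / len(preds)


def demographic_parity_difference(preds, groups):
    """Largest gap in positive-prediction rate across groups (0 means parity)."""
    rates = {}
    for g in set(groups):
        rates[g] = positive_rate([p for p, member in zip(preds, groups) if member == g])
    return max(rates.values()) - min(rates.values()), rates


preds = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]   # 1 = positive decision (e.g. approved)
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(preds, groups)
print(rates)  # group a: 0.6, group b: 0.4
print(gap)    # about 0.2
```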

The future of machine learning is bright, with the potential to solve some of humanity’s most pressing challenges. From climate change mitigation to personalized medicine, machine learning techniques will play a pivotal role in shaping our future.